Fundamentals

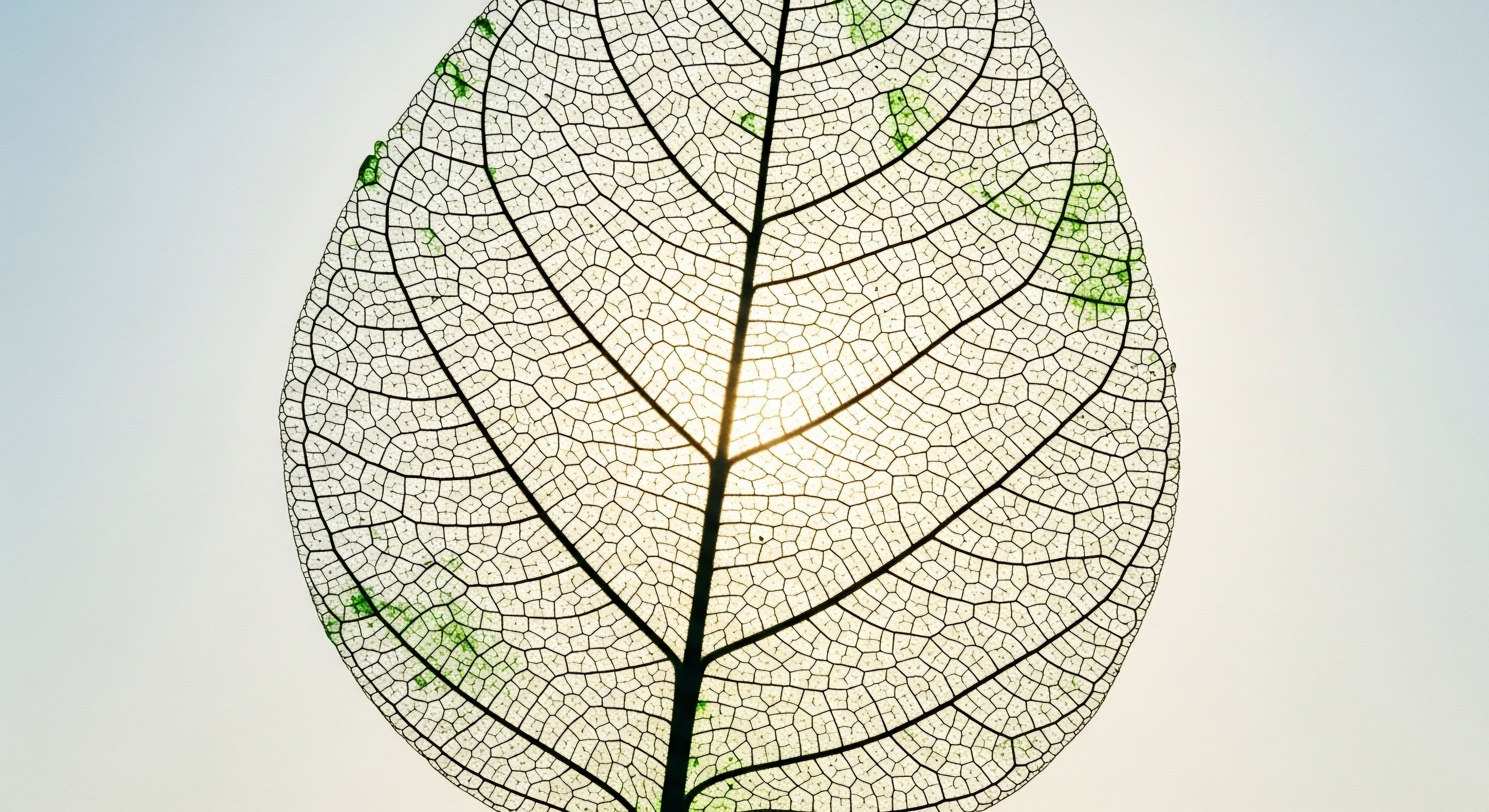

Your body is a source of deeply personal information. The rhythmic flux of your hormones, the subtle shifts in your metabolic function, and the very code written into your cells collectively form a biological narrative that is uniquely yours.

This is the data that explains why you feel the way you do: the fatigue that settles in midafternoon, the changes in your sleep patterns, the fluctuations in mood and energy that define your daily experience. When you decide to work with a wellness provider, you are entrusting them with this story.

The question of how that story is protected, how your data is rendered anonymous, is therefore a foundational one. It is a query rooted in the understanding that your biological information is more than just numbers on a lab report; it is the blueprint of your vitality.

The process of making your health data anonymous begins with a principle of profound respect for its sensitivity. A wellness vendor dedicated to personalized health protocols recognizes that information about your testosterone, estrogen, progesterone, or thyroid levels is intimately tied to your sense of self, your health goals, and your lived experience.

The initial and most direct step in this process is called de-identification. This is a meticulous procedure designed to sever the link between your identity and your biological data. It is a deliberate and systematic stripping away of all personal details that could directly point back to you.

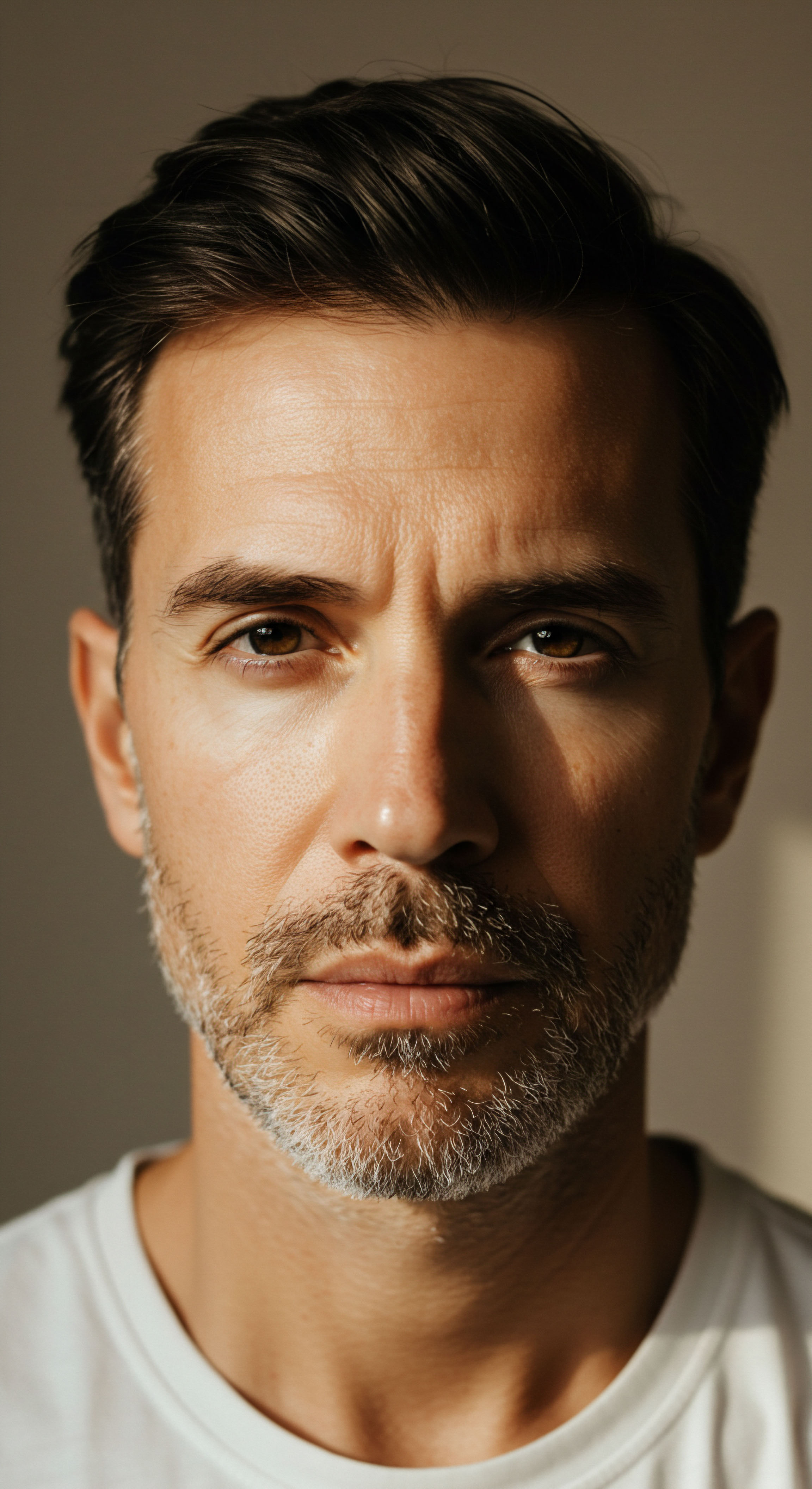

Your biological data tells a personal story of your health, and its protection begins by severing the link between that data and your identity.

Imagine your health profile as a detailed portrait. De-identification carefully paints over the features that make the face recognizable: your name, your address, your date of birth, your phone number. The Privacy Rule issued under the federal Health Insurance Portability and Accountability Act (HIPAA) provides a clear framework for this, and its most prescriptive route is known as the Safe Harbor method.

This method specifies 18 categories of identifiers that must be removed, for the individual and for their relatives, employers, and household members, before data can be considered de-identified. These include the obvious, like names and Social Security numbers, but also subtler details like vehicle serial numbers, IP addresses, and full-face photographs or comparable images.

By removing these direct identifiers, a vendor takes the first critical step in transforming your personal health information into a dataset that can be used for analysis without exposing your identity. This is the foundational act of creating a secure space where your biological narrative can be studied for your benefit, without compromising the privacy of the person living that story.
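
To make the removal step concrete, here is a minimal Python sketch that scrubs a single patient record. The field names (`name`, `zip`, `birth_date`, and so on) are hypothetical. The sketch covers only a handful of the 18 Safe Harbor categories, so it is an illustration rather than a compliant implementation. It keeps only the birth year and the first three ZIP digits, which Safe Harbor allows under conditions the code does not verify.

```python
from datetime import date

# Hypothetical column names. A real Safe Harbor pipeline must handle all 18
# identifier categories in the HIPAA Privacy Rule; this is a small subset.
DIRECT_IDENTIFIERS = {
    "name", "street_address", "phone", "email", "ssn",
    "ip_address", "vehicle_serial", "photo_url",
}

def scrub_record(record: dict) -> dict:
    """Drop direct identifiers and coarsen dates and ZIP codes (illustrative only)."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    # Dates tied to the person may keep only the year. (Ages over 89 must also
    # be grouped into a single 90+ category; that rule is not handled here.)
    if "birth_date" in out:
        out["birth_year"] = out.pop("birth_date").year
    # The first three ZIP digits may stay only if that area has more than
    # 20,000 residents; this sketch does not check population counts.
    if "zip" in out:
        out["zip3"] = out.pop("zip")[:3]
    return out

patient = {
    "name": "John Smith", "phone": "555-0100", "birth_date": date(1978, 4, 2),
    "zip": "94110", "total_testosterone_ng_dl": 412, "shbg_nmol_l": 38,
}
print(scrub_record(patient))
```

The lab values pass through untouched. Only the fields that point back to the person are dropped or coarsened.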

The Nature of Biological Data

The information gathered in a wellness context is uniquely revealing. A standard blood panel might measure your levels of free and total testosterone, estradiol, and sex hormone-binding globulin (SHBG). These markers do more than just diagnose a condition; they offer a window into your energy, libido, cognitive function, and emotional state.

Similarly, peptide therapies targeting growth hormone release, like Sermorelin or Ipamorelin, are monitored through markers like IGF-1. This data paints a picture of your body’s regenerative capacity and metabolic efficiency. It is a language of biological function, and its vocabulary is deeply personal.

Therefore, the imperative to anonymize this data is not merely a matter of regulatory compliance. It is an ethical commitment to honor the trust you have placed in a provider. The goal is to isolate the pure biological information (the patterns, the correlations, the responses to treatment) from the person to whom it belongs.

This allows clinical teams to analyze trends, refine protocols, and contribute to a broader understanding of human physiology, all while ensuring that your personal story remains yours alone. The initial de-identification is the first and most crucial step in a layered strategy of data stewardship.

What Is Pseudonymization?

Beyond simple de-identification, many advanced wellness vendors employ a technique called pseudonymization. If de-identification removes your name from the portrait, pseudonymization gives the portrait a unique, non-identifying code name. Your data profile is assigned a randomly generated alphanumeric code that cannot be worked backward to reveal who you are. The table linking that code to your identity is kept separately, under strict access controls. For example, your entire history (lab results, protocol adjustments, subjective feedback) is linked not to “John Smith,” but to an identifier like “A7B3-9K4P-C2X8”.

This technique offers a significant advantage: it allows the vendor to track your progress longitudinally. Your clinician can see how your testosterone levels responded to a change in protocol or how your metabolic markers improved after three months of a specific therapy. This continuity is essential for effective, personalized care.

Yet, for anyone outside the secure clinical environment, the code is meaningless. It is a key that only unlocks information within the trusted system, providing a powerful layer of protection that allows for both rigorous analysis and steadfast privacy. This method ensures that your health journey can be meticulously managed without ever attaching your public identity to the sensitive data that charts its course.
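
A minimal Python sketch of the idea follows. It assumes a purely random code rather than, say, a keyed hash of your name, so nothing about the code can be computed from your identity. The hypothetical `PseudonymVault` class stands in for the separately secured table that maps people to codes. It is illustrative, not any particular vendor's system.

```python
import secrets
import string

ALPHABET = string.ascii_uppercase + string.digits

def random_code() -> str:
    """Random 12-character code formatted like A7B3-9K4P-C2X8."""
    chars = "".join(secrets.choice(ALPHABET) for _ in range(12))
    return "-".join(chars[i:i + 4] for i in range(0, 12, 4))

class PseudonymVault:
    """Hypothetical key store holding the identity-to-code table.

    In practice this table would live apart from the analytics data, behind
    separate access controls; only the vault can re-link a code to a person.
    """

    def __init__(self) -> None:
        self._code_for = {}
        self._used = set()

    def pseudonym_for(self, identity: str) -> str:
        # The same person always gets the same code, so results link over time.
        if identity not in self._code_for:
            code = random_code()
            while code in self._used:  # avoid the (unlikely) collision
                code = random_code()
            self._used.add(code)
            self._code_for[identity] = code
        return self._code_for[identity]

vault = PseudonymVault()
lab_results = [
    ("John Smith", "2024-01-10", 412),  # hypothetical testosterone results, ng/dL
    ("John Smith", "2024-04-12", 655),
]
# The analytics dataset carries only the code, never the name.
analytics_rows = [(vault.pseudonym_for(who), day, value) for who, day, value in lab_results]
print(analytics_rows)
```

Both rows share one code, so a clinician can follow the trend. Someone holding only `analytics_rows` sees no name.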

Intermediate

To truly secure your biological narrative, a wellness vendor must move beyond the foundational steps of de-identification and embrace more sophisticated statistical and computational methods. The core challenge is this: even after direct identifiers like your name and address are removed, patterns within the remaining data could potentially be used to single you out.

These remaining fields, such as your age, zip code, and gender, are known as quasi-identifiers. If you are the only 45-year-old male in a specific zip code who has undergone a particular advanced peptide therapy, a combination of these quasi-identifiers could compromise your anonymity. Addressing this residual risk is the hallmark of a technically proficient and ethically committed organization.

This is where a family of privacy-enhancing technologies comes into play. These are not simple redactions but statistical transformations of the data itself, designed to make it very difficult to isolate an individual record. The goal is to create ambiguity, to group your data with others in a way that provides plausible deniability.

Your individual data point becomes one of many, hidden in a carefully constructed crowd. This requires a deep understanding of the data’s structure and the potential ways it could be linked with external information sources. The process is an intricate balance between preserving the statistical integrity of the data for analysis and guaranteeing the privacy of each person who contributed to it.

Advanced anonymization techniques statistically transform data, intentionally creating ambiguity to ensure no single individual can be isolated from the group.

One of the most established methods in this domain is k-anonymity. The principle is straightforward yet powerful: the dataset is modified so that for any given individual, there are at least ‘k-1’ other individuals who share the same set of quasi-identifiers.

If ‘k’ is set to 10, for example, any combination of age, gender, and zip code that appears in the released dataset is shared by at least 10 different people. This is achieved through techniques like generalization (e.g., replacing a specific age of 47 with an age range of 45-50) and suppression (withholding a value, or an entire record, that remains too rare after generalization).

By enforcing this ‘k’ threshold, a vendor ensures that an outside party who tries to link a specific person to a record through those quasi-identifiers can, at best, narrow the search to a group of at least k records. Your data portrait is now blended into a group photo with at least ‘k-1’ similar-looking individuals, making you far harder to pick out of the crowd.
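
The generalize-then-suppress procedure described above can be sketched in Python. The column names, the 5-year age bands, and the 3-digit ZIP prefix are illustrative assumptions. Production tools search over many generalization levels to lose as little detail as possible, whereas this sketch applies one fixed level.

```python
from collections import Counter

QUASI_IDENTIFIERS = ("age", "zip", "gender")  # assumed column names

def generalize(rec: dict) -> dict:
    """Replace exact age with a 5-year band and keep only a 3-digit ZIP prefix."""
    low = rec["age"] // 5 * 5
    return {**rec, "age": f"{low}-{low + 4}", "zip": rec["zip"][:3] + "**"}

def qi_key(rec: dict) -> tuple:
    return tuple(rec[q] for q in QUASI_IDENTIFIERS)

def is_k_anonymous(records: list, k: int) -> bool:
    """True if every quasi-identifier combination is shared by at least k records."""
    return all(n >= k for n in Counter(qi_key(r) for r in records).values())

def k_anonymize(records: list, k: int) -> list:
    generalized = [generalize(r) for r in records]
    sizes = Counter(qi_key(r) for r in generalized)
    # Suppression: withhold records whose group is still smaller than k.
    return [r for r in generalized if sizes[qi_key(r)] >= k]

patients = [
    {"age": 47, "zip": "94110", "gender": "M", "protocol": "TRT"},
    {"age": 45, "zip": "94117", "gender": "M", "protocol": "Sermorelin"},
    {"age": 49, "zip": "94103", "gender": "M", "protocol": "none"},
    {"age": 62, "zip": "10001", "gender": "F", "protocol": "HRT"},
]
released = k_anonymize(patients, k=3)
print(is_k_anonymous(patients, 3), is_k_anonymous(released, 3), len(released))  # False True 3
```

The three men in their late forties now share the same generalized profile. The lone 62-year-old record cannot reach a group of three, so it is suppressed.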

Moving Beyond K-Anonymity

While k-anonymity provides a strong baseline, it has limitations. Imagine a k-anonymous group of 10 individuals where all 10 happen to have the same sensitive information; for instance, they are all on a specific protocol for managing low testosterone. Suppose an adversary knows you are in the dataset and also knows your age, gender, and zip code. They can place you in that group and infer your treatment without ever learning which record is yours. This is called a homogeneity attack. To counter this, more advanced methods were developed.

L-diversity is the next logical step. This principle extends k-anonymity by requiring that each group of indistinguishable records (each equivalence class) contains at least ‘l’ distinct values for each sensitive attribute. So, in our group of 10, an l-diversity rule might require that there are at least 3 different types of treatment protocols represented. This introduces a vital layer of ambiguity around the sensitive data itself, making it much harder to draw specific inferences.
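
A check for the simplest variant, "distinct" l-diversity, fits in a few lines of Python. The original paper also defines stricter entropy-based and recursive variants that this sketch does not implement. The column names and protocol labels are hypothetical.

```python
from collections import defaultdict

def is_l_diverse(records, quasi_ids, sensitive, l):
    """Distinct l-diversity: each equivalence class holds >= l distinct sensitive values."""
    classes = defaultdict(set)
    for r in records:
        classes[tuple(r[q] for q in quasi_ids)].add(r[sensitive])
    return all(len(values) >= l for values in classes.values())

group = {"age": "45-49", "zip": "941**", "gender": "M"}
homogeneous = [{**group, "protocol": "TRT"} for _ in range(3)]
diverse = [{**group, "protocol": p} for p in ("TRT", "Sermorelin", "none")]
qi = ("age", "zip", "gender")
print(is_l_diverse(homogeneous, qi, "protocol", 2))  # False: everyone shares one protocol
print(is_l_diverse(diverse, qi, "protocol", 3))      # True
```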

An even more refined method is t-closeness. This model recognizes that even with l-diversity, the distribution of sensitive values within a group might be skewed compared to the overall dataset, leaking information. For example, if the general population in the dataset has a 1% incidence of a particular condition, but a specific anonymous group has a 50% incidence, that’s a significant information leak.

T-closeness addresses this by requiring that the distribution of a sensitive attribute within any group stays close to its distribution in the entire dataset. The distance between the two, measured in the original proposal by the Earth Mover's Distance, must be no greater than a threshold ‘t’. This ensures that observing the data within a small group reveals little beyond what is already known from the dataset as a whole.
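
Some sensitive attributes are categorical, such as a treatment protocol or a yes/no diagnosis. If every pair of distinct values is treated as equally far apart, the Earth Mover's Distance reduces to half the sum of absolute differences between the two distributions. The Python sketch below uses that simplification. Numeric attributes such as hormone levels need the ordered-distance form instead, which is not shown. The records are synthetic and reproduce the 1% versus 50% example above.

```python
from collections import Counter, defaultdict

def distribution(values):
    counts = Counter(values)
    return {v: c / len(values) for v, c in counts.items()}

def equal_distance_emd(p, q):
    """EMD when all categories are equally distant = half the L1 difference."""
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))

def max_class_distance(records, quasi_ids, sensitive):
    overall = distribution([r[sensitive] for r in records])
    classes = defaultdict(list)
    for r in records:
        classes[tuple(r[q] for q in quasi_ids)].append(r[sensitive])
    return max(equal_distance_emd(distribution(v), overall) for v in classes.values())

def is_t_close(records, quasi_ids, sensitive, t):
    return max_class_distance(records, quasi_ids, sensitive) <= t

# 1 of 100 people has the condition overall (1%), but the two-person group "A"
# has a 50% incidence, so that group leaks information.
records = ([{"group": "A", "condition": "yes"}, {"group": "A", "condition": "no"}]
           + [{"group": "B", "condition": "no"} for _ in range(98)])
print(round(max_class_distance(records, ("group",), "condition"), 2))  # 0.49
print(is_t_close(records, ("group",), "condition", t=0.2))             # False
```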

A Comparison of Anonymization Models

Understanding the progression of these models reveals a deepening commitment to data privacy. Each model builds upon the last to close potential vulnerabilities, creating a more robust shield for your personal health information. A sophisticated wellness vendor will select the appropriate model based on the sensitivity of the data and its intended use.

| Technique | Primary Goal | Mechanism of Action | Protects Against |
|---|---|---|---|
| K-Anonymity | Prevents re-identification via quasi-identifiers. | Ensures each record is indistinguishable from at least k-1 others by generalizing or suppressing data. | Linkage attacks using demographic or other quasi-identifying data. |
| L-Diversity | Prevents attribute disclosure within a group. | Requires that each group of k-anonymous records contains at least ‘l’ different sensitive values. | Homogeneity attacks, where all individuals in a group share the same sensitive attribute. |
| T-Closeness | Prevents inference based on data distribution. | Ensures the distribution of sensitive values in a group is statistically similar to their distribution in the overall dataset. | Skewness attacks, where the distribution of values in a group leaks information. |

How Do Legal Frameworks Guide These Technical Steps?

The technical methodologies for anonymization operate within robust legal and ethical frameworks. In the United States, HIPAA provides two primary pathways for a vendor to certify data as de-identified.

- The Safe Harbor Method: This is a prescriptive approach. It involves the explicit removal of 18 specific types of identifiers, and the vendor must also have no actual knowledge that the remaining information could identify an individual. This checklist-based method is straightforward to implement and verify, and it serves as the baseline standard for de-identification.

- The Expert Determination Method: This is a more flexible, risk-based approach. It allows a qualified statistician or data scientist to apply their expertise to assess whether the risk of re-identification is very small. This method is often used for complex datasets where simply removing the 18 identifiers might strip too much utility from the data. The expert can use sophisticated statistical models to analyze the dataset and attest that the likelihood of any individual being identified is acceptably low.

A trustworthy wellness vendor will have a clear policy detailing which method they use and why. Their internal governance will include data privacy experts who can navigate these regulations and select the most appropriate anonymization techniques to ensure that the handling of your sensitive hormonal and metabolic data meets the highest standards of both legal compliance and ethical responsibility.

Academic

The conventional frameworks of data anonymization, while foundational, are confronting a paradigm-shifting challenge at the intersection of high-dimensional biology and computational power. The very nature of the data collected for personalized wellness (dense, longitudinal, and deeply interconnected) creates a re-identification risk profile that transcends simple demographic linkage.

A dataset containing your complete hormonal cascade, metabolic markers, genomic single nucleotide polymorphisms (SNPs), and continuous glucose monitoring outputs represents a physiological signature of immense specificity. The academic and ethical frontier lies in confronting the reality that with sufficient data dimensionality, true and permanent anonymization may be an asymptotic goal, a limit that is approached but perhaps never fully reached.

This leads to a critical re-evaluation of risk. The classic threat model involves an adversary attempting to link a record in an anonymized health database to a named individual in a public record, like voter registration files. However, the modern threat landscape is more complex.

The “adversary” could be a machine learning model designed not to unmask a name, but to predict a future health outcome or insurance risk profile associated with a specific, albeit unnamed, individual. A 2019 study published in Nature Communications estimated that 99.98% of Americans would be correctly re-identified in any dataset using just 15 demographic attributes.

A comprehensive wellness panel contains hundreds of biological attributes. With that many attributes, the likelihood that a record forms a unique, and therefore potentially re-identifiable, fingerprint rises sharply. The conversation must therefore evolve from simple de-identification to a more sophisticated discourse on probabilistic privacy and computational safeguards.
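
The effect of dimensionality can be illustrated with a small simulation. The Python sketch below assumes a synthetic cohort of 10,000 people whose lab markers are independent and binned into five coarse levels. Real markers are correlated, so actual numbers would differ. The sketch reports what fraction of records are unique as more markers are combined. Adding a column can only split groups further, so that fraction never decreases.

```python
import random
from collections import Counter

def fraction_unique(rows, n_cols):
    """Share of rows whose first n_cols values match no other row."""
    keys = [tuple(row[:n_cols]) for row in rows]
    counts = Counter(keys)
    return sum(1 for key in keys if counts[key] == 1) / len(rows)

rng = random.Random(42)
# Synthetic, assumed data: 12 independent markers, each binned into 5 levels.
rows = [[rng.randrange(5) for _ in range(12)] for _ in range(10_000)]
for d in (1, 2, 4, 6, 8, 10, 12):
    print(f"{d:2d} markers: {fraction_unique(rows, d):.1%} of records unique")
```

With a single coarse marker no one stands out. By the time a dozen are combined, nearly every synthetic record is one of a kind.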

In high-dimensional biological datasets, the potential for re-identification shifts from a simple linkage problem to a complex challenge of statistical inference and computational security.

This is where the concept of Differential Privacy (DP) emerges as the gold standard in privacy-preserving data analysis. Differential privacy is a mathematical definition of privacy that provides a provable guarantee. Its core idea is to ensure that the probability distribution of any analysis’s output remains almost identical, whether or not any single individual’s data is included in the dataset.

This is typically achieved by injecting a carefully calibrated amount of statistical “noise” into the results of a query. The mechanism ensures that an observer of the output cannot reliably determine if your specific data contributed to the result, thus protecting your privacy.

The strength of this privacy guarantee is controlled by a parameter called epsilon (ε). A smaller epsilon means more noise and stronger privacy, while a larger epsilon means less noise, higher data utility, and a weaker privacy guarantee.
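
A standard way to add that noise to a numeric query is the Laplace mechanism. It adds noise drawn from a Laplace distribution with scale Δ/ε, where Δ (the sensitivity) is the most one person's data can change the true answer. The Python sketch below uses only the standard library, and the patient count is hypothetical. It illustrates the trade-off just described: the typical (mean absolute) error equals the scale, so it grows tenfold each time ε shrinks tenfold.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials with that mean."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def laplace_mechanism(true_value: float, sensitivity: float, epsilon: float,
                      rng: random.Random) -> float:
    """Return true_value plus Laplace noise of scale sensitivity / epsilon (epsilon-DP)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_value + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(7)
true_count = 132  # hypothetical: number of patients on a given protocol
for eps in (0.1, 1.0, 10.0):
    # Adding or removing one person changes a count by at most 1, so sensitivity = 1.
    noisy = laplace_mechanism(true_count, sensitivity=1.0, epsilon=eps, rng=rng)
    print(f"epsilon={eps:>4}: released count {noisy:.1f} (typical error {1.0 / eps:g})")
```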

The Implementation of Differential Privacy

A wellness vendor committed to state-of-the-art data protection would implement differential privacy at the point of analysis. When their data science team queries the collective dataset to, for example, analyze the average IGF-1 response to a six-month Sermorelin protocol, the system would not return the exact raw average. Instead, it would return the average plus a small amount of random noise drawn from a specific mathematical distribution (often the Laplace or Gaussian distribution).

This process has profound implications:

- It moves protection from the dataset to the output: Unlike k-anonymity, which modifies the dataset itself, differential privacy protects privacy by modifying the answers to questions asked of the dataset. The underlying data can remain in its original, high-fidelity form within a secure environment.

- It provides a tunable, mathematical guarantee: The privacy loss (ε) is quantifiable. An organization can make a precise, auditable statement about the level of privacy it guarantees, creating a transparent contract with its users.

- It is resilient to post-processing: A key theorem in differential privacy states that an analyst without access to the raw data cannot weaken the privacy guarantee by performing any computation on the differentially private output. Once the protection is applied, it holds.

The challenge, however, lies in the inherent trade-off between privacy and accuracy. For a clinical organization, this is a delicate balance. The noise added to protect privacy must be small enough that it does not lead to incorrect clinical conclusions. Fine-tuning the epsilon value for different types of analyses (from large-scale population research to fine-grained protocol optimization) is a complex task that requires deep expertise in both data science and clinical science.
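
The IGF-1 query described above can be sketched in Python. The sketch makes several assumptions that the text does not state:

- IGF-1 values are clamped to a hypothetical range of 0 to 600 ng/mL, so that one person's influence is bounded.
- The privacy budget ε is split evenly between a noisy sum and a noisy count. By sequential composition, the two releases together cost ε.
- The cohort values are synthetic.

Dividing the two noisy results is post-processing, so it uses no additional budget.

```python
import random

def laplace(scale: float, rng: random.Random) -> float:
    # Difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def dp_mean(values, upper: float, epsilon: float, rng: random.Random) -> float:
    """epsilon-DP mean of values clamped to [0, upper] (add/remove-one-person neighbors)."""
    clamped = [min(max(v, 0.0), upper) for v in values]
    half = epsilon / 2
    # One person shifts the clamped sum by at most `upper` and the count by at most 1.
    noisy_sum = sum(clamped) + laplace(upper / half, rng)
    noisy_count = len(clamped) + laplace(1.0 / half, rng)
    # Post-processing of already-private outputs: no extra privacy cost.
    return min(max(noisy_sum / max(noisy_count, 1.0), 0.0), upper)

cohort_rng = random.Random(1)
igf1 = [cohort_rng.gauss(250, 40) for _ in range(2000)]  # synthetic IGF-1, ng/mL
exact = sum(min(max(v, 0.0), 600.0) for v in igf1) / len(igf1)
private = dp_mean(igf1, upper=600.0, epsilon=1.0, rng=random.Random(3))
print(f"exact mean {exact:.1f} ng/mL, differentially private mean {private:.1f} ng/mL")
```

With 2,000 participants the noise shifts the mean by roughly a nanogram per milliliter or less. A cohort of 20 would see errors about a hundred times larger, which is the clinical side of the trade-off.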

Advanced Cryptographic and Architectural Solutions

What happens when even the entity holding the data should not be able to see it in its raw form? This question pushes us to the frontier of cryptographic solutions that could fundamentally reshape data privacy in personalized medicine.

| Advanced Technique | Core Principle | Application in Wellness | Primary Advantage |
|---|---|---|---|
| Homomorphic Encryption | Allows computations to be performed on encrypted data without decrypting it first. | A vendor could analyze encrypted hormone levels to identify trends without ever accessing the raw, unencrypted values. | Provides the highest level of data security, as the data processor never sees the plaintext data. |
| Federated Learning | Trains a central machine learning model on decentralized data without the data ever leaving its source location. | A global model for predicting metabolic outcomes could be trained on data from thousands of users, but each user’s data remains on their local device (e.g., a smartphone app). Only the model updates are shared. | Minimizes data transfer and centralization, reducing the risk of large-scale data breaches. |
| Secure Multi-Party Computation (SMPC) | Enables multiple parties to jointly compute a function over their inputs while keeping those inputs private. | Multiple clinics could pool their data to calculate the efficacy of a new peptide protocol without any single clinic revealing its specific patient data to the others. | Enables collaborative research and analysis without a central trusted third party. |
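
The SMPC row can be made concrete with additive secret sharing, one of the simplest building blocks for secure aggregation. The Python sketch below simulates three clinics in a single process. It assumes semi-honest parties that follow the protocol and do not collude. Real deployments add networking, authentication, and defenses against malicious behavior. The clinic counts are hypothetical.

```python
import secrets

MODULUS = 2**61 - 1  # public modulus agreed in advance (assumed); totals must stay below it

def share(value: int, n_parties: int) -> list:
    """Split value into n random shares that sum to value modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_inputs: list) -> int:
    n = len(private_inputs)
    # Clinic i splits its input and sends share j to clinic j.
    dealt = [share(x, n) for x in private_inputs]
    # Each clinic j adds the shares it received; any single share is uniformly random.
    partial = [sum(dealt[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Publishing the partial sums reveals the total but not any clinic's own input.
    return sum(partial) % MODULUS

responders = [37, 52, 18]  # hypothetical responder counts at three clinics
print(secure_sum(responders))  # 107
```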

The Enduring Challenge of Re-Identification Risk

Even with these advanced techniques, the risk of re-identification is a persistent, dynamic variable. It is not a static property of a dataset but is influenced by the ever-growing universe of publicly available information. The risk of linking an “anonymized” health record increases as more auxiliary datasets become available, from consumer purchasing habits to social media activity.

Regulatory bodies are beginning to acknowledge this. Health Canada, for example, recommends a specific quantitative threshold (a re-identification risk of 0.09, or 9%) for data to be considered de-identified. This acknowledges that zero risk is an illusion; the goal is to reduce the risk to a quantifiable and acceptably low level.
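
Two measures common in the de-identification literature show how such a threshold can be checked:

- The maximum risk is 1 divided by the size of the smallest group of records that share the same quasi-identifiers.
- The average risk is the number of such groups divided by the number of records.

The Python sketch below assumes that a 0.09 threshold is applied to the maximum risk. That means every group needs at least 12 records (1/12 ≈ 0.083, whereas 1/11 ≈ 0.091). Which measure a given guideline applies, and in which release setting, should be taken from the guidance itself. The column names are hypothetical.

```python
from collections import Counter

THRESHOLD = 0.09  # assumed to apply to the maximum (worst-case) risk

def reidentification_risk(records, quasi_ids):
    """Return (maximum risk, average risk) over equivalence classes of quasi-identifiers."""
    sizes = Counter(tuple(r[q] for q in quasi_ids) for r in records)
    max_risk = 1 / min(sizes.values())
    avg_risk = len(sizes) / len(records)  # mean of 1/class_size across records
    return max_risk, avg_risk

qi = ("age_band", "sex")
release = ([{"age_band": "40-49", "sex": "M"} for _ in range(12)]
           + [{"age_band": "50-59", "sex": "F"} for _ in range(12)])
with_small_group = release + [{"age_band": "60-69", "sex": "M"} for _ in range(11)]

for name, data in (("release", release), ("with_small_group", with_small_group)):
    max_risk, avg_risk = reidentification_risk(data, qi)
    verdict = "meets" if max_risk <= THRESHOLD else "exceeds"
    print(f"{name}: max {max_risk:.3f}, avg {avg_risk:.3f} -> {verdict} {THRESHOLD}")
```

Adding a single group of 11 records is enough to push the worst-case risk over the line. That is why such checks are typically rerun whenever a dataset changes.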

A forward-thinking wellness vendor, therefore, treats anonymization not as a one-time event, but as a continuous process of risk management, constantly re-evaluating their datasets against the evolving external data landscape and adopting new technologies to stay ahead of emerging threats.

References

- El Emam, K., & Dankar, F. K. (2008). Protecting Privacy Using k-Anonymity. Journal of the American Medical Informatics Association, 15(5), 627-637.

- Machanavajjhala, A., Kifer, D., Gehrke, J., & Venkitasubramaniam, M. (2007). l-Diversity: Privacy Beyond k-Anonymity. ACM Transactions on Knowledge Discovery from Data, 1(1), 3.

- Li, N., Li, T., & Venkatasubramanian, S. (2007). t-Closeness: Privacy Beyond k-Anonymity and l-Diversity. In 2007 IEEE 23rd International Conference on Data Engineering (pp. 106-115). IEEE.

- Office for Civil Rights (OCR). (2012). Guidance Regarding Methods for De-identification of Protected Health Information in Accordance with the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule. U.S. Department of Health & Human Services.

- Rocher, L., Hendrickx, J. M., & de Montjoye, Y.-A. (2019). Estimating the success of re-identifications in incomplete datasets using generative models. Nature Communications, 10(1), 3069.

- Dwork, C. (2008). Differential Privacy: A Survey of Results. In Theory and Applications of Models of Computation (pp. 1-19). Springer Berlin Heidelberg.

- El Emam, K. (2013). Guide to the De-Identification of Personal Health Information. Auerbach Publications.

- Shokri, R., & Shmatikov, V. (2015). Privacy-Preserving Deep Learning. In Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security (pp. 1310-1321).

- Narayanan, A., & Shmatikov, V. (2008). Robust De-anonymization of Large Sparse Datasets. In 2008 IEEE Symposium on Security and Privacy (pp. 111-125). IEEE.

- Jiang, X. et al. (2021). A Survey on Differential Privacy for Medical Data Analysis. IEEE Access, 9, 129255-129273.

Reflection

The journey to understand your own biology is profoundly personal. The data points that chart your hormonal fluctuations and metabolic responses are the language your body uses to communicate its needs, its strengths, and its vulnerabilities. The knowledge you have gained about how this language is protected is a critical component of your health literacy.

It forms the bedrock of the trust required to engage in a truly personalized wellness protocol. The methodologies, from the clear rules of Safe Harbor to the mathematical elegance of differential privacy, are more than technical procedures. They are the tangible expressions of a vendor’s commitment to honoring the sanctity of your biological story.

Consider now the information that constitutes your own health narrative. Think about the connection between the numbers on a lab report and the way you feel each day. This understanding of data stewardship is the first step. The next is to recognize that your path to vitality is unique.

The data is the map, but you are the navigator. The ultimate application of this knowledge is not simply to be a passive recipient of care, but to be an active, informed partner in the process. Your biology is your own, and the power to optimize it begins with the confidence that its story will be told with integrity, precision, and profound respect.