Fundamentals

The information you record in a wellness application is a direct transcript of your body’s internal dialogue. Each entry about sleep quality, mood fluctuations, menstrual cycle timing, or dietary choices contributes to a detailed digital portrait of your physiological state. This collection of data points forms a sensitive biological narrative, a story told by your endocrine and metabolic systems.

Understanding how this story is handled, stored, and shared is the first step in protecting your most private information. The privacy policy of an application serves as the legal contract governing the use of your biological narrative. Its clarity, or lack thereof, reveals the developer’s true posture toward your personal health data.

Your journey into personal wellness is deeply individual, a process of listening to your body’s signals to restore balance and vitality. When you use a digital tool to aid this process, you are entrusting it with the raw data of that experience.

The language used in a privacy policy is the primary indicator of how that trust will be honored. A policy written with dense, impenetrable legal jargon is an immediate signal of concern. Transparency is a clinical necessity for trust, and a document designed to confuse is failing that primary test.

Such a document suggests that the business model may rely on activities you would not consent to if they were stated plainly. True consent requires comprehension, and a policy that obstructs comprehension is fundamentally flawed.

The Regulatory Landscape: A Common Misunderstanding

A prevalent belief is that health information entered into any digital platform is protected by the Health Insurance Portability and Accountability Act (HIPAA). This understanding is incomplete. HIPAA’s protections apply specifically to “covered entities,” a category that includes health care providers such as your doctor and hospital, health plans such as your insurance company, and health care clearinghouses. The protections also extend to the business associates that handle data on behalf of those entities.

Most direct-to-consumer wellness and fitness apps exist outside this framework. The data you voluntarily provide to them is classified as consumer health information, which falls under a different and less stringent set of regulations. This distinction is the single most important concept to grasp. Your app data, which may feel as sensitive as your clinical records, operates within a separate legal reality, one that affords corporations significant latitude in how they use and monetize your information.

The data you provide to most wellness apps is not protected by the same laws that govern your official medical records.

This regulatory gap creates a landscape where the user must become their own advocate. The responsibility for safeguarding this data shifts from the established protections of the healthcare system to the individual’s ability to critically assess an application’s terms of service.

The absence of HIPAA’s umbrella means that the promises made within the privacy policy are often the main enforceable commitments an app developer makes to you, backed chiefly by consumer protection rules against deceptive practices and by state privacy laws. Therefore, reading and understanding these documents is an act of biological self-advocacy. It is the digital equivalent of asking a clinician about the specifics of a treatment protocol. You are seeking to understand the benefits, the risks, and the long-term implications of sharing your body’s data.

What Are the First Signs of a Problematic Policy?

The initial assessment of a privacy policy begins with its accessibility and readability. A policy that is difficult to locate, buried deep within menus, or presented in a format that discourages reading is a primary red flag. Once located, the language itself provides the next layer of clues.

Policies that are excessively long and filled with convoluted sentences are designed to be agreed to, not understood. Look for clear, concise language that defines key terms. An honorable policy will explain what data is collected, why it is collected, and how it will be used in a way that a non-lawyer can comprehend.

Another immediate area of concern involves the permissions the application requests upon installation. An app’s functionality should align directly with the data it needs to access. A menstrual tracking app, for instance, has a legitimate need for calendar access. That same app likely has no functional requirement for access to your contacts, your microphone, or your photo library.

When an app requests permissions that extend far beyond its core purpose, it signals an intention to collect a wider spectrum of data than is necessary. This excess data can be used for a variety of secondary purposes, including building more detailed user profiles for marketing or sale. Scrutinizing these permission requests provides a clear, upfront indication of the app’s data collection philosophy.
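The permission check described above can be sketched in a few lines of Python. This is a minimal illustration, not a real audit tool: the app categories, the permission names, and the `EXPECTED_PERMISSIONS` table are all hypothetical and would need to be adapted to the platform and app you are actually reviewing.

```python
# Hypothetical mapping of app categories to the permissions their core
# purpose plausibly requires. These names are illustrative assumptions,
# not real platform permission identifiers.
EXPECTED_PERMISSIONS = {
    "menstrual_tracker": {"calendar", "notifications"},
    "step_counter": {"motion_sensors", "notifications"},
}


def excess_permissions(app_category, requested):
    """Return the requested permissions that fall outside the expected set."""
    expected = EXPECTED_PERMISSIONS.get(app_category, set())
    return sorted(set(requested) - expected)


# Example: a cycle tracker that also asks for contacts, microphone, and photos.
requested = ["calendar", "contacts", "microphone", "photo_library"]
flagged = excess_permissions("menstrual_tracker", requested)
print(flagged)  # ['contacts', 'microphone', 'photo_library']
```

Anything that appears in the flagged list is a prompt for a question, not proof of misuse: what feature needs this access, and does the policy say how the resulting data is used?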

- Vague Language: Policies that use ambiguous terms like “for business purposes,” “with trusted partners,” or “to improve our services” without specific definitions are a significant concern. These phrases are catch-alls that can permit a wide range of data sharing activities without your explicit and informed consent. A trustworthy policy will define who these partners are and what specific services are being improved.

- Absence of a Policy: An application that handles any form of personal data, especially health-related information, and does not provide a privacy policy is to be avoided entirely. This demonstrates a complete disregard for user privacy and legal standards, indicating that no rules govern their handling of your sensitive information.

- Overly Broad Consent: Be cautious of clauses that ask for consent to future, unspecified uses of your data. Agreeing to such a term means you are giving the company a blank check to use your biological data in ways they have not yet determined. Consent should be specific, informed, and limited to the purposes articulated at the time of collection.
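A first pass for the vague-language warning sign can be automated. The sketch below simply counts occurrences of the catch-all phrases named in the list above; the `VAGUE_PHRASES` list and the sample policy text are illustrative assumptions, and a phrase match only tells you where to read more closely, not whether the clause is actually harmful.

```python
import re

# Catch-all phrases taken from the red flags above. Extend this list as needed.
VAGUE_PHRASES = [
    "for business purposes",
    "trusted partners",
    "to improve our services",
]


def find_vague_phrases(policy_text):
    """Return (phrase, count) pairs for each phrase found, ignoring case."""
    hits = []
    for phrase in VAGUE_PHRASES:
        pattern = re.escape(phrase)
        count = len(re.findall(pattern, policy_text, flags=re.IGNORECASE))
        if count:
            hits.append((phrase, count))
    return hits


# Made-up policy excerpt used only to exercise the function.
sample = (
    "We may share your data with trusted partners for business purposes. "
    "Trusted partners help us to improve our services."
)
hits = find_vague_phrases(sample)
print(hits)
# [('for business purposes', 1), ('trusted partners', 2), ('to improve our services', 1)]
```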

Intermediate

Moving beyond the initial impressions of a privacy policy requires a more granular analysis of its specific clauses and the legal architecture they create. The central issue revolves around the commodification of your biological data stream. Wellness apps often operate on a business model where the primary asset is the aggregated data of their users.

This data has immense value to third parties, including advertisers, pharmaceutical researchers, and data brokers. The privacy policy is the document that legally permits this transfer of value. A careful reading reveals the mechanisms through which your personal health narrative is transformed into a corporate asset.

The distinction between Protected Health Information (PHI) under HIPAA and consumer health data is the fulcrum on which this entire system pivots. Understanding this difference is essential to appreciating the risks involved. PHI is generated within a clinical context and is tied to a specific diagnosis, treatment, or payment for healthcare.

Consumer health data is information you generate and record yourself, outside of that clinical relationship. While the data points may be identical (for example, a logged blood glucose reading), their legal status is entirely different. The policy’s language will reveal how the app developer leverages this distinction.

Dissecting the Anatomy of Data Sharing Clauses

The section of a privacy policy dedicated to “Data Sharing” or “Third-Party Disclosures” is its functional core. This is where the company outlines the conditions under which your information will leave their servers and be transferred to other entities. The language here is often intentionally opaque, using broad categories instead of specific names.

A primary red flag is the mention of sharing data with “affiliates,” “partners,” or “successors.” Without a clear definition or a public list of these entities, this clause grants the company sweeping permission to share your data with a potentially vast network of other companies.

Another critical area is the use of data for “research and analysis.” While this may sound innocuous or even beneficial, the term can conceal a variety of activities. Legitimate academic research is one possibility. A more common application is internal product development. The most concerning interpretation involves the sale of aggregated, “de-identified” datasets to commercial entities.

These datasets are highly valuable for market analysis, drug development, and actuarial modeling by insurance companies. The policy should specify the nature of the research and provide you with an option to opt out of having your data included in such commercial datasets. The absence of such control is a significant warning sign.

| Data Type | Governing Regulation | Typical Custodian | Key User Protections |
|---|---|---|---|
| Protected Health Information (PHI) | HIPAA | Hospitals, Clinics, Insurers | Strict limits on use and disclosure; right to access and amend records. |
| Consumer Health Information | FTC Act, State Laws (e.g., CCPA) | Wellness Apps, Fitness Trackers | Protections based on company’s privacy policy; FTC protection against deceptive practices. |
| Genetic Information | GINA, HIPAA (if clinical) | Genetic Testing Companies, Research Databases | Prohibits use in health insurance and employment decisions. |

How Is De-Identified Data a Privacy Concern?

Many privacy policies state that while your personal data is protected, “de-identified” or “aggregated” data may be shared freely. This presents a sophisticated risk. De-identification is the process of removing direct identifiers, such as your name, email address, and phone number.

The two primary methods under HIPAA are the Safe Harbor method, which removes 18 specific identifiers, and the Expert Determination method. The data collected by wellness apps, which is not governed by HIPAA, may undergo a less rigorous process. The critical issue is that this de-identified data can often be re-identified.

Data that has been stripped of direct identifiers can often be re-linked to an individual by combining it with other available datasets.

The potential for re-identification arises from the uniqueness of your data signature. A combination of seemingly generic data points, such as your zip code, date of birth, and a log of your symptoms, can create a profile that is unique to you.

When a data broker purchases an “anonymized” dataset from a wellness app, they can cross-reference it with other datasets they possess, such as public records or consumer purchasing histories. This process, known as the mosaic effect, can successfully re-associate the de-identified health data with your actual identity. The promise of anonymity in this context is a technical illusion, and policies that rely heavily on this promise as a safeguard are exposing you to this advanced risk.
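The cross-referencing step is simple to carry out, which is part of why it matters. The following sketch joins a toy “de-identified” export to a toy auxiliary dataset on zip code and date of birth. Every record, name, and field here is invented for illustration, and real linkage attacks use larger datasets and fuzzier matching.

```python
# Toy "de-identified" export: names removed, quasi-identifiers retained.
deidentified = [
    {"record_id": "a1", "zip": "02139", "dob": "1985-03-14", "symptom": "irregular cycles"},
    {"record_id": "b2", "zip": "94110", "dob": "1990-07-02", "symptom": "sleep disruption"},
    {"record_id": "c3", "zip": "94110", "dob": "1992-11-23", "symptom": "fatigue"},
]

# Toy auxiliary dataset of the kind a broker might already hold.
public_records = [
    {"name": "Alice Example", "zip": "02139", "dob": "1985-03-14"},
    {"name": "Bea Example", "zip": "94110", "dob": "1990-07-02"},
    {"name": "Dana Example", "zip": "10001", "dob": "1979-01-30"},
]


def link_records(health_rows, identity_rows, keys=("zip", "dob")):
    """Map record_id -> name wherever the quasi-identifiers match exactly one person."""
    index = {}
    for row in identity_rows:
        index.setdefault(tuple(row[k] for k in keys), []).append(row["name"])

    matches = {}
    for row in health_rows:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # an unambiguous match re-identifies the record
            matches[row["record_id"]] = names[0]
    return matches


reidentified = link_records(deidentified, public_records)
print(reidentified)  # {'a1': 'Alice Example', 'b2': 'Bea Example'}
```

Two of the three “anonymous” health records are re-attached to names without the export ever containing a name, which is the core of the concern.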

Data Retention and Your Right to Be Forgotten

A final, crucial element of any privacy policy is its statement on data retention. The document should clearly articulate how long your data is stored, both while you are an active user and after you delete your account. A significant red flag is a policy that states the company retains your data indefinitely or for a prolonged period after you have terminated your relationship with them. This practice extends your privacy risk long after you have stopped using the service.

Furthermore, the policy should provide a clear and accessible process for requesting the complete deletion of your data. This is often referred to as the “right to be forgotten,” a principle codified in regulations like the GDPR.

A policy that makes this process difficult, or that claims the right to retain perpetual backups or residual copies of your data, is not respecting your ownership of your biological information. Your control over your data should include the ability to revoke access and permanently erase your history from the company’s servers. Any ambiguity or obstruction in this area is a clear indication that the company views your data as their permanent asset, not as your personal property.

Academic

The analysis of privacy policies in wellness applications transcends a simple review of legal clauses; it requires a deep understanding of data science, endocrine physiology, and the economic incentives that drive the digital health market. At an academic level, the most profound risks are not explicitly stated in the policy but are enabled by its ambiguities.

These risks emerge from the application of advanced computational techniques to the high-dimensional biological data that users generate. The central threat is the creation of “inferred data”: new, highly sensitive information about a user’s health status that is algorithmically derived from the primary data they provide. This process transforms a wellness app from a simple tracking tool into a powerful, unregulated diagnostic and surveillance engine.

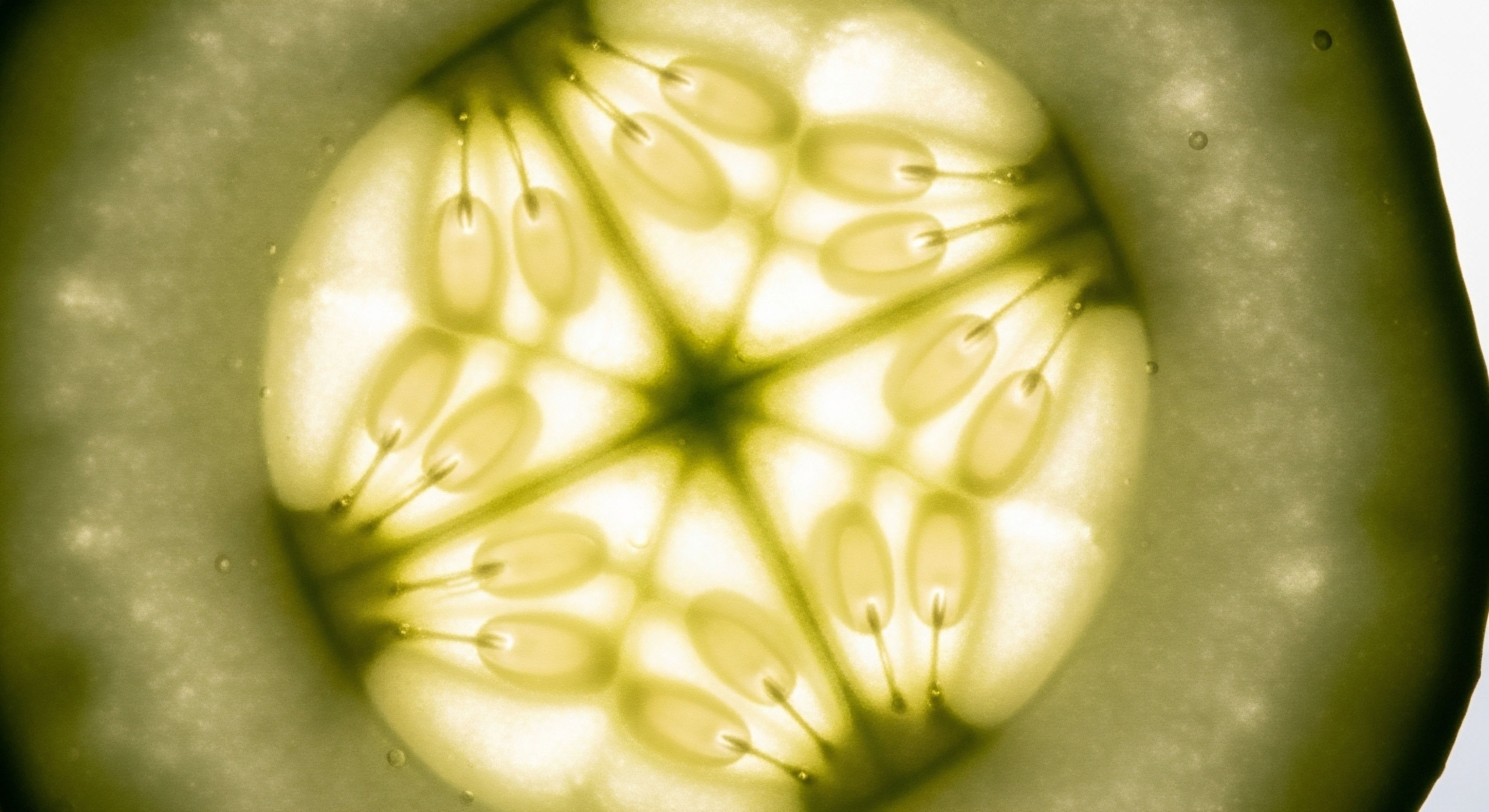

Consider the data stream from a comprehensive wellness app that tracks menstrual cycles, sleep patterns, heart rate variability (HRV), body temperature, mood, and dietary intake. Each of these inputs represents a noisy signal from a complex, interconnected physiological system. The menstrual cycle data reflects the state of the Hypothalamic-Pituitary-Gonadal (HPG) axis.

Sleep quality and HRV are indicators of autonomic nervous system function and the state of the Hypothalamic-Pituitary-Adrenal (HPA) axis. Dietary logs provide insight into metabolic function. A human clinician integrates these data points to form a holistic picture. A machine learning algorithm does the same, but with a scale, speed, and predictive power that is fundamentally different. The privacy policy is the legal gateway that permits this powerful analytical machinery to be pointed directly at your biology.

The Physiology of Inferred Data Generation

The generation of inferred data is a process of pattern recognition in time-series data. An algorithm trained on millions of user datasets learns to identify the subtle, multi-variable signatures that precede specific physiological events or correspond to chronic conditions.

For example, a consistent shortening of the luteal phase, combined with increased reports of sleep disturbance and elevated resting heart rate, could be identified by a model as a high-probability indicator for the onset of perimenopause. The user has not explicitly stated this; she has only logged her daily observations. The algorithm, however, has made a powerful inference, creating a new, unverified, and highly sensitive data point: “likely perimenopausal.”
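To make the mechanics concrete, here is a deliberately crude sketch of how such an inference could be produced. It uses a hand-written rule as a stand-in for a trained model, and the thresholds, field names, and sample logs are hypothetical. It has no clinical validity; the point is only that a label the user never entered can be derived from what she did enter.

```python
from statistics import mean


def trend(values):
    """Mean of the later half of the series minus the mean of the earlier half."""
    half = len(values) // 2
    return mean(values[half:]) - mean(values[:half])


def infer_flag(luteal_days, resting_hr, poor_sleep_nights):
    """Return an inferred label when all three signals move together.

    The cutoffs below are illustrative assumptions, not medical criteria.
    """
    signals = [
        trend(luteal_days) <= -1.0,        # luteal phase getting shorter
        trend(resting_hr) >= 2.0,          # resting heart rate rising
        trend(poor_sleep_nights) >= 2.0,   # more disturbed nights per cycle
    ]
    return "likely perimenopausal" if all(signals) else None


# Six cycles of invented user logs.
luteal = [13, 13, 12, 11, 11, 10]
rhr = [62, 63, 62, 65, 66, 66]
sleep = [2, 3, 2, 5, 6, 5]

label = infer_flag(luteal, rhr, sleep)
print(label)  # likely perimenopausal
```

The returned label is exactly the kind of unverified data point the policy’s “research” or “service improvement” language may silently cover.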

This same principle applies across a spectrum of endocrine and metabolic conditions. Irregular cycles combined with specific dietary patterns might be flagged as indicative of Polycystic Ovary Syndrome (PCOS). A decline in HRV, coupled with increased cravings for high-glycemic foods and reports of fatigue, could be algorithmically associated with insulin resistance or metabolic syndrome.

These are not simple one-to-one correlations. They are probabilistic models that generate new, unverified claims about an individual. The privacy policy, in its vague allowance for data to be used “for research” or “to improve our services,” provides the legal cover for the creation and subsequent monetization of this inferred data. The policy governs the inputs you provide, but it also silently governs the outputs the company’s algorithms create about you.

| Input Data Points | Physiological Axis Implicated | Potential Inferred Condition | Associated Privacy Risk |
|---|---|---|---|
| Cycle length variability, sleep disruption, mood logs, temperature shifts. | Hypothalamic-Pituitary-Gonadal (HPG) Axis | Perimenopause, Anovulation, Luteal Phase Defect | Targeted advertising for hormonal therapies; potential for age-related discrimination. |
| Heart Rate Variability (HRV), sleep latency, reported stress levels, caffeine intake. | Hypothalamic-Pituitary-Adrenal (HPA) Axis | Chronic Stress, Adrenal Maladaptation, Burnout | Targeted ads for stress-reduction products; data could be of interest to employers or insurers. |
| Dietary logs (macros), exercise logs, reported energy levels, weight changes. | Metabolic / Endocrine System | Insulin Resistance, Metabolic Syndrome, Thyroid Dysfunction | Insurance premium adjustments; discriminatory marketing of foods and health plans. |

The Failure of De-Identification at a Physiological Level

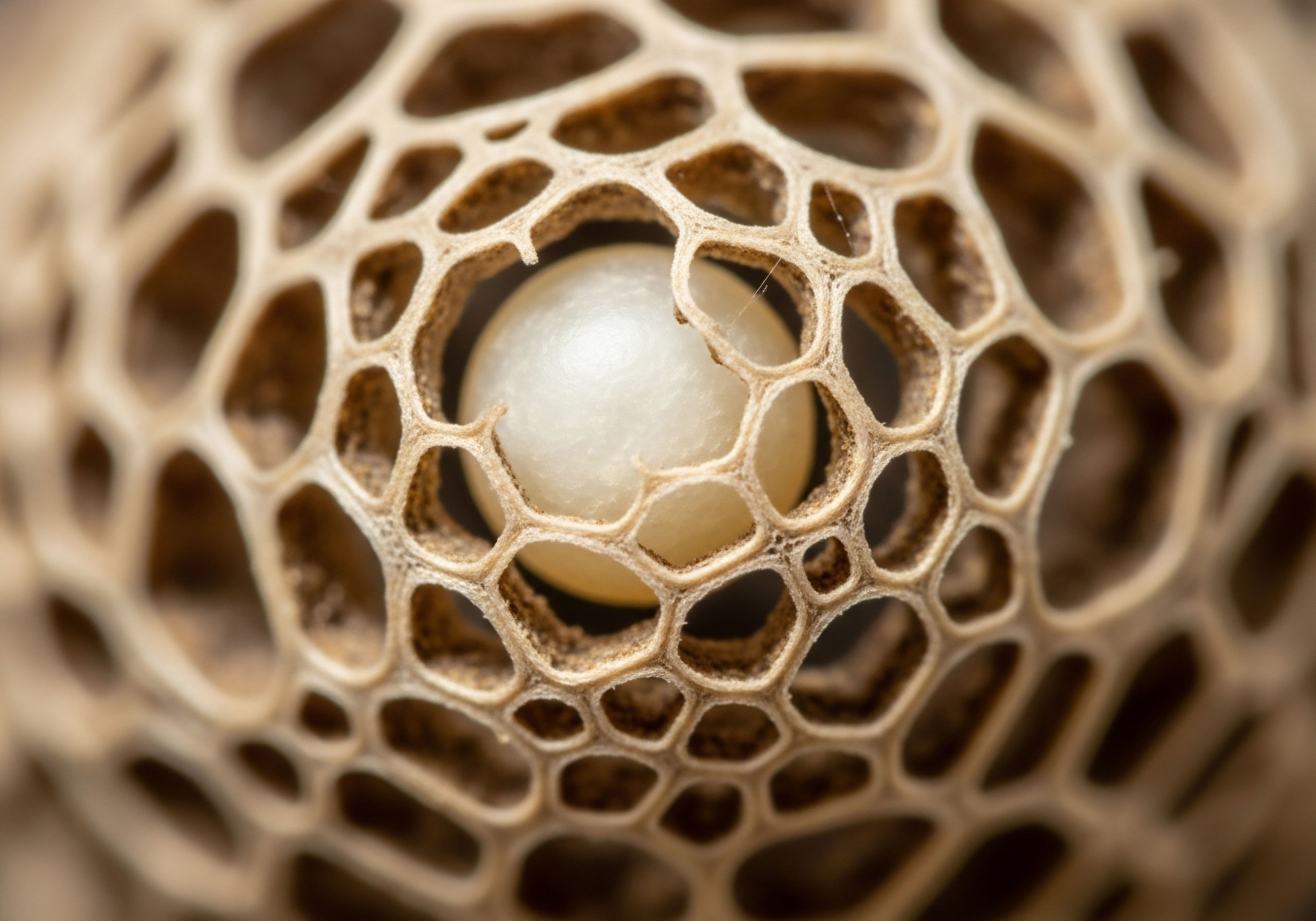

The concept of de-identification, often presented as a robust privacy safeguard, fails completely when confronted with high-dimensional biological data. The uniqueness of an individual’s physiology, when tracked over time, becomes a “biometric fingerprint.” Traditional de-identification focuses on removing demographic identifiers. Advanced re-identification, however, can use the patterns within the physiological data itself.

A sufficiently long and detailed record of a person’s menstrual cycle, with its particular sequence of follicular and luteal phase lengths, can be distinctive enough to single out one individual in a dataset, much as a fingerprint does. When this “temporal biomarker” is combined with location data or other metadata, the probability of re-identification can become very high.
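A small sketch shows why a sequence of cycle lengths can act as an identifier on its own. It searches for an “anonymous” sequence inside the histories of known users; the user labels, the histories, and the `min_overlap` cutoff are all invented for illustration, and real matching would need to tolerate missing or noisy entries.

```python
def find_owner(anonymous_cycles, known_users, min_overlap=6):
    """Return the users whose cycle-length history contains the anonymous sequence."""
    n = len(anonymous_cycles)
    if n < min_overlap:
        return []  # too short to treat as distinctive in this toy model

    owners = []
    for name, history in known_users.items():
        # Slide a window of length n across the user's history.
        for start in range(len(history) - n + 1):
            if history[start:start + n] == anonymous_cycles:
                owners.append(name)
                break
    return owners


# Invented cycle-length histories (days) for three identified users.
known_users = {
    "user_a": [28, 29, 27, 31, 28, 30, 26, 29],
    "user_b": [28, 28, 29, 28, 27, 28, 28, 29],
    "user_c": [30, 31, 29, 33, 30, 32, 31, 30],
}

# A record from a dataset with every demographic identifier removed.
anonymous = [27, 31, 28, 30, 26, 29]
owners = find_owner(anonymous, known_users)
print(owners)  # ['user_a']
```

No name, email, or zip code is involved in the match. The physiological pattern itself does the identifying.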

This means that even when a company shares a “fully anonymized” dataset with a third party, they may be transferring a dataset that is trivially re-identifiable by a motivated actor with access to correlative data. The promise in the privacy policy that “your personal information will not be shared” is technically true, as the name and email were removed.

The functional reality is that your entire health history, now linked to a new identifier, has been transferred. The policy’s language is constructed to be legally defensible in a world that predates this type of data science. It fails to account for the fact that in a biological context, the data itself is the identifier.

Your unique physiological patterns, when tracked over time, can become a form of biometric identifier that renders traditional de-identification methods obsolete.

The downstream consequences of this reality are significant. These re-identified or easily identifiable datasets can be used to build “shadow health profiles” on individuals, held by data brokers. These profiles can be sold to insurance companies to inform risk modeling, to employers for hiring decisions, or to financial institutions to assess creditworthiness.

A woman whose app data has been used to infer a high-risk pregnancy could, in a dystopian but technically feasible future, be targeted with specific advertising, see her insurance premiums rise, or even face employment discrimination. The privacy policy’s failure to address the potential for re-identification through biological pattern analysis is its most profound and academically significant red flag. It demonstrates a fundamental disconnect between the legal language of privacy and the technical reality of modern data science.

Reflection

You began this journey of wellness by listening to your body. You sought tools to help you translate its whispers, to find patterns in the noise, and to reclaim a sense of agency over your own physiological systems. The knowledge of how your digital biological narrative is treated is now a part of that process.

It adds a new dimension to your self-advocacy. This understanding is not a cause for fear, but a call for discernment. It transforms your relationship with technology from one of passive consumption to one of active, informed partnership. As you move forward, consider how each digital tool you engage with either honors or exploits the trust you place in it. Your health journey is your own; the data it generates should be as well.