Fundamentals

Your participation in a wellness program generates a stream of deeply personal information. You trust that when this data is “anonymized,” your identity is safely severed from the numbers on a screen. This process is presented as a straightforward act of removing your name, address, and other direct identifiers, creating a dataset that can be used for research or program improvement without compromising your privacy.

The core idea is that by stripping away these explicit labels, your personal health information dissolves into a sea of statistics, rendered anonymous and untraceable. This is the foundational promise upon which the exchange of your data for personalized insights is built.

The lived experience of engaging with a health program, tracking metrics, and receiving feedback is immediate and personal. The subsequent transformation of this data into an anonymous form is, by contrast, an abstract concept. It relies on a belief in technical safeguards that are rarely seen or understood by the participant.

You provide information that feels uniquely yours (sleep patterns, heart rate variability, daily steps, hormonal panel results), and in return, you are assured of its depersonalization. The integrity of this process is the bedrock of the trust between you and the program provider, allowing for the aggregation of data that fuels broader insights while aiming to protect the individual sources from which it came.

The Nature of Digital Health Information

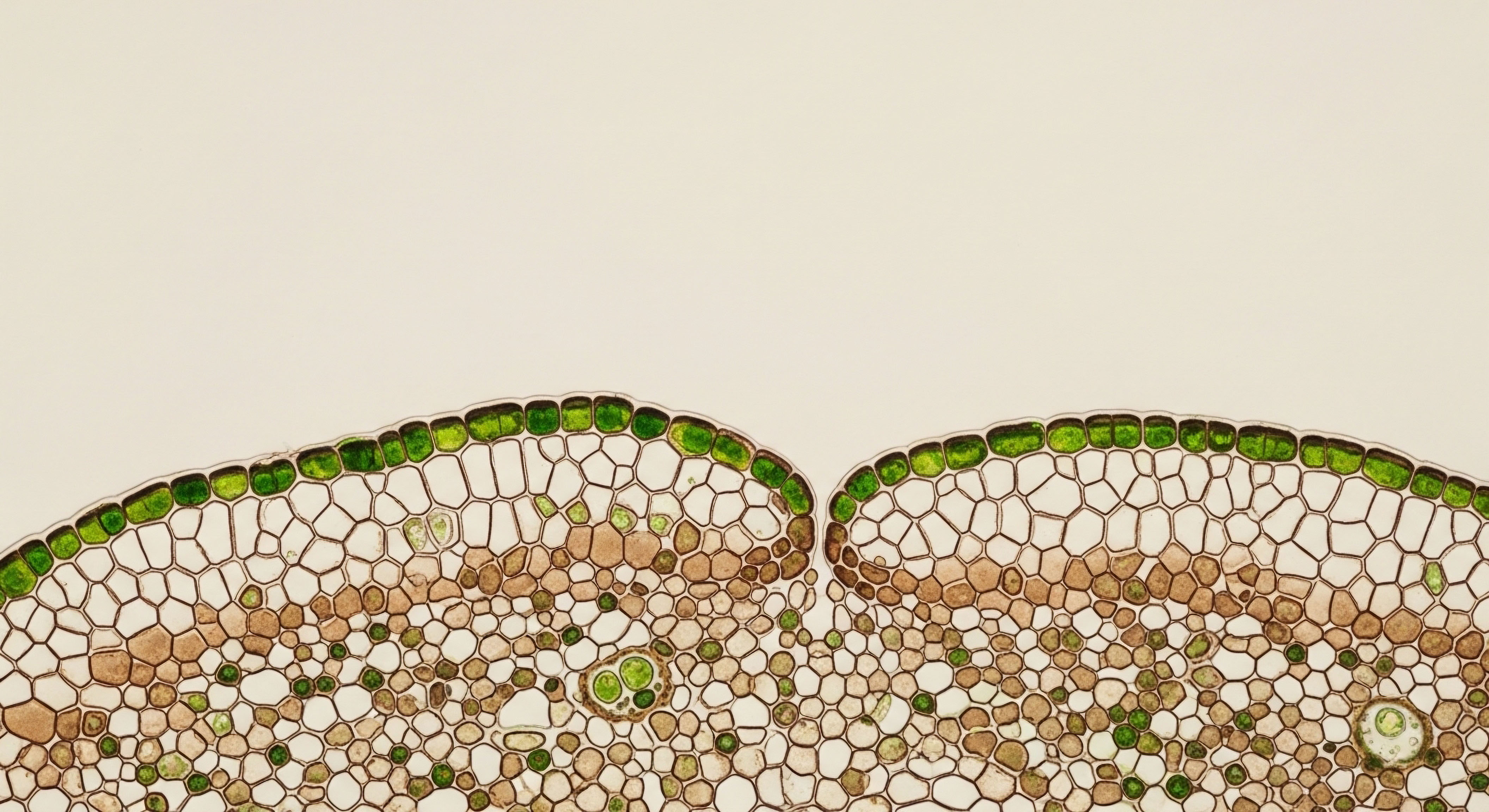

Every interaction with a digital wellness platform creates a data point. These are not just isolated numbers; they are digital footprints that, when viewed together, form a detailed map of your physiological and behavioral patterns. This dataset includes everything from the time you wake up to the fluctuations in your metabolic markers.

The richness of this information is what makes it valuable both for you, in receiving personalized guidance, and for the wellness program, in refining its algorithms and understanding population health trends. The challenge arises because the very detail that makes the data useful also makes it unique. Your specific combination of habits, biomarkers, and lifestyle choices creates a signature that can be distinct even without your name attached.

The uniqueness of your health data is a double-edged sword, providing personalized value while creating potential pathways to re-identification.

The process of anonymization attempts to obscure this signature. It involves techniques that go beyond simply deleting your name and Social Security number. Data aggregation, for example, combines your information with that of others to report on group averages. Data masking replaces sensitive information with realistic but false data.

These methods are designed to break the direct link between the dataset and your identity, allowing for its use in analytics and research. The effectiveness of these techniques is a central question in the ongoing dialogue about health data privacy. It is a constant calibration between maintaining the utility of the data for scientific purposes and upholding the privacy of the individuals who contributed it.
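To make aggregation and masking concrete, here is a minimal Python sketch. The records, the field names (`age_band`, `resting_hr`), the decoy-name pool, and the helpers `aggregate` and `mask` are all hypothetical illustrations, not any wellness program's actual pipeline.

```python
import random
import statistics
from collections import defaultdict

# Hypothetical participant records; all names and numbers are invented.
records = [
    {"name": "Ana Ruiz", "ssn": "123-45-6789", "age_band": "30-39", "resting_hr": 62},
    {"name": "Ben Okafor", "ssn": "987-65-4321", "age_band": "30-39", "resting_hr": 70},
    {"name": "Cho Min", "ssn": "555-11-2222", "age_band": "40-49", "resting_hr": 66},
    {"name": "Dara Levi", "ssn": "444-33-1111", "age_band": "40-49", "resting_hr": 74},
]

# Hypothetical pool of realistic-looking decoy names used for masking.
DECOY_NAMES = ["Jordan Lee", "Morgan Diaz", "Casey Novak", "Riley Park", "Avery Shah"]


def aggregate(rows, group_key, value_key):
    """Aggregation: publish only per-group means, never individual values."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    return {group: statistics.fmean(values) for group, values in groups.items()}


def mask(rows, rng):
    """Masking: replace direct identifiers with realistic but false values."""
    masked = []
    for row in rows:
        fake_ssn = f"{rng.randint(100, 899)}-{rng.randint(10, 99)}-{rng.randint(1000, 9999)}"
        masked.append({**row, "name": rng.choice(DECOY_NAMES), "ssn": fake_ssn})
    return masked


print(aggregate(records, "age_band", "resting_hr"))  # {'30-39': 66.0, '40-49': 70.0}
for row in mask(records, random.Random(42)):
    print(row)
```

Note that masking alone leaves `age_band` and `resting_hr` untouched, so the combination of remaining attributes can still act as the kind of signature described above.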

What Makes Health Data so Sensitive?

Health data is a uniquely sensitive category of personal information. It contains details about your physical and mental well-being, genetic predispositions, and lifestyle choices that are intensely private. The disclosure of this information can have significant personal and social consequences. For this reason, it is afforded special protection under regulations designed to govern its use and sharing.

The goal of anonymization is to allow for the beneficial use of this data in advancing medical knowledge and improving health outcomes while mitigating the risks of exposing such personal details. This balance is delicate and requires a deep understanding of both the technical methods of de-identification and the ethical principles that guide the handling of personal health information.

The journey of your data from a personal input to an anonymized statistic is a complex one. It begins with your engagement with the wellness program, generating a rich stream of personal health information. This data is then subjected to a series of technical processes designed to strip it of direct identifiers and obscure its unique patterns.

The resulting dataset, now considered “anonymous,” can be used for a variety of purposes, from internal program development to external research collaborations. Understanding this lifecycle is the first step in appreciating both the potential benefits of sharing health data and the inherent challenges in ensuring its complete and permanent anonymization.

Intermediate

The regulatory landscape governing health data in the United States is anchored by the Health Insurance Portability and Accountability Act (HIPAA). Its Privacy Rule establishes the standards for protecting individuals’ medical records and other identifiable health information.

Central to this is the concept of “de-identification,” a process by which personal identifiers are removed from health information, rendering it no longer Protected Health Information (PHI) and thus not subject to the Privacy Rule’s restrictions. HIPAA outlines two specific pathways to achieve this state of de-identification, each with its own methodology and level of rigor.

These methods provide a legal and technical framework that covered entities, including some wellness programs, must follow when they want health data treated as de-identified so it can be used for secondary purposes like research or analytics.

Understanding these two methods is essential to grasping the mechanics of data anonymization in a clinical or wellness context. They represent a fork in the road for any organization handling sensitive health data. One path is prescriptive and checklist-driven, offering a clear set of instructions.

The other is principles-based, relying on statistical expertise to assess and mitigate the risk of re-identification. The choice between these methods often depends on the nature of the data itself and its intended use, balancing the need for data utility with the obligation to protect individual privacy.

The Two Pillars of HIPAA De-Identification

The HIPAA Privacy Rule provides two distinct methods for the de-identification of data: the Safe Harbor method and the Expert Determination method. Each approach has a different mechanism for removing the link between the data and the individual, and the choice of method has significant implications for the resulting dataset's characteristics and potential uses.

The Safe Harbor Method: A Prescriptive Approach

The Safe Harbor method is a highly structured approach that functions like a checklist. It requires the removal of 18 specific types of identifiers from the data. If an organization has removed all 18 identifiers and has no actual knowledge that the remaining information could be used, alone or in combination with other information, to identify the individual, the resulting data is considered de-identified. This method is popular due to its clarity and ease of implementation. It does not require statistical expertise to apply; it is a matter of systematically scrubbing the dataset of the specified fields.

The Safe Harbor method achieves de-identification by removing a specific list of 18 personal identifiers from a dataset.

However, the prescriptive nature of the Safe Harbor method can also be a limitation. The removal of certain identifiers, such as dates of service or specific geographic locations, can significantly reduce the analytical value of the data. For research that requires temporal analysis or fine-grained geographic information, the Safe Harbor method may render the dataset unusable. It is a trade-off between the certainty of compliance and the utility of the information.

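- Direct Identifiers: Names, telephone numbers, and Social Security numbers are among the most obvious identifiers removed.

- Geographic Data: All geographic subdivisions smaller than a state, including street address, city, county, and ZIP code, are removed. The one exception is that the first three digits of a ZIP code may be kept if the area they cover contains more than 20,000 people.

- Dates: All elements of dates (except year) directly related to an individual, including birth date, admission date, and discharge date, must be removed. Ages over 89, and any date elements (including year) that would reveal such an age, must be aggregated into a single category of 90 or older.

- Biometric Identifiers: Finger, retinal, and voice prints are also on the list of identifiers to be removed.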
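A Safe Harbor-style scrub of the kind listed above can be sketched in a few lines. This is a simplified illustration, not a compliance tool: it handles only a handful of the 18 identifier types, the field names and `scrub_record` helper are hypothetical, and the set of restricted three-digit ZIP prefixes is a placeholder that would need to come from current Census data. It also skips the rule that date elements revealing an age over 89 must be aggregated.

```python
from datetime import date

# Only a few of the 18 Safe Harbor identifier types are handled in this sketch.
DIRECT_IDENTIFIER_FIELDS = {"name", "phone", "ssn", "email", "street_address", "city", "county"}

# Placeholder values for illustration: the real list of three-digit ZIP prefixes
# covering 20,000 or fewer people must be derived from current Census data.
RESTRICTED_ZIP3 = {"036", "692"}


def scrub_record(record):
    """Return a copy of `record` with selected Safe Harbor identifiers removed or coarsened."""
    scrubbed = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIER_FIELDS:
            continue  # drop direct identifiers entirely
        if field == "zip":
            zip3 = str(value)[:3]
            scrubbed["zip3"] = "000" if zip3 in RESTRICTED_ZIP3 else zip3
        elif isinstance(value, date):
            scrubbed[f"{field}_year"] = value.year  # keep the year only
        elif field == "age":
            scrubbed["age"] = "90+" if value > 89 else value
        else:
            scrubbed[field] = value  # e.g. step counts and biomarkers pass through
    return scrubbed


example = {
    "name": "Ana Ruiz",
    "ssn": "123-45-6789",
    "zip": "02138",
    "birth_date": date(1984, 5, 17),
    "age": 41,
    "daily_steps": 8200,
}
print(scrub_record(example))
# {'zip3': '021', 'birth_date_year': 1984, 'age': 41, 'daily_steps': 8200}
```

The trade-off described above is visible here: the exact birth date and five-digit ZIP code are gone, which protects the participant but also rules out analyses that depend on them.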

The Expert Determination Method: A Risk-Based Approach

The Expert Determination method offers a more flexible and nuanced alternative to Safe Harbor. Under this method, a person with appropriate knowledge of and experience with generally accepted statistical and scientific principles and methods for rendering information not individually identifiable makes a determination that the risk of re-identification is “very small.” This expert applies statistical or scientific principles to the dataset and documents the methods and results of their analysis.

The definition of “very small” is not explicitly quantified in the regulation, allowing the expert to consider the context, including the intended recipient of the data and the environment in which it will be used.

This approach allows for the retention of some data elements that would otherwise be removed under Safe Harbor, such as specific dates or more detailed geographic information, provided the expert concludes that the overall risk of re-identification remains very small. This can make the resulting dataset far more valuable for research and analysis. The trade-off is the need for specialized expertise and the assumption of a greater responsibility for assessing and managing the residual risk of re-identification.
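An expert has latitude in how risk is measured. One commonly used family of metrics groups records into equivalence classes that share the same quasi-identifier values and treats each record's risk as one divided by the size of its class. The sketch below assumes that metric, hypothetical field names, and a hypothetical threshold of 0.1; HIPAA prescribes neither the metric nor a number.

```python
from collections import Counter

# Hypothetical acceptance threshold; HIPAA does not define a number for "very small".
HYPOTHETICAL_MAX_RISK = 0.1


def reidentification_risk(rows, quasi_identifiers):
    """Estimate each record's risk as 1 / (size of its equivalence class)."""
    keys = [tuple(row[q] for q in quasi_identifiers) for row in rows]
    class_sizes = Counter(keys)
    risks = [1 / class_sizes[key] for key in keys]
    return {
        "max_risk": max(risks),
        "avg_risk": sum(risks) / len(risks),
        "unique_records": sum(1 for key in keys if class_sizes[key] == 1),
    }


# Hypothetical extract that kept birth year and a three-digit ZIP prefix.
rows = [
    {"zip3": "021", "birth_year": 1985, "sex": "F", "hba1c": 5.4},
    {"zip3": "021", "birth_year": 1985, "sex": "F", "hba1c": 6.1},
    {"zip3": "021", "birth_year": 1985, "sex": "M", "hba1c": 5.9},
    {"zip3": "100", "birth_year": 1972, "sex": "F", "hba1c": 5.2},
]
report = reidentification_risk(rows, ("zip3", "birth_year", "sex"))
print(report)  # {'max_risk': 1.0, 'avg_risk': 0.75, 'unique_records': 2}
print("acceptable" if report["max_risk"] <= HYPOTHETICAL_MAX_RISK
      else "needs more generalization or suppression")
```

In practice an expert would also weigh who receives the data and what outside information that recipient could plausibly access, which a single number like this does not capture.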

| Feature | Safe Harbor Method | Expert Determination Method |
|---|---|---|
| Methodology | Removal of 18 specific identifiers | Statistical analysis of re-identification risk |
| Flexibility | Low; prescriptive and rigid | High; allows for context-specific risk assessment |
| Data Utility | Can be limited due to removal of key data points | Generally higher, as more data can be retained |
| Required Expertise | None; follows a checklist | Requires a qualified expert in statistical analysis |

Academic

The traditional models of data de-identification, codified in regulations like HIPAA, were conceived in an era of siloed datasets and limited computational power. Today, the digital landscape is fundamentally different.

The proliferation of publicly available information, from social media profiles to voter registration lists, combined with the advent of sophisticated machine learning algorithms, has created an environment where the risk of re-identification is not static but dynamic and escalating. A dataset that remains truly and permanently anonymous is becoming a statistical improbability.

This reality necessitates a shift in our approach to data privacy, moving from a reliance on simple data scrubbing to the adoption of more mathematically rigorous and provable privacy frameworks.

This new paradigm is built on the understanding that privacy is not an absolute state but a quantifiable measure of risk. The central challenge is to enable the use of valuable health data for research and innovation while providing strong, mathematically grounded guarantees of privacy to the individuals who contribute that data.

This has led to the development of advanced techniques like differential privacy, which represents a fundamental departure from the traditional anonymization playbook. It is a move from a deterministic to a probabilistic approach to privacy, one that acknowledges the inherent risks of data linkage and seeks to manage them with mathematical precision.

Beyond Anonymization: The Rise of Differential Privacy

Differential privacy is a mathematical framework that aims to provide a strong guarantee of privacy by adding a carefully calibrated amount of random "noise" to the results of analyses run on a dataset, or in some deployments to each person's data before it is collected (Dwork & Roth, 2014). The core idea is that the output of any analysis should be statistically almost indistinguishable whether or not any single individual's data is included in the dataset.

This provides a powerful form of plausible deniability; an individual’s presence in the dataset cannot be confidently inferred from the results of any query. This is a much stronger guarantee than that offered by traditional anonymization techniques, which are vulnerable to linkage attacks where an “anonymized” dataset is cross-referenced with other available information to re-identify individuals.
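A linkage attack needs nothing more sophisticated than a database join. In this sketch, both tables, every name in them, and the `link` helper are invented; the quasi-identifiers (ZIP code, birth date, sex) follow the kind of linkage between health records and a voter list that Sweeney (2002) describes.

```python
# Hypothetical "anonymized" wellness extract: names removed, quasi-identifiers kept.
health_records = [
    {"zip": "02138", "birth_date": "1961-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_date": "1975-02-14", "sex": "M", "diagnosis": "type 2 diabetes"},
    {"zip": "02140", "birth_date": "1990-11-03", "sex": "F", "diagnosis": "asthma"},
]

# Hypothetical public roster (for example, a voter list) that includes names.
public_roster = [
    {"name": "Jane Roe", "zip": "02138", "birth_date": "1961-07-31", "sex": "F"},
    {"name": "John Doe", "zip": "02139", "birth_date": "1975-02-14", "sex": "M"},
    {"name": "Mary Major", "zip": "02139", "birth_date": "1975-02-14", "sex": "F"},
    {"name": "Alex Poe", "zip": "02140", "birth_date": "1990-11-03", "sex": "F"},
    {"name": "Sam Poe", "zip": "02140", "birth_date": "1990-11-03", "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def link(anonymized, public, quasi_identifiers):
    """Join the two tables on quasi-identifiers and keep only unambiguous matches."""
    index = {}
    for person in public:
        key = tuple(person[q] for q in quasi_identifiers)
        index.setdefault(key, []).append(person["name"])
    reidentified = []
    for record in anonymized:
        candidates = index.get(tuple(record[q] for q in quasi_identifiers), [])
        if len(candidates) == 1:  # exactly one match means the record is re-identified
            reidentified.append((candidates[0], record["diagnosis"]))
    return reidentified


print(link(health_records, public_roster, QUASI_IDENTIFIERS))
# [('Jane Roe', 'hypertension'), ('John Doe', 'type 2 diabetes')]
```

The asthma record survives only because two people on the roster share its quasi-identifiers; adding one more public dataset could be enough to break that tie.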

Differential privacy protects individual data by adding mathematical noise, ensuring that query results are not significantly altered by the inclusion or exclusion of any single person’s information.
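Formally, a randomized mechanism M is ε-differentially private if, for any two datasets D and D′ that differ in one person's data and any set of outputs S, Pr[M(D) ∈ S] ≤ e^ε · Pr[M(D′) ∈ S] (Dwork & Roth, 2014). A standard way to achieve this for a counting query is the Laplace mechanism: adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/ε suffices. The sketch below uses only Python's standard library; the function names and cohort are hypothetical, and it is not hardened for production use, since naive floating-point noise samplers have known weaknesses.

```python
import random


def laplace_noise(scale, rng):
    """Draw from Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Laplace mechanism for a counting query (sensitivity 1), giving epsilon-DP."""
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical cohort: 100 participants, 30 of whom reported poor sleep.
cohort = [{"poor_sleep": i < 30} for i in range(100)]
rng = random.Random(7)
for epsilon in (0.1, 1.0, 5.0):
    answer = private_count(cohort, lambda r: r["poor_sleep"], epsilon, rng)
    print(f"epsilon={epsilon}: noisy count = {answer:.1f}")  # smaller epsilon, more noise
```

Smaller ε means a larger noise scale (1/ε): stronger privacy is paid for with less accurate answers, which is the tension between privacy and utility the text describes.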

The implementation of differential privacy involves a "privacy budget," usually denoted by the Greek letter epsilon (ε), which caps the total privacy loss allowed across all queries run on the dataset. Each query "spends" a portion of the privacy budget, and once the budget is exhausted, no more queries can be answered.

This mechanism provides a quantifiable and enforceable limit on the potential for privacy loss. While differential privacy offers a robust solution to the problem of re-identification, it is not without its trade-offs. The addition of noise can impact the accuracy of the data, and there is a constant tension between the level of privacy provided and the utility of the dataset for analysis.
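The budget mechanics can be sketched with the simplest accounting rule, basic sequential composition, under which the ε values spent by individual queries add up. `PrivacyBudget` and `PrivateCounter` are hypothetical names for this illustration; real systems often use tighter composition theorems, and the counter reuses the Laplace mechanism for counting queries.

```python
import random


class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition (epsilons add up)."""

    def __init__(self, total_epsilon):
        self.remaining = total_epsilon

    def spend(self, epsilon):
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if epsilon > self.remaining + 1e-12:  # small tolerance for float rounding
            raise RuntimeError("privacy budget exhausted; query refused")
        self.remaining -= epsilon


class PrivateCounter:
    """Answers noisy counting queries until its privacy budget runs out."""

    def __init__(self, records, total_epsilon, seed=None):
        self._records = list(records)
        self._budget = PrivacyBudget(total_epsilon)
        self._rng = random.Random(seed)

    def count(self, predicate, epsilon):
        self._budget.spend(epsilon)  # charge the budget before touching the data
        true_count = sum(1 for record in self._records if predicate(record))
        scale = 1 / epsilon  # Laplace mechanism, sensitivity 1
        return true_count + self._rng.expovariate(1 / scale) - self._rng.expovariate(1 / scale)


counter = PrivateCounter(range(1000), total_epsilon=1.0, seed=3)
print(counter.count(lambda x: x % 2 == 0, epsilon=0.5))
print(counter.count(lambda x: x < 100, epsilon=0.5))
try:
    counter.count(lambda x: x > 500, epsilon=0.1)
except RuntimeError as err:
    print(err)  # privacy budget exhausted; query refused
```

Charging the budget before reading the data matters: a refused query must reveal nothing, not even a noisy answer.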

The Special Case of Genomic Data

Genomic data represents a unique and formidable challenge to data privacy. Your genome is, by its very nature, the ultimate identifier. It is partly shared with your biological relatives, remains stable over your lifetime, and contains a vast amount of information about your health, ancestry, and physical traits.

Unlike other forms of health data, it cannot be easily or meaningfully “anonymized” in the traditional sense. Even a small subset of your genetic markers can be used to uniquely identify you. The increasing popularity of direct-to-consumer genetic testing and the growth of public genealogical databases have created a vast, interconnected network of genetic information that can be used to re-identify individuals in supposedly anonymous research datasets.

Research has demonstrated that it is possible to identify an individual within a "de-identified" genomic dataset by cross-referencing it with public genetic genealogy databases (Gymrek et al., 2013). By finding relatives who have publicly shared their genetic information, it is possible to triangulate the identity of an anonymous research participant.

This has profound implications for the future of genomic research and the promises of privacy made to participants. It suggests that for genomic data, the traditional model of de-identification is insufficient. New models of data governance, such as controlled-access data enclaves and advanced cryptographic techniques, will be necessary to enable the continued use of this vital data for scientific discovery while respecting the privacy of the individuals from whom it originates.
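The triangulation step described above can be caricatured in code. This toy follows the general shape of the surname-inference route reported by Gymrek et al. (2013): compare Y-chromosome short tandem repeat (Y-STR) markers, which pass down the paternal line much as surnames often do, against a genealogy database, then narrow the candidates with metadata such as birth year and state. The marker names are real Y-STR loci, but every repeat count, surname, record, and helper function below is invented, and real matching is considerably more statistical.

```python
# Hypothetical Y-STR profile from an "anonymous" genome: repeat counts per locus.
anonymous_profile = {"DYS19": 14, "DYS390": 24, "DYS391": 11, "DYS392": 13, "DYS393": 13}

# Hypothetical genealogy database linking Y-STR profiles to surnames.
genealogy_db = [
    {"surname": "Alder", "markers": {"DYS19": 14, "DYS390": 24, "DYS391": 11, "DYS392": 13, "DYS393": 13}},
    {"surname": "Birch", "markers": {"DYS19": 15, "DYS390": 23, "DYS391": 10, "DYS392": 11, "DYS393": 13}},
    {"surname": "Cedar", "markers": {"DYS19": 14, "DYS390": 24, "DYS391": 10, "DYS392": 13, "DYS393": 13}},
]

# Hypothetical public records with surname, birth year, and state.
public_records = [
    {"name": "Sam Alder", "surname": "Alder", "birth_year": 1970, "state": "OH"},
    {"name": "Lee Alder", "surname": "Alder", "birth_year": 1988, "state": "OH"},
    {"name": "Kim Alder", "surname": "Alder", "birth_year": 1970, "state": "TX"},
]


def infer_surname(profile, database, max_mismatches=1):
    """Return the surname whose markers match the profile with the fewest mismatches."""
    candidates = []
    for entry in database:
        markers = entry["markers"]
        if not profile.keys() <= markers.keys():
            continue  # skip entries not typed at every locus in the profile
        mismatches = sum(1 for locus in profile if profile[locus] != markers[locus])
        if mismatches <= max_mismatches:
            candidates.append((mismatches, entry["surname"]))
    return min(candidates)[1] if candidates else None


def narrow(surname, birth_year, state, records):
    """Use study metadata (birth year, state) to shrink the list of people with that surname."""
    return [r["name"] for r in records
            if r["surname"] == surname and r["birth_year"] == birth_year and r["state"] == state]


surname = infer_surname(anonymous_profile, genealogy_db)
print(surname)  # Alder
print(narrow(surname, 1970, "OH", public_records))  # ['Sam Alder']
```

No individual datum here is a name, yet a genetic profile plus two ordinary pieces of metadata is enough to point at one person.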

| Concept | Description | Application in Health Data |
|---|---|---|
| k-Anonymity | A property of a dataset in which each record is indistinguishable from at least k-1 other records on its quasi-identifiers (attributes such as ZIP code, birth date, and sex that can be linked to outside data). | Used to reduce the risk of re-identification by ensuring that individuals are part of a larger group. |
| Linkage Attack | A method of re-identification that involves combining an anonymized dataset with other available information to uncover individual identities. | A primary threat to the privacy of health data, especially with the growth of public datasets. |
| Differential Privacy | A mathematical framework that provides privacy guarantees by adding calibrated random noise to the results of analyses (or to the data itself). | Offers a more robust alternative to traditional anonymization, especially for large and complex datasets. |
| Genomic Privacy | The set of challenges and techniques related to protecting the privacy of an individual's genetic information. | A rapidly evolving field that requires new approaches beyond traditional de-identification. |
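To illustrate the k-anonymity row, the sketch below measures k as the size of the smallest group of records sharing the same quasi-identifier values, then shows how generalizing age into ten-year bands and truncating ZIP codes raises it. The records, field names, and generalization rules are hypothetical choices made for illustration.

```python
from collections import Counter

# Hypothetical records; "steps" is the analytic value, the rest are quasi-identifiers.
raw = [
    {"age": 34, "zip": "02138", "sex": "F", "steps": 9100},
    {"age": 37, "zip": "02139", "sex": "F", "steps": 7200},
    {"age": 45, "zip": "02141", "sex": "M", "steps": 5400},
    {"age": 48, "zip": "02142", "sex": "M", "steps": 11000},
]


def k_of(rows, quasi_identifiers):
    """k is the size of the smallest group of rows sharing identical quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())


def generalize(row):
    """Hypothetical generalization: ten-year age bands and three-digit ZIP prefixes."""
    out = {key: value for key, value in row.items() if key not in ("age", "zip")}
    decade = (row["age"] // 10) * 10
    out["age_band"] = f"{decade}-{decade + 9}"
    out["zip3"] = row["zip"][:3] + "**"
    return out


print(k_of(raw, ("age", "zip", "sex")))  # 1: every record is unique
generalized = [generalize(row) for row in raw]
print(k_of(generalized, ("age_band", "zip3", "sex")))  # 2
```

Even when a target k is met, everyone in a group may share the same sensitive value, in which case membership in the group reveals it; this is one reason the table points to differential privacy as a more robust alternative.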

References

- Dwork, C., & Roth, A. (2014). The algorithmic foundations of differential privacy. Foundations and Trends in Theoretical Computer Science, 9(3–4), 211–407.

- El Emam, K., & Dankar, F. K. (2008). Protecting privacy using k-anonymity. Journal of the American Medical Informatics Association, 15(5), 627–637.

- Erlich, Y., & Narayanan, A. (2014). Routes for breaching and protecting genetic privacy. Nature Reviews Genetics, 15(6), 409–421.

- Gymrek, M., McGuire, A. L., Golan, D., Halperin, E., & Erlich, Y. (2013). Identifying personal genomes by surname inference. Science, 339(6117), 321–324.

- Homer, N., Szelinger, S., Redman, M., Duggan, D., Tembe, W., Muehling, J., & Craig, D. W. (2008). Resolving individuals contributing trace amounts of DNA to highly complex mixtures using high-density SNP genotyping microarrays. PLoS Genetics, 4(8), e1000167.

- Malin, B., & Sweeney, L. (2004). How to re-identify survey respondents with public records. Proceedings of the 2004 ACM Symposium on Applied Computing, 1277–1281.

- Ohm, P. (2010). Broken promises of privacy: Responding to the surprising failure of anonymization. UCLA Law Review, 57, 1701.

- Shringarpure, S. S., & Bustamante, C. D. (2015). Privacy and security risks in genetic research. Science, 349(6255), 1456–1457.

- Sweeney, L. (2002). k-anonymity: A model for protecting privacy. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, 10(5), 557–570.

- U.S. Department of Health & Human Services. (2012). Guidance Regarding Methods for De-identification of Protected Health Information in Accordance with the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule.

Reflection

The information presented here provides a map of the landscape of data privacy in the context of your health journey. It details the methods, regulations, and emerging challenges that define the effort to protect your personal information. This knowledge is a tool, a lens through which you can view your participation in any wellness program with greater clarity.

It shifts the dynamic from one of passive trust to one of informed engagement. The path forward in personal health is one of partnership, not just with clinicians and coaches, but with the very technology that facilitates your progress. Understanding the lifecycle of your data is a critical part of that partnership.

Your health is a dynamic, evolving system, and your relationship with your health data should be as well. This exploration is a starting point. It invites you to consider the nature of the information you generate and the terms under which you share it.

The ultimate goal is to move through your wellness journey with a sense of agency, equipped with the understanding necessary to make conscious choices about your data and your privacy. The power to reclaim vitality and function is deeply intertwined with the power that comes from this understanding. Your journey is your own, and the knowledge of how your story is recorded, protected, and used is an integral part of shaping its outcome.