Fundamentals of Data Stewardship in Wellness

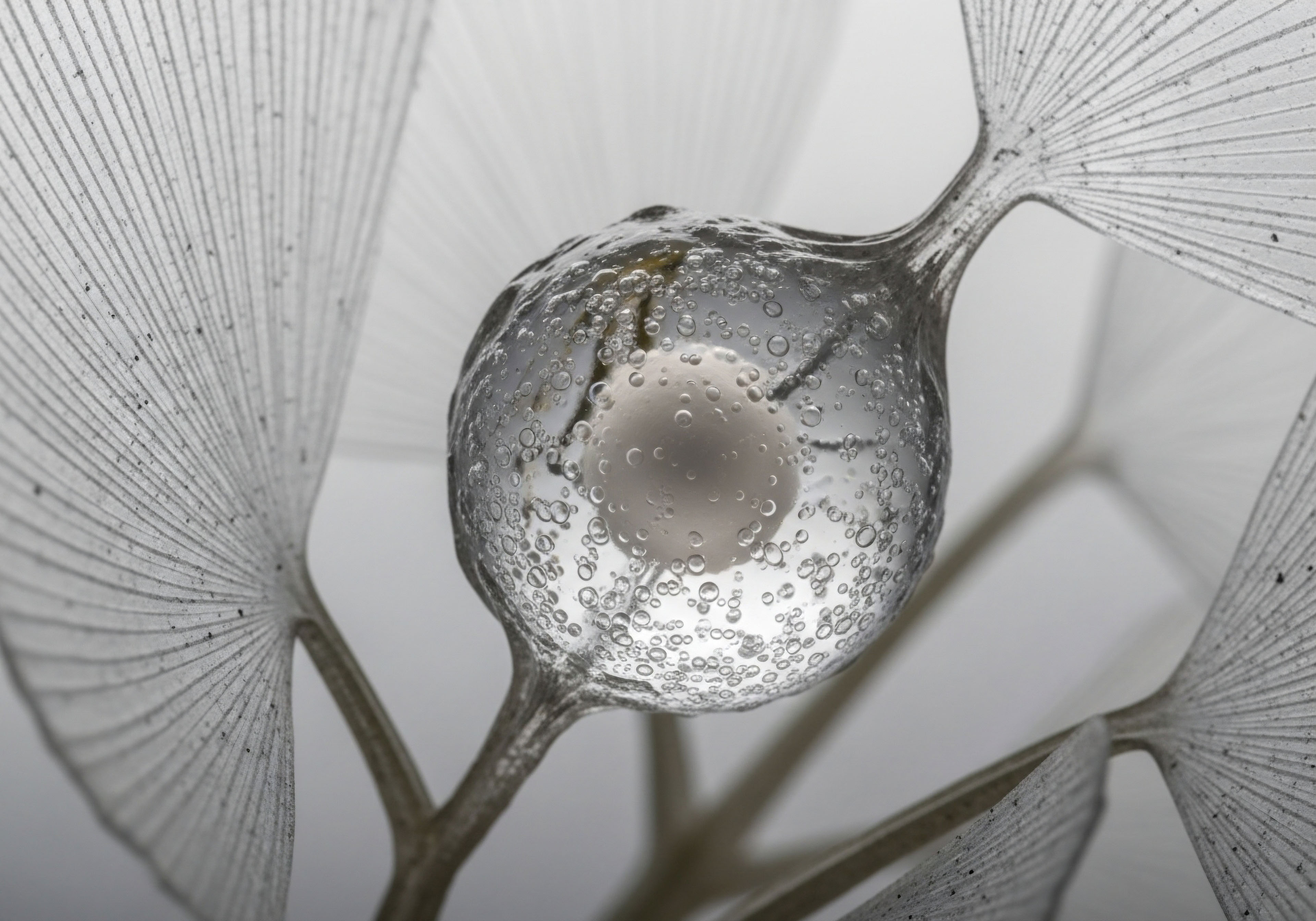

Your personal health journey, marked by the intricate dance of hormones and metabolic rhythms, generates data reflecting your unique biological narrative. This intimate information, from precise hormone levels to subtle metabolic shifts, holds immense potential for understanding your body and guiding personalized wellness protocols.

Entrusting this data to a wellness vendor necessitates a profound understanding of how they safeguard your most sensitive biological blueprint. The claims of data de-identification and anonymization represent a fundamental contract of trust, ensuring your insights contribute to collective knowledge without compromising your individual sovereignty over health narratives.

Consider your health data as a finely detailed map of your internal landscape. Data de-identification involves transforming this map, removing or altering specific landmarks that directly point to your identity. This process allows the overarching geographical patterns and trends to remain visible, offering valuable insights into population health or the efficacy of various wellness strategies.

Anonymization takes this transformation further, aiming to completely sever any links between the data and you, rendering re-identification practically impossible. Both processes serve as essential guardians of your privacy, enabling the beneficial use of health information while protecting your personal space.

Understanding how your unique biological data is de-identified and anonymized forms a cornerstone of trust in your personalized wellness journey.

The core principle behind these protective measures involves a delicate balance: preserving the utility of the data for scientific inquiry and personalized guidance, while minimizing the risk of individual recognition.

When a wellness vendor claims robust de-identification, they are asserting a commitment to this balance, suggesting that the insights derived from your hormonal and metabolic profiles contribute to a larger pool of knowledge, advancing wellness science for everyone, without revealing your individual identity. This commitment is particularly salient for those navigating the complexities of hormonal optimization protocols, where precise, longitudinal data carries significant personal weight.

What Does Data De-Identification Truly Entail?

Data de-identification represents a systematic approach to altering health information so it no longer directly or indirectly identifies an individual. This involves a meticulous review and modification of various data points. Direct identifiers, such as your name, Social Security number, or specific contact information, are obvious candidates for removal. Indirect identifiers (often called quasi-identifiers) might seem innocuous alone but could pinpoint an individual when combined, so they also receive careful consideration.

For instance, a combination of your birthdate, ZIP code, and a specific diagnosis could, in some cases, uniquely identify you within a smaller dataset. Robust de-identification protocols aim to mitigate these risks by employing various techniques that transform or obscure such quasi-identifiers. This foundational step establishes the framework for ethical data utilization within the wellness ecosystem.
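To make the quasi-identifier risk concrete, the sketch below counts how many records in a toy dataset are unique on a chosen combination of fields. The field names and records are hypothetical. A real assessment would run against the vendor's actual dataset and use the attributes an attacker could plausibly know.

```python
from collections import Counter

def unique_fraction(records, quasi_identifiers):
    """Return the fraction of records whose quasi-identifier combination
    appears exactly once in the dataset (i.e., singles out one person)."""
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    counts = Counter(keys)
    unique = sum(1 for k in keys if counts[k] == 1)
    return unique / len(records)

# Hypothetical records; field names are illustrative only.
records = [
    {"birthdate": "1980-03-14", "zip": "02139", "diagnosis": "hypogonadism"},
    {"birthdate": "1980-03-14", "zip": "02139", "diagnosis": "hypothyroidism"},
    {"birthdate": "1975-07-02", "zip": "02139", "diagnosis": "hypogonadism"},
    {"birthdate": "1975-07-02", "zip": "02139", "diagnosis": "hypogonadism"},
]

print(unique_fraction(records, ["birthdate", "zip"]))               # 0.0
print(unique_fraction(records, ["birthdate", "zip", "diagnosis"]))  # 0.5
```

Birthdate and ZIP code alone single out no one here. Adding the diagnosis makes half of the records unique.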

Intermediate Strategies for Data Verification

Moving beyond foundational concepts, a deeper engagement with a wellness vendor’s data de-identification claims requires a more analytical approach. For individuals deeply invested in their hormonal health and metabolic function, understanding the practical methods vendors employ, and the avenues for verifying those methods, becomes paramount. This involves scrutinizing the operational specifics and the governance structures that underpin their data handling assertions.

Examining Vendor De-Identification Methodologies

Wellness vendors may rely on several recognized methodologies to de-identify health data, each with its own strengths and limitations. The most widely cited standard is the Safe Harbor method defined under the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule. It requires two things: removing 18 specified categories of identifiers, and having no actual knowledge that the remaining information could identify the individual. Data meeting both conditions is considered de-identified.

These identifiers range from names and geographic subdivisions smaller than a state to all elements of dates except the year, telephone numbers, and medical record numbers. Adherence to such a comprehensive checklist provides a baseline for data protection.
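The sketch below is a rough illustration of what a few of those rules look like in practice. It applies several Safe Harbor-style transformations to one record:

- dropping direct identifiers;
- reducing dates to the year;
- truncating ZIP codes to three digits (or "000" for sparsely populated areas);
- collapsing ages over 89.

It covers only a small subset of the 18 categories. The field names are hypothetical, and so is the set of small ZIP prefixes, which stands in for the official Census-derived list. It is a sketch of the idea, not a compliance tool.

```python
# Hypothetical placeholder: 3-digit ZIP prefixes whose areas hold 20,000 or
# fewer people. The real list is derived from current Census data.
SMALL_ZIP3 = {"036", "059", "102"}

# Illustrative direct-identifier fields; real data would have many more.
DIRECT_IDENTIFIERS = {"name", "phone", "email", "ssn", "mrn"}

def safe_harbor_sketch(record):
    """Apply a few Safe Harbor-style rules to one record (not all 18 categories)."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    # Geography: keep the first three ZIP digits, or "000" for small areas.
    zip3 = record["zip"][:3]
    out["zip"] = "000" if zip3 in SMALL_ZIP3 else zip3
    # Dates: keep only the year (assumes ISO "YYYY-MM-DD" strings).
    out["draw_date"] = record["draw_date"][:4]
    # Ages over 89 collapse into a single "90+" category.
    out["age"] = "90+" if record["age"] > 89 else record["age"]
    return out

record = {
    "name": "Jane Doe", "phone": "555-0100", "mrn": "A-12345",
    "zip": "02139", "draw_date": "2024-05-17", "age": 47,
    "estradiol_pg_ml": 32.0,
}
print(safe_harbor_sketch(record))
# {'zip': '021', 'draw_date': '2024', 'age': 47, 'estradiol_pg_ml': 32.0}
```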

An alternative approach, the Expert Determination method, involves a qualified statistical expert applying generally accepted statistical and scientific principles to determine that the risk of re-identification is very small. This method offers greater flexibility for complex datasets, allowing for more nuanced data transformation while preserving a higher degree of utility for research.

Pseudonymization, another technique, replaces direct identifiers with artificial, reversible codes. This maintains the ability to link data points for longitudinal analysis while requiring an additional key to re-identify the individual, adding a layer of security.
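One common way to implement reversible pseudonymization is with random tokens plus a lookup table stored separately from the research data. The class and field names below are hypothetical. Each person always maps to the same token, which is what preserves longitudinal linkage. Only whoever holds the table can reverse the mapping.

```python
import secrets

class PseudonymVault:
    """Hypothetical token vault. The mapping table is the re-identification
    'key' and must be stored separately from the released data."""

    def __init__(self):
        self._forward = {}  # real identifier -> token
        self._reverse = {}  # token -> real identifier

    def tokenize(self, identifier):
        # Reuse the existing token so repeat visits stay linkable over time.
        if identifier not in self._forward:
            token = secrets.token_hex(8)
            while token in self._reverse:  # guard against a (very unlikely) collision
                token = secrets.token_hex(8)
            self._forward[identifier] = token
            self._reverse[token] = identifier
        return self._forward[identifier]

    def reidentify(self, token):
        # Reversal is only possible for whoever holds the vault.
        return self._reverse[token]

vault = PseudonymVault()
# Hypothetical total testosterone results (ng/dL) from two blood draws.
labs = [("jane.doe@example.com", "2024-01-10", 410),
        ("jane.doe@example.com", "2024-04-12", 620)]
released = [(vault.tokenize(who), when, value) for who, when, value in labs]
```

Both released rows carry the same token, so the change between draws remains analyzable while the email address never leaves the vault. The dates and values still travel with the token, which is why pseudonymized data usually needs further protection.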

Understanding a vendor’s specific de-identification methods, whether Safe Harbor or Expert Determination, offers clarity on their commitment to privacy.

For those monitoring their endocrine system, such as individuals undergoing Testosterone Replacement Therapy (TRT) or Growth Hormone Peptide Therapy, the collected data can be highly granular. It may include weekly injection dosages, serial blood work results (e.g. total and free testosterone, estradiol, LH, FSH, IGF-1), and subjective symptom tracking. Rigorous application of these de-identification techniques aims to let such detailed, interconnected data advance clinical understanding without revealing personal health journeys.

Evaluating Data Governance and Transparency

Verifying de-identification claims extends beyond understanding the technical methods; it requires an assessment of the vendor’s overall data governance framework. Transparency from the vendor regarding their data handling practices forms a critical element of this evaluation. Inquiring about their privacy policy, specifically the sections detailing data de-identification and sharing, provides valuable insights.

A vendor committed to responsible data stewardship will articulate their processes clearly, outlining how data from personalized wellness protocols, including details of hormonal optimization or peptide therapy, is transformed. They will also specify who has access to the de-identified data and for what purposes it is utilized, such as contributing to research on metabolic function or the efficacy of various peptide protocols.

Common De-Identification Techniques and Characteristics

| Technique | Description | Impact on Data Utility | Re-Identification Risk |
|---|---|---|---|
| Safe Harbor | Removes 18 specific HIPAA identifiers. | Moderate to High reduction. | Low. |
| Expert Determination | Statistical expert assesses and mitigates re-identification risk. | Customizable, can preserve high utility. | Low, based on expert assessment. |
| Pseudonymization | Replaces identifiers with reversible codes. | High preservation; key needed only to re-identify. | Moderate; high if the key is compromised. |
| Generalization | Replaces specific values with broader categories (e.g. age range instead of exact age). | Moderate reduction. | Low to Moderate. |
| Suppression | Removes entire data points or records with high re-identification risk. | High reduction for specific records. | Very Low for suppressed data. |
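Generalization and suppression are often used together. The sketch below works in two steps:

1. It bins exact ages into ten-year ranges and truncates ZIP codes.
2. It suppresses any record whose generalized combination is still shared by fewer than a chosen number of records.

The bin width, ZIP truncation, threshold, and field names are illustrative assumptions, not recommended settings.

```python
from collections import Counter

def generalize(record):
    """Replace exact values with broader categories."""
    low = (record["age"] // 10) * 10
    return {"age": f"{low}-{low + 9}",
            "zip": record["zip"][:3] + "**",
            "estradiol_pg_ml": record["estradiol_pg_ml"]}

def generalize_and_suppress(records, min_group=2):
    """Generalize every record, then drop those still in groups smaller than min_group."""
    generalized = [generalize(r) for r in records]
    counts = Counter((g["age"], g["zip"]) for g in generalized)
    return [g for g in generalized if counts[(g["age"], g["zip"])] >= min_group]

# Hypothetical records.
records = [
    {"age": 41, "zip": "02139", "estradiol_pg_ml": 28},
    {"age": 47, "zip": "02141", "estradiol_pg_ml": 35},
    {"age": 63, "zip": "94110", "estradiol_pg_ml": 22},
]
released = generalize_and_suppress(records)
# Keeps the two "40-49" / "021**" records; the 63-year-old is suppressed.
```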

Academic Rigor in De-Identification Verification

For the discerning individual, verifying de-identification claims requires an understanding of the statistical and computational methods that underpin robust data protection. This academic perspective examines the quantitative measures and theoretical frameworks designed to minimize re-identification risk, particularly for the highly granular biological datasets inherent in personalized wellness protocols. The interplay of endocrine markers and metabolic profiles makes it especially difficult to preserve data utility while keeping privacy risk low.

Advanced Methodologies for Data Anonymization

The field of data privacy science has developed anonymization models that go beyond simple identifier removal. Key among these are k-anonymity, l-diversity, and t-closeness. A dataset satisfies k-anonymity when each record is indistinguishable from at least k-1 other records with respect to a chosen set of quasi-identifiers.

For instance, with k=5, any combination of age, sex, and ZIP code that appears in the dataset is shared by at least five records, so no individual can be singled out by those fields alone. While foundational, k-anonymity alone does not prevent attribute disclosure. Sensitive information (e.g. a specific hormonal imbalance) can still be inferred if all k individuals in a group share it.
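Checking k-anonymity is mechanical once the quasi-identifiers are chosen: group records by those fields and take the smallest group size. This is a minimal sketch with hypothetical field names and data.

```python
from collections import Counter

def k_anonymity(records, quasi_identifiers):
    """Return k: the size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())

# Hypothetical, already-generalized records.
records = [
    {"age": "40-49", "sex": "M", "zip": "021**", "condition": "low testosterone"},
    {"age": "40-49", "sex": "M", "zip": "021**", "condition": "low testosterone"},
    {"age": "50-59", "sex": "F", "zip": "021**", "condition": "hypothyroidism"},
    {"age": "50-59", "sex": "F", "zip": "021**", "condition": "normal"},
]
qi = ["age", "sex", "zip"]
print(k_anonymity(records, qi))  # 2
```

The dataset is 2-anonymous. It still shows the attribute-disclosure gap: anyone who knows that a man in his forties from the 021 area is in the data learns his condition, because both records in his group share it.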

This limitation leads to l-diversity, which mandates that each group of k-anonymous records contains at least ‘l’ distinct sensitive values. For a dataset containing hormone levels, l-diversity would ensure that within any k-anonymous group, there is sufficient variation in, for example, testosterone or estradiol levels, preventing an attacker from inferring a specific value for an individual.
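A minimal sketch of the simplest variant, distinct l-diversity, appears below. It runs on hypothetical records in which estradiol results are stored as coarse categories. Stronger variants in the l-diversity literature, such as entropy l-diversity and recursive l-diversity, also consider how evenly the values are spread; they are not shown here.

```python
from collections import defaultdict

def distinct_l_diversity(records, quasi_identifiers, sensitive):
    """Return l: the fewest distinct sensitive values found in any group
    of records that share the same quasi-identifier values."""
    groups = defaultdict(set)
    for r in records:
        key = tuple(r[q] for q in quasi_identifiers)
        groups[key].add(r[sensitive])
    return min(len(values) for values in groups.values())

# Hypothetical records with estradiol results stored as categories.
records = [
    {"age": "40-49", "zip": "021**", "estradiol": "high"},
    {"age": "40-49", "zip": "021**", "estradiol": "high"},
    {"age": "40-49", "zip": "021**", "estradiol": "high"},
    {"age": "50-59", "zip": "021**", "estradiol": "low"},
    {"age": "50-59", "zip": "021**", "estradiol": "normal"},
    {"age": "50-59", "zip": "021**", "estradiol": "high"},
]
print(distinct_l_diversity(records, ["age", "zip"], "estradiol"))  # 1
```

The data is 3-anonymous but only 1-diverse: everyone in the 40-49 group is known to have a high result.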

Building further, t-closeness addresses a gap that l-diversity leaves open. Even with several distinct values, the distribution of a sensitive attribute within a group can differ sharply from its distribution in the dataset as a whole. For example, a group's testosterone values might all be clinically low while remaining numerically distinct, and that skew itself reveals information. T-closeness requires that the distribution of a sensitive attribute in every group is “close” to its distribution in the overall dataset, within a threshold ‘t’. Each of these models closes a weakness of the one before it, and together they strengthen privacy guarantees for complex health data.
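The original t-closeness proposal measures "closeness" with the Earth Mover's Distance. The sketch below uses its simplest case: a categorical attribute whose categories are all treated as equally far apart, where that distance reduces to total variation distance (half the L1 difference). Records and labels are hypothetical. Numeric attributes such as raw hormone values would need an ordered-distance variant instead.

```python
from collections import Counter, defaultdict

def distribution(values):
    """Turn a list of category labels into a probability distribution."""
    counts = Counter(values)
    n = len(values)
    return {v: c / n for v, c in counts.items()}

def equal_distance_emd(p, q):
    """EMD when all categories are equally far apart: half the L1 difference."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

def smallest_t(records, quasi_identifiers, sensitive):
    """Return the largest distance between any group's sensitive-value
    distribution and the overall one, i.e. the smallest t the data satisfies."""
    overall = distribution([r[sensitive] for r in records])
    groups = defaultdict(list)
    for r in records:
        groups[tuple(r[q] for q in quasi_identifiers)].append(r[sensitive])
    return max(equal_distance_emd(distribution(v), overall) for v in groups.values())

records = [
    {"age": "40-49", "zip": "021**", "testosterone": "low"},
    {"age": "40-49", "zip": "021**", "testosterone": "low"},
    {"age": "50-59", "zip": "021**", "testosterone": "normal"},
    {"age": "50-59", "zip": "021**", "testosterone": "high"},
]
print(smallest_t(records, ["age", "zip"], "testosterone"))  # 0.5
```

A value of 0.5 means the data satisfies t-closeness only for t of 0.5 or more. That is a weak guarantee, because each group's mix of results departs markedly from the cohort as a whole.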

Anonymization models such as k-anonymity, l-diversity, and t-closeness each close a specific re-identification gap, which matters for sensitive biological data, though none offers complete protection on its own.

Differential privacy represents a more recent and powerful paradigm. It involves adding carefully calibrated noise to data or query results, ensuring that the presence or absence of any single individual’s data in the dataset does not significantly affect the outcome of an analysis.
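Stated formally, the standard definition is ε-differential privacy. A randomized mechanism M satisfies it if, for every pair of datasets D and D' that differ in one individual's data, and every set S of possible outputs, the following holds:

```latex
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon}\,\Pr[\mathcal{M}(D') \in S]
```

Smaller values of ε force the two output distributions closer together. That is what makes any single person's participation hard to detect.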

This mathematical guarantee provides a strong defense against re-identification attacks, even for highly unique data points. That makes it highly relevant for sensitive endocrine system data, where individual variations can be substantial. The challenge lies in choosing the privacy budget, usually written ε. A smaller budget means more noise and stronger privacy, while a larger one preserves more of the precision needed for clinical insights.
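The trade-off can be seen in the classic Laplace mechanism for a counting query. The sketch assumes a count that changes by at most 1 when any one person is added or removed (sensitivity 1), so noise is drawn from a Laplace distribution with scale 1/ε. The IGF-1 values are invented. The standard-library sampler is for illustration only: production systems use vetted differential-privacy libraries, because naive floating-point noise sampling has known weaknesses.

```python
import random

def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.
    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(0)  # seeded only so the illustration is repeatable
igf1 = [180, 210, 95, 320, 150, 275, 88, 240]  # hypothetical IGF-1 results, ng/mL
for eps in (0.1, 1.0, 10.0):
    print(eps, round(private_count(igf1, lambda v: v > 250, eps, rng), 1))
```

The true count here is 2. With ε = 0.1 the noise typically swamps it, while with ε = 10 the released value stays close. Choosing ε is the utility-versus-privacy balance the paragraph describes.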

The Interconnectedness of Endocrine Data and Re-Identification Risk

The endocrine system’s profound interconnectedness means that a comprehensive wellness profile often includes a multitude of correlated markers. A patient undergoing a protocol involving Testosterone Cypionate and Gonadorelin, for example, will have data points spanning testosterone, estradiol, LH, FSH, and potentially other pituitary hormones.

These data points, when combined with metabolic markers like glucose, insulin, and lipid panels, create a highly dimensional and often unique biological signature. This intricate web of physiological data, while invaluable for personalized care, simultaneously amplifies the re-identification risk.

An attacker possessing even a few quasi-identifiers, combined with external public datasets, could potentially leverage the unique correlations within an individual’s hormonal and metabolic profile to infer identity. For instance, a rare combination of specific peptide therapy (e.g. Tesamorelin, Hexarelin) usage, coupled with a distinct age range and geographic location, could narrow down the potential pool of individuals considerably. Verifying de-identification claims, therefore, requires assurance that the vendor understands these systemic risks and applies anonymization techniques that account for the synergistic identifying power of interconnected biological data.
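The narrowing effect is easy to show in a few lines of code: each attribute an attacker already knows filters a de-identified release further. The records and attribute names are hypothetical, and a real dataset would be far larger. The pattern of a candidate pool shrinking to a single record is the same one described above.

```python
def candidate_pool(release, known):
    """Return the records consistent with everything the attacker knows."""
    return [r for r in release if all(r[k] == v for k, v in known.items())]

# Hypothetical de-identified release: no names, but therapy, age band, and region remain.
release = [
    {"peptide": "none", "age_band": "40-49", "region": "Northeast"},
    {"peptide": "none", "age_band": "40-49", "region": "Northeast"},
    {"peptide": "tesamorelin", "age_band": "40-49", "region": "Northeast"},
    {"peptide": "tesamorelin", "age_band": "50-59", "region": "Northeast"},
    {"peptide": "hexarelin", "age_band": "40-49", "region": "West"},
    {"peptide": "none", "age_band": "50-59", "region": "West"},
]

known = {}
for attribute, value in [("region", "Northeast"), ("age_band", "40-49"),
                         ("peptide", "tesamorelin")]:
    known[attribute] = value
    print(attribute, len(candidate_pool(release, known)))
# Prints, one per line: region 4, age_band 3, peptide 1
```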

Re-Identification Risks and Mitigation Strategies for Biological Data

Verifying de-identification claims in the context of personalized wellness protocols involves a critical examination of potential re-identification vectors and the vendor’s strategies to counteract them.

- Linkage Attacks: Attackers combine de-identified data with external public datasets (e.g. voter registration, public health records) to re-identify individuals.

- Homogeneity Attacks: Within a k-anonymous group, if all individuals share the same sensitive attribute (e.g. a specific rare hormonal condition), that attribute becomes known for the entire group.

- Background Knowledge Attacks: An attacker uses prior knowledge about an individual (e.g. knowing that a person had a specific peptide therapy at a certain time) to re-identify them in a de-identified dataset.

- Differencing Attacks: Comparing multiple releases or query results that differ by only one or a few records can isolate an individual’s values, even when each release is de-identified on its own.
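A differencing attack needs no names at all. In the sketch below, two hypothetical aggregate releases of the same cohort are published, one before and one after a single person enrolled. Together they are enough to recover that person's exact value. The numbers are invented; the point is that each release looks harmless on its own.

```python
from statistics import mean

# Hypothetical published summaries: mean free testosterone (pg/mL) for a cohort,
# released once before and once after a single new member enrolled.
cohort_before = [92.0, 110.0, 75.0, 130.0]
cohort_after = cohort_before + [61.0]  # the newcomer's value is never published directly

release_1 = (len(cohort_before), mean(cohort_before))
release_2 = (len(cohort_after), mean(cohort_after))

# An attacker who sees only the two (count, mean) releases recovers the newcomer's value.
(n1, m1), (n2, m2) = release_1, release_2
inferred = n2 * m2 - n1 * m1
print(round(inferred, 6))  # 61.0
```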

Effective mitigation strategies include:

- Data Perturbation: Adding controlled noise to numerical data (e.g. hormone levels) or altering categorical data to obscure exact values while preserving statistical properties.

- Synthetic Data Generation: Creating entirely new datasets that statistically resemble the original but contain no real individual’s records. This can offer strong privacy protection, particularly when the generator itself carries a formal guarantee such as differential privacy. Synthetic data that closely reproduces rare records can still leak information.

- Secure Multi-Party Computation (SMC): Allowing multiple parties to jointly compute a function over their inputs while keeping those inputs private.

- Regular Privacy Audits: Independent third-party assessments of de-identification processes and their effectiveness against re-identification attempts.
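Secure multi-party computation covers many protocols. One of the simplest building blocks is a secure sum based on additive secret sharing. The sketch below simulates all parties inside one process, so it only illustrates the arithmetic. A real deployment would run each party separately and exchange shares over authenticated channels. The modulus and clinic counts are illustrative assumptions.

```python
import secrets

MODULUS = 2**61 - 1  # arithmetic is done modulo a large number (illustrative choice)

def share(value, n_parties):
    """Split an integer into n random shares that sum to value mod MODULUS.
    Any n-1 of the shares together reveal nothing about the value."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values):
    """Each party splits its value into shares; party i adds up the i-th shares.
    Only these per-party subtotals are combined, so no single input is exposed."""
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]
    subtotals = [sum(s[i] for s in all_shares) % MODULUS for i in range(n)]
    return sum(subtotals) % MODULUS

# Hypothetical: three clinics each hold a count of patients on a given protocol.
clinic_counts = [42, 17, 65]
print(secure_sum(clinic_counts))  # 124
```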


Reflection on Your Biological Blueprint

The journey to understanding how a wellness vendor manages your data is a testament to your proactive engagement with your health. The knowledge gained here about de-identification and anonymization represents more than technical specifications; it signifies a deeper awareness of the ecosystem surrounding your personal biological information.

This awareness empowers you to ask incisive questions, ensuring that the trust you place in these services aligns with rigorous standards of data stewardship. Your vitality, your function, and your peace of mind are inextricably linked to the careful handling of your unique biological blueprint. This understanding forms a vital component of your ongoing pursuit of optimized well-being, a personal path requiring thoughtful consideration at every juncture.