Fundamentals

Your journey toward understanding the intricate systems of your own body often begins with a feeling. It is a subtle shift in energy, a change in sleep patterns, or a new difficulty in achieving goals that were once routine.

This lived experience, this deeply personal sense that your internal calibration is off, is the most valid starting point for any health inquiry. When you seek answers, you enter a world of clinical science, a world filled with powerful tools for restoration, including the rapidly advancing field of peptide therapies.

These remarkable molecules, short chains of amino acids, act as precise messengers, speaking the body’s own chemical language to encourage healing, rebalance systems, and optimize function. You may hear about protocols that can sharpen cognition, accelerate tissue repair, or restore youthful hormonal signaling, and a sense of hope begins to build. You are ready to reclaim your vitality.

This personal desire for wellness, however, connects to a much larger, global challenge. Bringing a new peptide therapy from a laboratory concept to a clinically validated protocol available to you is an incredibly complex and resource-intensive process. It involves rigorous testing through clinical trials, where researchers meticulously document a therapy’s effects on human participants.

These trials happen in different hospitals, in different countries, run by different teams. Each team gathers a mountain of data points for every single participant: baseline health markers, dosage information, injection schedules, blood test results, subjective feedback on symptoms, and any observed side effects. The goal is to build a convincing case for the therapy’s safety and effectiveness. This process is the bedrock of modern medicine, intended to ensure that any protocol you undertake rests on a foundation of evidence.

The Language of Discovery

The core of the challenge resides in the language of this collected data. At present, research teams across the world often document their findings using their own unique methods, software, and terminology. A research center in Germany might record patient outcomes in one format, while a trial in Japan uses another, and a university study in the United States uses a third.

They are all speaking about the same biological processes, the same peptide, and the same human desire for healing, yet they are speaking in different data dialects. This fragmentation creates immense friction. Combining the results of these separate trials to get a clear, big-picture view becomes a monumental task of translation and interpretation.

It slows down the pace of discovery, making it harder to confirm a therapy’s benefits, identify potential risks for specific populations, or refine protocols for optimal results.

Imagine trying to assemble a single, coherent story from chapters written in different languages by authors who never consulted one another. This is often the reality of multi-site medical research today. The solution lies in a universal language: a standardized data format that every research team, in every country, can use.

This shared framework is designed so that when one scientist records a specific outcome, another scientist halfway across the world can interpret it quickly and accurately. It allows data from multiple small studies to be combined into a single, large, and statistically powerful dataset. This process of data aggregation is what allows science to move from promising anecdotes to definitive conclusions, accelerating the journey of a peptide therapy from the lab to the clinic, and ultimately, to you.

What Is a Standardized Data Format?

A standardized data format is a globally agreed-upon structure for recording and exchanging information. In the context of peptide therapy trials, it would mean defining exactly how to label every single piece of data. For instance, instead of one study using “Testosterone Level” and another using “T-level (ng/dL),” the standard would mandate a single, precise term.

It would specify the units of measurement, the way patient demographics are recorded, and how adverse events are categorized. This creates a system where data becomes interoperable, meaning it can be easily shared and understood by different computer systems and research platforms without manual conversion or the risk of misinterpretation.
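To make the idea concrete, here is a minimal Python sketch of what a standard does in practice: it maps each site’s local label to one agreed term and converts every value into one agreed unit. The labels, the standard term `testosterone_total`, and the `harmonize` helper are hypothetical illustrations, not part of any real standard; the only domain fact used is that 1 nmol/L of testosterone corresponds to roughly 28.84 ng/dL.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StandardObservation:
    term: str     # the single agreed-upon name for the measurement
    value: float  # value expressed in the standard unit
    unit: str     # the single agreed-upon unit


# Hypothetical map: site-specific label -> (standard term, unit that site used)
LABEL_MAP = {
    "Testosterone Level": ("testosterone_total", "ng/dL"),
    "T-level (ng/dL)": ("testosterone_total", "ng/dL"),
    "Testosteron (nmol/L)": ("testosterone_total", "nmol/L"),
}

# Factor converting each site unit into the standard unit, ng/dL.
# Testosterone's molar mass is about 288.4 g/mol, so 1 nmol/L is about 28.84 ng/dL.
TO_NG_PER_DL = {"ng/dL": 1.0, "nmol/L": 28.84}


def harmonize(label: str, value: float) -> StandardObservation:
    """Translate one locally labelled value into the standard term and unit."""
    term, site_unit = LABEL_MAP[label]  # a KeyError flags an unmapped label
    return StandardObservation(term, value * TO_NG_PER_DL[site_unit], "ng/dL")


# Two sites using different labels now produce identical records.
print(harmonize("Testosterone Level", 500) == harmonize("T-level (ng/dL)", 500))
```

In this sketch every new site-specific label still has to be added to the mapping by hand. A shared standard removes that step by having every site record the same term in the same unit from the start.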

Adopting a universal data language allows researchers worldwide to combine their findings, transforming fragmented information into powerful, conclusive evidence.

This concept is the key to unlocking the full potential of peptide science. By enabling researchers to pool their results, we can get faster, more reliable answers to critical questions. Which dose of Sermorelin is most effective for fat loss? What is the long-term safety profile of Ipamorelin/CJC-1295 across a diverse population?

Are there subtle side effects that only become apparent when you analyze data from thousands of patients instead of just a few hundred? Answering these questions swiftly and accurately is the promise of standardized data. It is about building a more efficient, collaborative, and powerful global research engine, driven by the shared goal of translating biological knowledge into human vitality.

This effort directly impacts your personal health journey. The faster researchers can validate a new protocol, the sooner it becomes a trusted tool for clinicians to help patients like you. It means that the therapeutic options available to you are backed by a deeper, more robust body of evidence drawn from a global population.

Your individual quest for wellness is therefore intrinsically linked to this global need for a common data language. The pursuit of personal optimization and the advancement of clinical science are two aspects of the same endeavor, and standardized data formats are the bridge that connects them.

Intermediate

To appreciate the profound impact of data standardization on peptide therapy, we must move from the conceptual to the practical. The architecture of this universal language for health research is already being built. One of the most significant frameworks is HL7 Fast Healthcare Interoperability Resources, known as FHIR (pronounced “fire”).

FHIR is a standard developed by the organization Health Level Seven International, designed specifically to facilitate the electronic exchange of healthcare information. It builds on modern web technologies, such as RESTful APIs and JSON or XML data representations, and defines a set of modular components called “Resources.” Each Resource represents a discrete, granular piece of clinical or administrative information.

Think of FHIR Resources as standardized digital building blocks. There is a ‘Patient’ Resource that holds demographic information in a consistent structure. There is an ‘Observation’ Resource for lab results, vital signs, and other measurements. A ‘MedicationAdministration’ Resource documents the specifics of a dose given to a patient.

A ‘Condition’ Resource captures diagnoses. By defining these blocks with consistent, predictable structures, FHIR lets any system designed to use them speak the same language. Applications can pull and combine data from different sources with far less custom translation, because each piece of information arrives in a known shape. FHIR’s stated design goal is for its core Resources to cover the most common 80% of data exchange needs, with a built-in extension mechanism for more specialized cases.
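The sketch below shows what two of these building blocks can look like as JSON, built with plain Python dictionaries. It follows the FHIR R4 element names (`resourceType`, `status`, `code`, `subject`, `valueQuantity`) as we understand them, keeps only a handful of fields, and uses LOINC code 2484-4, which we believe identifies serum/plasma IGF-1 by mass concentration; verify any code against the official LOINC database before relying on it. The helper functions are hypothetical.

```python
import json


def make_patient(patient_id: str, birth_date: str, gender: str) -> dict:
    """Minimal FHIR R4 Patient; real study profiles would require more elements."""
    return {
        "resourceType": "Patient",
        "id": patient_id,
        "gender": gender,         # FHIR code set: male | female | other | unknown
        "birthDate": birth_date,  # ISO 8601 date, e.g. "1980-04-12"
    }


def make_observation(obs_id: str, patient: dict, loinc_code: str, display: str,
                     value: float, ucum_unit: str) -> dict:
    """Minimal FHIR R4 Observation linked to its Patient by reference."""
    return {
        "resourceType": "Observation",
        "id": obs_id,
        "status": "final",
        "code": {"coding": [{"system": "http://loinc.org",
                             "code": loinc_code, "display": display}]},
        "subject": {"reference": f"Patient/{patient['id']}"},
        "valueQuantity": {"value": value, "unit": ucum_unit,
                          "system": "http://unitsofmeasure.org", "code": ucum_unit},
    }


patient = make_patient("p-001", "1980-04-12", "male")
# 2484-4 is assumed to be serum/plasma IGF-1 (mass/volume); verify against LOINC.
igf1 = make_observation("obs-001", patient, "2484-4", "IGF-1", 210, "ng/mL")
print(json.dumps(igf1, indent=2))
```

The `subject` reference is what ties the lab result back to the participant, so any system that understands the structure can reassemble a patient’s record from separate Resources.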

How Would FHIR Apply to a Peptide Therapy Trial?

Let’s consider a hypothetical international clinical trial for a peptide protocol aimed at improving metabolic health, such as Tesamorelin. This peptide is known to stimulate growth hormone release and has shown efficacy in reducing visceral adipose tissue. In our trial, we have research sites in North America, Europe, and Asia.

Without a standard, each site might develop its own database. The American site might record the dose as “1mg/day,” the German site as “1000mcg QD,” and the Japanese site might have a localized system with different field names entirely.

When the time comes to analyze the global results, a data science team must undertake a painstaking process of “mapping” and “harmonizing” this data, trying to ensure that “1mg/day” and “1000mcg QD” are correctly interpreted as the same thing. This process is slow, expensive, and prone to error.
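The harmonization step itself might look like the following sketch, which reduces free-text dose strings to a total daily dose in milligrams. The accepted spellings, the frequency table, and the `daily_dose_mg` function are illustrative assumptions; a real harmonization effort would need many more patterns, and anything the code cannot parse should be flagged for human review rather than guessed.

```python
import re

# Hypothetical lookup tables for the spellings this sketch accepts.
UNIT_TO_MG = {"mg": 1.0, "mcg": 0.001, "ug": 0.001}
DOSES_PER_DAY = {"/day": 1, "qd": 1, "daily": 1, "bid": 2}

# amount, unit, frequency; e.g. "1mg/day", "1000mcg QD", "0.5 mg BID"
DOSE_PATTERN = re.compile(
    r"^\s*(\d+(?:\.\d+)?)\s*(mg|mcg|ug)\s*(/day|qd|daily|bid)\s*$",
    re.IGNORECASE,
)


def daily_dose_mg(text: str) -> float:
    """Convert a free-text dose string to total milligrams per day."""
    match = DOSE_PATTERN.match(text)
    if match is None:
        # Never guess: unrecognized entries go back to a human for review.
        raise ValueError(f"unrecognized dose string: {text!r}")
    amount, unit, frequency = match.groups()
    return float(amount) * UNIT_TO_MG[unit.lower()] * DOSES_PER_DAY[frequency.lower()]


print(daily_dose_mg("1mg/day"), daily_dose_mg("1000mcg QD"))
```

Even this tiny example shows why the approach is fragile: every new abbreviation or unit a site invents requires another rule.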

With a FHIR-based approach, every site would use the same standardized Resources. The protocol would be captured using a ‘PlanDefinition’ Resource. Each participant would have a ‘Patient’ Resource. When a dose of Tesamorelin is administered, it is recorded as a ‘MedicationAdministration’ Resource, which provides structured fields for the medication (identified by a standardized code), the dose amount, and the dose unit (e.g. ‘mg’).

The patient’s subsequent blood work showing changes in IGF-1 levels would be captured in a series of ‘Observation’ Resources, each linked back to the ‘Patient’ Resource. Any reported side effects, like injection site reactions or fluid retention, would be documented in an ‘AdverseEvent’ Resource.
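Here is a minimal sketch of such a ‘MedicationAdministration’ record as a Python dictionary, using FHIR R4 element names as we understand them (`status`, `medicationCodeableConcept`, `subject`, `effectiveDateTime`, `dosage.dose`). The medication is identified only by text because no specific code is named here, and the dose unit is written as a UCUM code, the unit system FHIR commonly uses for quantities. The helper function and identifiers are hypothetical.

```python
def make_medication_administration(admin_id: str, patient_id: str, when: str,
                                   dose_value: float, dose_ucum: str,
                                   medication_text: str = "Tesamorelin") -> dict:
    """Minimal FHIR R4 MedicationAdministration with a structured dose."""
    return {
        "resourceType": "MedicationAdministration",
        "id": admin_id,
        "status": "completed",
        # A real trial would put a standardized medication code here; text is a placeholder.
        "medicationCodeableConcept": {"text": medication_text},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": when,  # ISO 8601 timestamp of the injection
        "dosage": {
            "route": {"text": "subcutaneous"},
            "dose": {                # amount and unit live in separate fields
                "value": dose_value,
                "unit": dose_ucum,
                "system": "http://unitsofmeasure.org",
                "code": dose_ucum,   # UCUM unit code, e.g. "mg" or "ug"
            },
        },
    }


record = make_medication_administration("ma-001", "p-001", "2024-01-15T08:00:00Z", 1, "mg")
print(record["dosage"]["dose"])
```

Because the dose is a number plus a coded unit rather than a string like “1mg/day”, software can compare and convert doses from every site without parsing free text.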

Standardized formats like FHIR act as a universal translator, helping ensure that data from a clinical trial in one country is interpreted consistently by researchers in another.

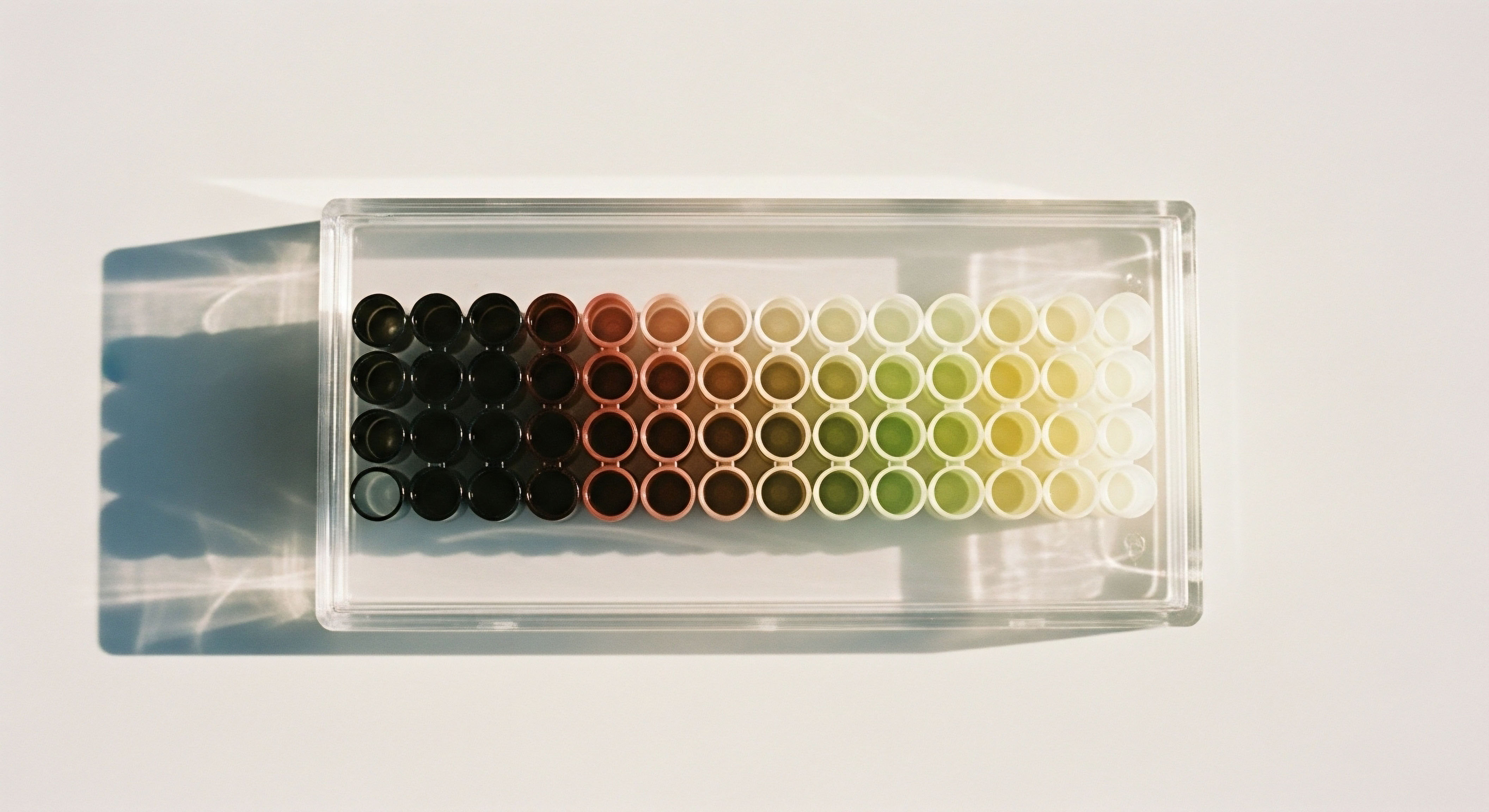

This structured approach transforms the research landscape. Data from all three continents could be pooled into a central database as it is collected. Analysts could begin looking for patterns with far less data cleaning, compare outcomes between demographic groups more easily, identify subtle safety signals that only appear at scale, and accelerate the entire process of proving the therapy’s value. The table below illustrates this shift from a fragmented to a unified data ecosystem.
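Once every site emits the same structure, pooling becomes a simple grouping operation. This sketch uses invented IGF-1 values and a stripped-down ‘Observation’ shape, and groups results from all sites by code system, code, and unit. The `pool_by_code` function is hypothetical; grouping on the unit as well as the code keeps values recorded in different units from being silently averaged together.

```python
from collections import defaultdict
from statistics import mean


def pool_by_code(site_observations: dict) -> dict:
    """Group Observation values from every site by (code system, code, unit)."""
    pooled = defaultdict(list)
    for site, observations in site_observations.items():
        for obs in observations:
            coding = obs["code"]["coding"][0]
            quantity = obs["valueQuantity"]
            key = (coding["system"], coding["code"], quantity["code"])
            pooled[key].append((site, quantity["value"]))
    return dict(pooled)


def igf1(value: float) -> dict:
    # Stripped-down Observation holding only the fields the pooling step reads.
    return {"code": {"coding": [{"system": "http://loinc.org", "code": "2484-4"}]},
            "valueQuantity": {"value": value, "code": "ng/mL"}}


# Invented values for three hypothetical sites.
sites = {
    "north_america": [igf1(200), igf1(220)],
    "europe": [igf1(180)],
    "asia": [igf1(240)],
}
for key, values in pool_by_code(sites).items():
    print(key, len(values), mean(v for _, v in values))
```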

| Data Point | Fragmented (Pre-Standardization) Approach | Standardized (FHIR) Approach |
|---|---|---|
| Patient Identifier | Site-specific IDs, e.g. “Patient 12-A” at one site, “DE-0045” at another. | A single, globally unique identifier within a ‘Patient’ Resource. |
| Peptide Administered | Free-text entries: “Ipamorelin/CJC”, “CJC 1295 w/ Ipa”, “Sermorelin”. | ‘Medication’ Resource using a standard code (e.g. RxNorm, where a code exists) for the specific peptide or peptide combination. |
| Dosage | Inconsistent formats: “100mcg”, “0.1mg”, “10 units”. | ‘MedicationAdministration’ Resource with distinct fields for value (e.g. 100) and unit (e.g. ‘mcg’). |
| Lab Result (IGF-1) | Varying names and units: “IGF-1”, “Somatomedin C (ng/mL)”. | ‘Observation’ Resource using the LOINC code for IGF-1 and specified units. |
| Adverse Event | Descriptive text: “Redness at injection spot”, “Patient felt puffy”. | ‘AdverseEvent’ Resource with coded severity and standardized terminology (e.g. MedDRA). |

Strengthening Protocols for Hormonal Health

This same logic applies directly to established hormonal optimization protocols. Consider Testosterone Replacement Therapy (TRT) for men. A protocol commonly used in clinics involves weekly injections of Testosterone Cypionate, combined with Gonadorelin to help maintain testicular function and an aromatase inhibitor like Anastrozole to limit the conversion of testosterone to estrogen.

The effectiveness and safety of this delicate balance depend on tracking multiple data points: testosterone levels (total and free), estradiol (E2), luteinizing hormone (LH), follicle-stimulating hormone (FSH), and hematocrit, alongside subjective patient-reported outcomes on mood, libido, and energy.
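A shared standard could also define which measurements a monitoring visit must include and in which units. The sketch below checks a visit record against such a panel. The analyte names and units in `TRT_PANEL` are illustrative assumptions rather than a published standard, and the check says nothing about what the values mean clinically.

```python
from typing import Dict, List, Tuple

# Hypothetical standard panel for a TRT monitoring visit: analyte -> expected unit.
TRT_PANEL: Dict[str, str] = {
    "testosterone_total": "ng/dL",
    "testosterone_free": "pg/mL",
    "estradiol": "pg/mL",
    "lh": "mIU/mL",
    "fsh": "mIU/mL",
    "hematocrit": "%",
}


def panel_problems(visit: Dict[str, Tuple[float, str]]) -> List[str]:
    """List missing analytes and unit mismatches for one visit record."""
    problems = []
    for analyte, expected_unit in TRT_PANEL.items():
        if analyte not in visit:
            problems.append(f"missing {analyte}")
        else:
            _, unit = visit[analyte]
            if unit != expected_unit:
                problems.append(f"{analyte}: expected {expected_unit}, got {unit}")
    return problems


# Invented visit with one unit mismatch and one missing analyte.
visit = {"testosterone_total": (650, "ng/dL"), "testosterone_free": (120, "pg/mL"),
         "estradiol": (110, "pmol/L"), "lh": (0.5, "mIU/mL"), "fsh": (0.8, "mIU/mL")}
print(panel_problems(visit))
```

Catching incomplete or mis-unit records at the point of entry is far cheaper than discovering them later, when thousands of visits from many clinics are being pooled.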

Standardizing the collection of this data across thousands of patients would be transformative. It would allow for the creation of massive, real-world datasets that could answer critical questions with far greater statistical power. For example, what is the precise relationship between Anastrozole dosage and long-term bone mineral density in men on TRT?

At what hematocrit level does the risk of thromboembolic events begin to significantly increase? By pooling harmonized data from clinics worldwide, we could refine these protocols with far greater precision, moving closer to truly personalized biochemical recalibration.

The same holds true for female hormone protocols, which can be even more complex, involving careful balancing of testosterone, progesterone, and estrogens depending on menopausal status. Standardized data would help elucidate the long-term benefits and risks of adding low-dose testosterone to a woman’s regimen or optimizing progesterone timing.

For Growth Hormone Peptide Therapies, it would allow for the direct comparison of different secretagogues like Sermorelin and Ipamorelin, helping to clarify which is best suited for specific goals like fat loss versus tissue repair. The lack of standardized protocols is a frequently cited limitation in the current clinical application of these peptides. A common data format is the foundational step required to build those evidence-based, best-practice protocols.

- Data Aggregation: Standardized formats permit the seamless merging of data from multinational trials, dramatically increasing the statistical power of the analysis.

- Efficiency and Speed: By greatly reducing the need for manual data harmonization, standardization shortens research timelines, so therapies can be validated and brought to the clinic faster.

- Enhanced Safety Monitoring: Large, pooled datasets allow for the detection of rare adverse events and long-term safety signals that are invisible in smaller, isolated studies. This is a critical aspect of global pharmacovigilance.

- Foundation for Advanced Analysis: A clean, standardized dataset is the prerequisite for applying advanced analytical techniques like machine learning to predict patient responses or optimize dosing on an individual level.
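The statistical-power point can be illustrated with the textbook inverse-variance (fixed-effect) method for combining effect estimates from several studies. This is a swapped-in illustration of the general principle, not a method the text prescribes: it assumes all studies estimate the same underlying effect, and when they do not, a random-effects model is the usual alternative.

```python
import math


def fixed_effect_pool(estimates, standard_errors):
    """Inverse-variance (fixed-effect) pooled estimate and its standard error."""
    if len(estimates) != len(standard_errors) or not estimates:
        raise ValueError("need one standard error per estimate")
    weights = [1.0 / se ** 2 for se in standard_errors]  # more precise studies weigh more
    total_weight = sum(weights)
    pooled = sum(w * est for w, est in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Three hypothetical trials reporting the same effect with the same precision:
# pooling leaves the estimate unchanged but cuts the standard error by sqrt(3).
estimate, se = fixed_effect_pool([2.0, 2.0, 2.0], [1.0, 1.0, 1.0])
print(estimate, se)
```

The shrinking pooled standard error is the mechanism behind the gain in power: the same true effect becomes easier to distinguish from noise as comparable studies are added.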

Academic

The acceleration of peptide therapy development through data standardization extends far beyond the harmonization of clinical endpoints and administrative data. The true frontier of personalized medicine lies in the integration of multi-omics data into clinical trial frameworks. This approach measures several layers of a person’s biological molecules at once (genes, RNA transcripts, proteins, and metabolites) to create a high-resolution map of their unique physiology.

By understanding an individual’s specific genomic blueprint, proteomic expression, and metabolic state, we can begin to predict their precise response to a given therapeutic peptide. However, the sheer volume and complexity of this data make standardization an absolute prerequisite for meaningful global collaboration.

A multi-omics approach provides a systems-biology view of health, acknowledging that a peptide’s effect is modulated by a complex network of biological factors. Integrating these deep datasets with standardized clinical outcome data allows researchers to move from asking “Does this peptide work?” to asking “For whom does this peptide work, and why?” This transition is the core of precision medicine.

Without common data formats for both the ‘omics’ data and the clinical data, any attempt to integrate them on a global scale would be futile. It would be like trying to overlay maps drawn at different scales, with different symbols, and in different languages. Standardization creates the common grid upon which all these data layers can be accurately placed.
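In code, the “common grid” is often nothing more exotic than a shared participant identifier. This hypothetical `join_layers` function attaches each omics layer to the clinical record with the same ID and marks missing layers explicitly; all field names and values are invented for illustration.

```python
def join_layers(clinical: dict, **layers: dict) -> dict:
    """Attach each omics layer to the clinical record sharing its participant ID.

    A participant absent from a layer gets None for that layer, so gaps stay
    visible instead of silently dropping the participant.
    """
    merged = {}
    for participant_id, record in clinical.items():
        row = dict(record)  # copy so the input records are not modified
        for layer_name, layer in layers.items():
            row[layer_name] = layer.get(participant_id)
        merged[participant_id] = row
    return merged


# Hypothetical records keyed by a shared participant ID.
clinical = {"P1": {"igf1_change_ng_ml": 85}, "P2": {"igf1_change_ng_ml": 20}}
genomics = {"P1": {"ghrhr_variant": "present"}}
proteomics = {"P1": {"ada_positive": False}, "P2": {"ada_positive": True}}
merged = join_layers(clinical, genomics=genomics, proteomics=proteomics)
print(merged)
```

The join only works if every lab and clinic uses the same identifier scheme, which is itself one of the things a data standard has to specify.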

Integrating the Multi-Omics Layers

Each ‘omic’ layer provides a different and vital window into a patient’s biological state. For a peptide therapy trial, standardizing the data generated from each of these layers is crucial for building predictive models of efficacy and safety.

How Could Multi-Omics Data Be Standardized in Peptide Trials?

The integration of multi-omics data requires a new level of standardization. It involves agreeing on formats not just for clinical observations, but also for the massive datasets generated by sequencing machines and mass spectrometers. This includes standardizing file formats (e.g. FASTQ for raw sequence data, VCF for genetic variants), metadata about the experimental conditions, and the bioinformatic pipelines used for initial data processing. The goal is to ensure that a genomic dataset from a lab in Seoul is directly comparable to one from a lab in San Francisco, allowing researchers to find meaningful biological signals in the noise.
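To show why a fixed file format matters, here is a minimal parser for one data line of a VCF file. It relies only on the format’s fixed leading columns (CHROM, POS, ID, REF, ALT, QUAL, FILTER, INFO) and ignores header lines and per-sample columns. It is a sketch for illustration; a production pipeline would use an established VCF library instead.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Union


@dataclass
class Variant:
    chrom: str
    pos: int                           # 1-based position, as VCF specifies
    id: str
    ref: str
    alt: List[str]                     # ALT may list several alleles, comma-separated
    qual: Optional[float]              # "." means missing
    filter: str
    info: Dict[str, Union[str, bool]]


def parse_vcf_line(line: str) -> Variant:
    """Parse the eight fixed columns of one VCF data line (not header lines)."""
    columns = line.rstrip("\n").split("\t")
    if len(columns) < 8:
        raise ValueError("a VCF data line has at least 8 tab-separated columns")
    chrom, pos, var_id, ref, alt, qual, flt, info = columns[:8]
    info_fields: Dict[str, Union[str, bool]] = {}
    if info != ".":
        for entry in info.split(";"):
            key, sep, value = entry.partition("=")
            info_fields[key] = value if sep else True  # flags carry no "=value"
    return Variant(chrom, int(pos), var_id, ref, alt.split(","),
                   None if qual == "." else float(qual), flt, info_fields)


# Invented example line: two alternate alleles, a depth value, and a flag.
print(parse_vcf_line("chr1\t12345\t.\tA\tG,T\t50\tPASS\tDP=30;DB\n"))
```

Because every compliant file puts the same information in the same columns, this one function works on variant calls from any lab that follows the format.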

- Genomics: This involves sequencing a patient’s DNA to identify genetic variations (polymorphisms) that might influence their response to a peptide. For example, a variation in the gene for the Growth Hormone Releasing Hormone (GHRH) receptor could influence how well a patient responds to Sermorelin. Standardizing how these variations are identified and reported is essential for discovering such connections.

- Transcriptomics: This measures which genes are being actively expressed and to what degree. It provides a dynamic snapshot of cellular activity. In a peptide trial, transcriptomics could show how a peptide like BPC-157 alters gene expression patterns in gut tissue, providing mechanistic insight into its reported healing properties.

- Proteomics: This is the large-scale study of proteins, the body’s functional workhorses. Proteomics can directly measure the levels of the target receptor for a peptide, or it can track the downstream cascade of signaling proteins that are activated after the peptide binds. This can provide a direct measure of a drug’s pharmacodynamic effect at the molecular level.

- Metabolomics: This analyzes the profile of small molecules (metabolites) in a biological sample, such as blood or urine. A person’s metabolic signature provides a real-time readout of their physiological state. In a trial for a fat-loss peptide, metabolomics could track changes in fatty acids and ketones, offering a highly sensitive measure of the therapy’s metabolic impact.

The Critical Challenge of Global Pharmacovigilance

The need for data standardization is perhaps most acute in the domain of pharmacovigilance, the science of monitoring the safety of medicines after they have been approved. This is especially relevant for peptides because, like larger biologic drugs, they can be immunogenic. While many peptides have a favorable safety profile, some can elicit an immune response, leading to the production of anti-drug antibodies (ADAs). This immunogenicity can reduce the efficacy of the therapy or, in rare cases, cause adverse reactions.

Detecting these safety signals requires robust, long-term surveillance across a large and diverse population. This is a global undertaking, but it is currently hampered by heterogeneous national regulations and reporting formats. A safety signal might be missed because data from different countries cannot be effectively combined and analyzed.
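One widely used way to look for safety signals in pooled spontaneous reports is disproportionality analysis, for example the proportional reporting ratio (PRR): the share of a product’s reports that mention a given event, divided by that same share for all other products. The counts below are invented and the `Counts` type is a hypothetical helper; the point is that the tables from different countries can only be added together if “this event” and “this product” are coded identically everywhere.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """2x2 table of spontaneous reports for one product-event pair."""
    a: int  # reports naming this product AND this event
    b: int  # reports naming this product, other events
    c: int  # reports naming other products AND this event
    d: int  # reports naming other products, other events

    def __add__(self, other: "Counts") -> "Counts":
        # Pooling is only valid if every source codes product and event identically.
        return Counts(self.a + other.a, self.b + other.b,
                      self.c + other.c, self.d + other.d)


def prr(n: Counts) -> float:
    """Proportional reporting ratio: (a / (a + b)) / (c / (c + d))."""
    return (n.a / (n.a + n.b)) / (n.c / (n.c + n.d))


# Invented counts from two countries, each too small to say much on its own.
country_x = Counts(a=2, b=98, c=10, d=990)
country_y = Counts(a=3, b=97, c=10, d=990)
pooled = country_x + country_y
print(round(prr(pooled), 2))
```

A PRR well above 1 only flags a pairing for closer review; it does not by itself establish that the product causes the event.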

Furthermore, for peptides, traceability is a significant issue. Subtle differences in the manufacturing process between two different suppliers of the same peptide could potentially lead to different immunogenicity profiles. Without a standardized system for tracking the specific product, batch, and manufacturer associated with an adverse event report, identifying the source of a problem becomes nearly impossible.

For peptide therapies, standardized safety data is not just a matter of efficiency; it is a fundamental requirement for ensuring patient safety on a global scale.

A standardized pharmacovigilance framework, built on a platform like FHIR, would require distinct naming and coding for each specific peptide product. It would also mandate capturing the batch (lot) number of the product implicated in each adverse event; in FHIR R4, for example, an ‘AdverseEvent’ Resource can point to the suspect ‘Medication’ Resource, which records the lot number and the manufacturer. This would create a global system capable of rapidly detecting and tracing safety issues back to their source, a critical capability as more peptide therapies come to market. This unified approach is essential for building and maintaining public trust in these powerful therapeutic tools.
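A minimal sketch of that traceability, using FHIR R4 element names as we understand them (`AdverseEvent.suspectEntity.instance`, `Medication.batch.lotNumber`, `Medication.manufacturer`); other FHIR versions structure ‘AdverseEvent’ differently. The product, lot, and organization identifiers are hypothetical, and a real report would code the event with a terminology such as MedDRA rather than free text.

```python
from collections import Counter


def make_medication(med_id: str, product_text: str, manufacturer_ref: str, lot: str) -> dict:
    """Minimal FHIR R4 Medication carrying manufacturer and batch lot number."""
    return {
        "resourceType": "Medication",
        "id": med_id,
        "code": {"text": product_text},
        "manufacturer": {"reference": manufacturer_ref},
        "batch": {"lotNumber": lot},
    }


def make_adverse_event(ae_id: str, patient_id: str, event_text: str, medication_id: str) -> dict:
    """Minimal FHIR R4 AdverseEvent naming a Medication as the suspect entity."""
    return {
        "resourceType": "AdverseEvent",
        "id": ae_id,
        "actuality": "actual",  # R4 code set: actual | potential
        "subject": {"reference": f"Patient/{patient_id}"},
        "event": {"text": event_text},  # free text here; MedDRA coding in practice
        "suspectEntity": [{"instance": {"reference": f"Medication/{medication_id}"}}],
    }


def events_per_lot(adverse_events: list, medications: dict) -> Counter:
    """Count adverse events per (manufacturer, lot) via each suspect Medication."""
    counts = Counter()
    for event in adverse_events:
        for suspect in event.get("suspectEntity", []):
            resource_type, _, med_id = suspect["instance"]["reference"].partition("/")
            if resource_type == "Medication" and med_id in medications:
                med = medications[med_id]
                counts[(med["manufacturer"]["reference"], med["batch"]["lotNumber"])] += 1
    return counts


# Hypothetical products from two suppliers and three reports.
medications = {
    "m1": make_medication("m1", "Peptide X vial", "Organization/supplier-1", "LOT-A"),
    "m2": make_medication("m2", "Peptide X vial", "Organization/supplier-2", "LOT-B"),
}
reports = [
    make_adverse_event("ae1", "p1", "injection site reaction", "m1"),
    make_adverse_event("ae2", "p2", "injection site reaction", "m1"),
    make_adverse_event("ae3", "p3", "injection site reaction", "m2"),
]
print(events_per_lot(reports, medications).most_common())
```

Raw counts per lot are only a starting point: comparing lots fairly also requires knowing how many doses of each lot were actually used.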

| Omics Layer | Key Data Points for Standardization | Relevance to Peptide Therapy |
|---|---|---|
| Genomics | Gene variants (SNPs), copy number variations, HLA type. Standardized Variant Call Format (VCF). | Can help predict individual response based on receptor genetics or metabolic enzyme variants, and identify genetic predisposition to immunogenicity. |
| Transcriptomics | Gene expression levels (e.g. TPM/FPKM), differential expression analysis results. Standardized RNA-Seq processing pipelines. | Measures the direct molecular effect of a peptide on cellular gene activity, elucidating its mechanism of action. |
| Proteomics | Protein abundance levels, post-translational modifications, protein-protein interactions. Standardized mass spectrometry data formats (e.g. mzML). | Confirms target engagement (e.g. receptor activation) and maps the downstream signaling cascade. Can help characterize anti-drug antibody responses. |
| Metabolomics | Metabolite concentrations, pathway analysis results. Standardized reporting of identified compounds and their quantities. | Provides a real-time signature of the physiological effect of the therapy (e.g. changes in lipid metabolism, inflammation markers). |
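The transcriptomics row mentions TPM (transcripts per million). As a worked illustration of why the normalization method itself has to be agreed upon, this sketch computes TPM from raw read counts and feature lengths: counts are first divided by length in kilobases, then rescaled so each sample sums to one million. It is simplified; real pipelines typically use an estimated effective transcript length rather than the raw length.

```python
def tpm(counts, lengths_bp):
    """Transcripts per million from raw read counts and feature lengths in bp."""
    if len(counts) != len(lengths_bp):
        raise ValueError("counts and lengths must have the same length")
    # Step 1: reads per kilobase, which removes the bias toward long genes.
    rates = [c / (length / 1000.0) for c, length in zip(counts, lengths_bp)]
    total = sum(rates)
    # Step 2: rescale so the values in one sample sum to one million.
    return [r / total * 1e6 for r in rates]


# Two hypothetical genes with equal read counts; the second is twice as long,
# so it ends up with half the TPM of the first.
print(tpm([100, 100], [1000, 2000]))
```

If one lab reported TPM and another FPKM for the same samples, the numbers would not be directly comparable, which is exactly the kind of mismatch a shared processing standard prevents.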

Ultimately, the convergence of multi-omics technology and standardized data formats represents a new paradigm for therapeutic development. It allows science to move beyond population averages and into the realm of true personalization. For peptide therapies, which are designed to interact with the body’s most sensitive and nuanced signaling pathways, this level of precision is paramount.

Building the international infrastructure to support this requires a collaborative commitment to a common data language. It is a complex undertaking, involving technical, regulatory, and logistical challenges. The potential reward, a future where therapeutic protocols are precisely tailored to an individual’s unique biology, justifies the effort.


Reflection

Your Biology Is Your Story

The information presented here, from the function of a single peptide to the architecture of global data networks, ultimately points back to you. Your body is a dynamic system, a story being written in a biological language of hormones, neurotransmitters, and signaling molecules.

The symptoms you feel are your body’s way of communicating chapters of that story. The science of personalized medicine is, at its heart, an effort to learn to read that language with perfect fluency. The protocols and therapies discussed are tools to help you become an active author of your own story of health and vitality.

Consider the data of your own life. The daily fluctuations in your energy, the quality of your sleep, the food you eat, your response to stress, the results of your blood tests. Each point is a piece of information. When viewed in isolation, they may seem random.

When seen together, patterns begin to form. This is precisely the logic that drives the push for data standardization on a global scale. It is about seeing the larger patterns in the human story of health. As science develops more powerful tools to read this data, you are empowered to understand your own unique biology with greater clarity.

This knowledge is the first and most critical step on any path to reclaiming your optimal function. What is your biology telling you today?