Fundamentals

You consent to share your data with a wellness application, believing your contribution to be anonymous. This action comes from a place of wanting to understand your own body better and perhaps contribute to a larger scientific understanding of health.

Your lived experience, the daily fluctuations in energy, sleep quality, and physical performance, feels like a stream of personal information you are directing toward a greater good. The core premise is that by removing your name and direct identifiers, your data dissolves into a vast, impersonal ocean of information.

This premise deserves a closer, more scientific examination. The truth is that the very data points you share (your heart rate’s rhythm, your sleep’s architecture, the subtle shifts in your metabolic function) tell a story. This story is written in a biological language so specific and so deeply interconnected that it functions as a unique signature. Your biology, in its magnificent complexity, may be its own identifier.

The concept of “anonymization” in the context of health data is itself a complex technical process. At a basic level, it involves stripping a dataset of explicit personal identifiers. This includes your name, address, and Social Security number.

The resulting data is considered “de-identified.” Many data privacy regulations, such as the US Health Insurance Portability and Accountability Act (HIPAA), define standards for this process. The goal is to create a dataset where, in theory, no single entry can be traced back to a specific person.

This is often achieved through methods like generalization, where a specific data point like an exact age is replaced with a broader category, or suppression, where a piece of information is removed entirely. These techniques aim to break the direct link between the data and your identity, making the information safe for research or analysis.
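As a rough illustration of these two techniques, the sketch below suppresses direct identifiers and generalizes an exact age into a five-year band. The field names, the band width, and the `deidentify` helper are assumptions made for this example; they do not come from any regulation or from a specific app.

```python
from typing import Dict, List


def generalize_age(age: int, width: int = 5) -> str:
    """Replace an exact age with a band such as '40-44' (generalization)."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def deidentify(record: Dict[str, object], suppress: List[str]) -> Dict[str, object]:
    """Drop the listed fields (suppression) and coarsen the age field."""
    out = {key: value for key, value in record.items() if key not in suppress}
    if "age" in out:
        out["age"] = generalize_age(int(out["age"]))
    return out


# Hypothetical record; every value here is made up for illustration.
record = {"name": "Jane Doe", "address": "1 Main St", "age": 42, "resting_hr": 58}
cleaned = deidentify(record, suppress=["name", "address"])
print(cleaned)  # {'age': '40-44', 'resting_hr': 58}
```

Note that `resting_hr` passes through untouched. The explicit identifiers are gone, but the physiological measurement itself is exactly as detailed as before.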

However, your body’s internal systems create a narrative that is far more detailed than simple demographic data. Consider the endocrine system, the body’s intricate network of glands and hormones. It operates on a series of feedback loops, a constant conversation between your brain, your organs, and your cells.

The Hypothalamic-Pituitary-Gonadal (HPG) axis, for example, governs reproductive function and steroid hormone production, including testosterone and estrogen. Its function is reflected in everything from a woman’s menstrual cycle, tracked with precision by many wellness apps, to a man’s energy levels and response to exercise. These are not isolated data points.

They are chapters in a continuous biological story. A wellness app tracking your sleep patterns is also inadvertently tracking the nightly ebb and flow of cortisol and growth hormone. An app monitoring your heart rate variability is capturing a direct output of your autonomic nervous system, which is itself modulated by your hormonal state.

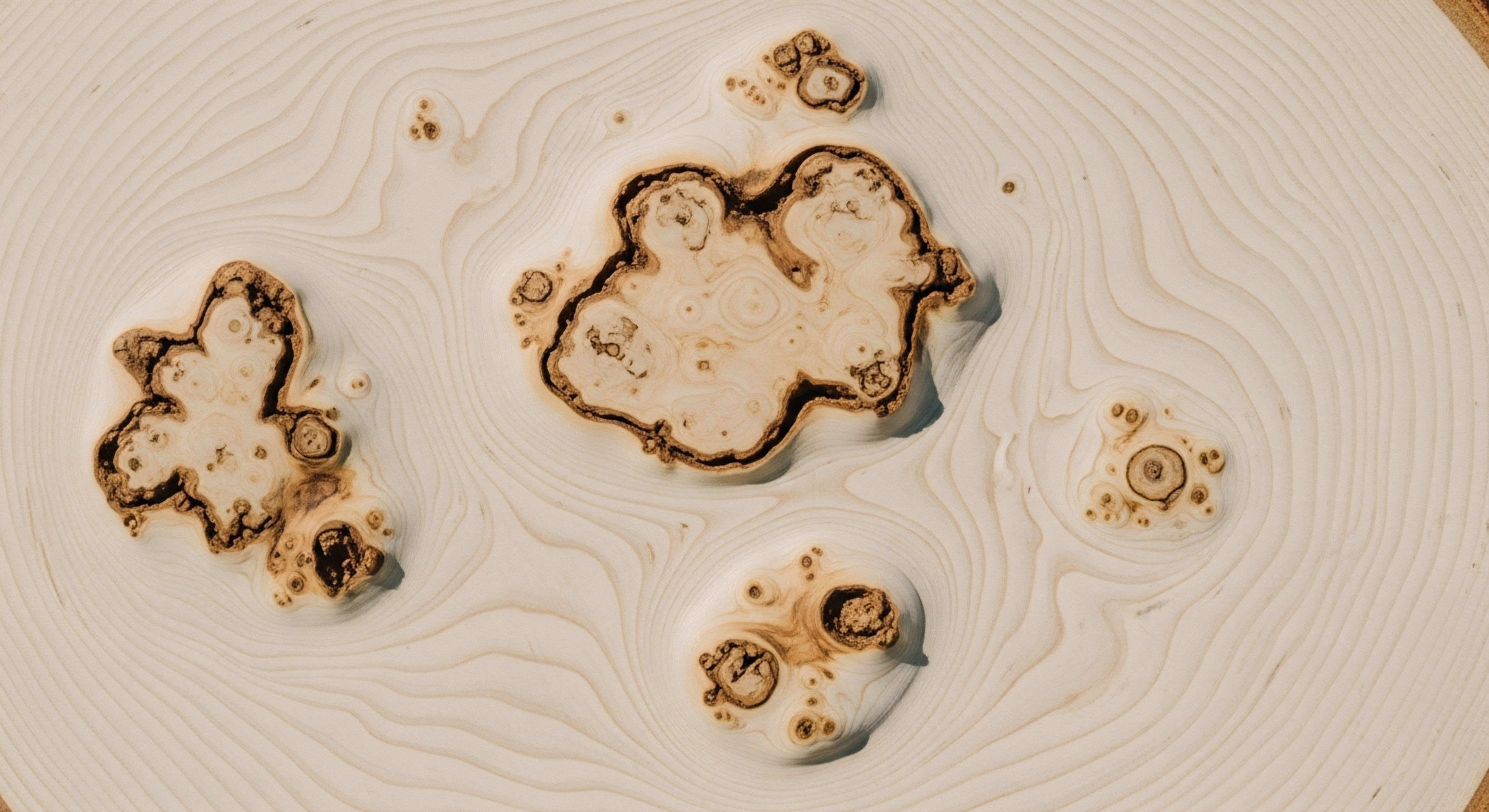

This rich, interconnected data stream forms a physiological fingerprint. Each person’s combination of these patterns, the unique way their systems interact and respond, creates a signature that is exceptionally difficult to erase, even after names and addresses are removed.

The Allure of Your Biological Story

The reason this data is so sought after is precisely because of its richness and specificity. It holds the key to developing the very personalized wellness protocols many users seek. Researchers and technology companies analyze this information to find patterns, to understand how different bodies respond to different inputs, and to build algorithms that can predict health outcomes or suggest interventions.

For instance, by analyzing thousands of users’ glucose and activity data, an app can refine its recommendations for stabilizing blood sugar. By examining sleep data correlated with user-reported stress levels, developers can improve features designed to promote recovery and resilience. This pursuit of personalization is a powerful engine for data collection.

The objective is to move beyond generic advice and offer guidance tailored to an individual’s unique physiology. The paradox is that the more personalized the potential benefit, the more personal and potentially identifiable the data required to achieve it becomes.

Your physiological data tells a story so unique that it may act as its own form of identification.

The distinction between anonymized and pseudonymized data is a critical one in this context. While anonymization seeks to permanently sever the link to an individual, pseudonymization replaces direct identifiers with a consistent but artificial one, a “pseudonym.” This allows a user’s data to be linked over time without revealing their real-world identity.

This is common in wellness apps where you have a user profile that tracks your progress. While your name might be hidden from researchers, your data is still aggregated under a single, unique ID. This longitudinal data is immensely valuable, as it shows trends and changes over time. Yet, it also preserves the integrity of your unique biological signature, making the complete dataset a more coherent and thus more potentially re-identifiable target.
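A minimal sketch of pseudonymization, assuming the operator derives the pseudonym with a keyed hash (HMAC-SHA256). The key, the 16-character truncation, and the sample readings are hypothetical; real systems may use a lookup table or a different scheme entirely.

```python
import hashlib
import hmac

# Hypothetical secret held by the app operator. Anyone who holds it can
# recompute the mapping from a real identifier to its pseudonym.
SECRET_KEY = b"example-key-not-for-production"


def pseudonymize(user_id: str, key: bytes = SECRET_KEY) -> str:
    """Map a real identifier to a stable, artificial one."""
    digest = hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


# Made-up readings: (account email, date, resting heart rate).
readings = [
    ("jane@example.com", "2024-01-01", 58),
    ("jane@example.com", "2024-01-02", 61),
    ("sam@example.com", "2024-01-01", 72),
]

# The email is replaced, but rows from the same person still share one ID,
# so the longitudinal pattern stays intact.
pseudonymized = [(pseudonymize(uid), day, hr) for uid, day, hr in readings]
for row in pseudonymized:
    print(row)
```

The first two rows carry the same pseudonym, which is what makes trend analysis possible and also what keeps one person's signal together in a single, linkable trace.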

What Makes Your Hormonal Data a Fingerprint?

To appreciate the identifying power of your health data, one must understand the interconnectedness of the endocrine system. Your hormonal milieu is a dynamic system, a symphony of chemical messengers that dictates function across your entire body. It is not a collection of independent variables. It is a web of cause and effect.

- The HPG Axis in Action: For a woman, the monthly cycle of estrogen and progesterone creates a predictable, yet highly individual, pattern of physiological changes. These are reflected in basal body temperature, heart rate variability, sleep architecture, and even mood and energy levels logged in an app. For a man undergoing Testosterone Replacement Therapy (TRT), the protocol of weekly injections creates a distinct sawtooth pattern in his hormonal levels, which in turn influences his metabolic rate, recovery, and other trackable metrics.

- The HPA Axis and Stress: The Hypothalamic-Pituitary-Adrenal (HPA) axis governs your stress response via the hormone cortisol. An app that tracks your sleep, readiness score, or perceived stress is capturing data points that are direct reflections of your HPA axis function. The way your body responds to a workout, a stressful day, or a poor night’s sleep is a unique characteristic of your HPA tone.

- Metabolic Signatures: Continuous glucose monitors (CGMs), once a tool for diabetics, are now a popular wellness device. The data they generate is a real-time graph of your body’s response to food, exercise, stress, and sleep. Your specific glucose curve after a particular meal, combined with your heart rate response during a workout, adds another layer of high-fidelity detail to your biological signature.

When these streams of data are combined, they do not simply add up; they multiply in their descriptive power. The pattern of your menstrual cycle, overlaid with your unique glucose response to oatmeal, combined with your specific heart rate variability during REM sleep, creates a multi-dimensional data portrait.

It is this portrait, this intricate and specific biological story, that challenges the very concept of anonymity. The data is valuable because it is personal. And because it is personal, it risks remaining identifiable.

Intermediate

Understanding that your physiological data creates a unique signature is the first step. The next is to comprehend the specific mechanisms through which this “anonymized” signature can be re-linked to your actual identity. This process is not theoretical; it is a practical application of data science and logical deduction.

The vulnerabilities lie within the anonymization techniques themselves and the ever-expanding universe of publicly available information. An attacker does not need to guess your identity randomly from a pool of billions. They use your own data as a key to unlock other, more explicit, sources of information about you.

The re-identification process is a form of triangulation, using the unique patterns in your wellness data to find a matching signal in a different dataset that still contains your name.

The most common method is known as a linkage attack. Imagine a wellness app has published a de-identified dataset for research. This dataset contains detailed second-by-second accelerometer data, heart rate, and sleep stages for thousands of users. Your name is gone, but your data is there.

Now, consider another dataset, one that is publicly or semi-publicly available. This could be your Strava or Garmin Connect profile where you log your runs, complete with GPS maps. It could be your public social media posts where you mention completing a 10k race on a specific date.

An attacker can take the unique pattern of your heart rate and movement from the “anonymous” wellness dataset and correlate it with the GPS and time data from your public running profile. The two patterns will match. Once the match is made, the attacker has linked the anonymous data, which may contain sensitive health insights, to your public profile, which contains your name and photo. The bridge is built, and your anonymity is compromised.
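The matching step can be sketched in a few lines. The example below assumes the two sources are already aligned in time and sampled at the same rate, and it uses plain Pearson correlation as the similarity score. The pseudonymous IDs, the heart rate values, and the public speed trace are all invented for illustration; a real attack would need to handle time alignment, noise, and far more candidates.

```python
from math import sqrt
from typing import Dict, List, Tuple


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0
    return cov / (sd_x * sd_y)


def best_match(public_trace: List[float],
               anonymous: Dict[str, List[float]]) -> Tuple[str, float]:
    """Return the pseudonymous ID whose series best tracks the public trace."""
    scores = {uid: pearson(public_trace, series) for uid, series in anonymous.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# "Anonymous" heart rate (bpm) per pseudonymous user, one value per time slot.
anonymous_hr = {
    "u_17": [62, 95, 140, 155, 150, 110, 70],
    "u_42": [60, 61, 63, 90, 120, 118, 65],
    "u_88": [70, 72, 71, 69, 70, 73, 71],
}

# Running speed (km/h) taken from a named public profile for the same slots.
public_speed = [0, 6, 11, 12, 12, 7, 0]

match_id, score = best_match(public_speed, anonymous_hr)
print(match_id, round(score, 2))
```

In this toy data the heart rate of `u_17` rises and falls with the public running speed, so that record is selected. Whatever else is stored under `u_17` is now attached to a named profile.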

The Fragility of Quasi-Identifiers

This linkage is often facilitated by “quasi-identifiers.” These are pieces of information that are not, by themselves, unique identifiers but can become so when combined. HIPAA’s Safe Harbor method of de-identification allows for the retention of certain data points, like state, the first three digits of a ZIP code (for sufficiently populous areas), and dates of birth generalized to a year.

A landmark study by Latanya Sweeney estimated that 87% of the US population could be uniquely identified using just three quasi-identifiers: a 5-digit ZIP code, gender, and full date of birth. While modern standards are more stringent, the principle remains. A 2019 study (Rocher et al.) estimated that 99.98% of Americans could be correctly re-identified in any dataset using just 15 demographic attributes.

When you add the rich, high-fidelity time-series data from a wellness app to this mix, the potential for re-identification increases substantially.
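To see how combining quasi-identifiers drives uniqueness, the sketch below counts the share of records that are the only one with their particular combination of values. The six records and the `unique_fraction` helper are hypothetical and far too small to say anything about a real population; they only show the mechanism.

```python
from collections import Counter
from typing import Dict, List


def unique_fraction(records: List[Dict[str, str]], quasi_identifiers: List[str]) -> float:
    """Share of records whose quasi-identifier combination appears exactly once."""
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    counts = Counter(keys)
    return sum(1 for k in keys if counts[k] == 1) / len(keys)


# Made-up de-identified records: no names, only quasi-identifiers.
records = [
    {"zip": "02139", "gender": "F", "dob": "1980-03-14"},
    {"zip": "02139", "gender": "F", "dob": "1980-07-02"},
    {"zip": "02139", "gender": "M", "dob": "1975-11-30"},
    {"zip": "02139", "gender": "M", "dob": "1975-11-30"},
    {"zip": "02140", "gender": "F", "dob": "1991-01-09"},
    {"zip": "02140", "gender": "M", "dob": "1991-01-09"},
]

for fields in (["zip"], ["zip", "gender"], ["zip", "gender", "dob"]):
    print(fields, round(unique_fraction(records, fields), 2))
```

No record is unique on ZIP code alone, a third are unique on ZIP plus gender, and two thirds are unique once date of birth is added. Each additional attribute splits the groups further.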

The very nature of time-series data from wearables makes it a powerful quasi-identifier. Your gait, the way you walk, is captured by the accelerometer in your phone or watch and is nearly as unique as a fingerprint. The pattern of your heart rate recovery after identical workouts over several months is a highly personal metric.

These are not static numbers; they are dynamic signatures. A 2021 systematic review of studies on wearable data found that correct identification rates were typically between 86% and 100%, indicating a very high risk of re-identification. In some cases, as little as one to five minutes of sensor data was required to identify an individual. This shows that the raw sensor data itself, even without explicit demographic information, can serve as a potent key for linkage attacks.

A linkage attack can cross-reference “anonymous” wellness data with public information, using your unique biological patterns as the key to unlock your identity.

Let’s consider the specific clinical protocols that inform the data you might generate. If you are a woman using an app to track your perimenopausal symptoms while on low-dose testosterone therapy, your data stream is exceptionally unique. It includes specific cycle data, user-logged symptoms like hot flashes or mood changes, and physiological responses to the hormonal therapy.

If you are a man on a TRT protocol involving weekly Testosterone Cypionate injections and a Gonadorelin regimen to maintain natural function, your body’s response creates a distinct weekly metabolic and hormonal signature. This signature, reflected in your sleep data, recovery scores, and workout performance, is far from generic.

An attacker with even a sliver of background knowledge, perhaps knowing you are on a wellness protocol, can use this highly specific pattern to filter and identify you from a sea of “anonymous” users.

How Can Anonymized Data Be Traced?

The technical methods used to “anonymize” data have inherent limitations. Understanding these limitations is key to appreciating the true level of risk. The table below outlines common de-identification techniques and their associated vulnerabilities to re-identification attacks.

| De-Identification Technique | Description | Vulnerability |
|---|---|---|
| Suppression | Entire data fields or records are removed from the dataset. For example, removing the ‘zip code’ column entirely. | Can severely reduce the utility of the data for analysis. If not applied to enough fields, remaining quasi-identifiers can still be used for linkage. |
| Generalization | Specific data points are replaced with broader categories. For instance, an age of ‘42’ becomes ‘40-45’. | Reduces data precision. An attacker can still perform linkage attacks if the generalized categories, when combined, are sufficiently unique. |
| Pseudonymization | Direct identifiers are replaced with a consistent but fake identifier (a pseudonym). The same user has the same pseudonym across the dataset. | The link between the user and their data is preserved, which is useful for longitudinal analysis but also preserves the unique biological signature, making it a prime target for linkage. |
| Perturbation | Slight modifications or noise are added to the data points to reduce their precision while maintaining statistical properties. | It can be difficult to add enough noise to guarantee privacy without destroying the data’s value for subtle analysis, like tracking hormonal fluctuations. |

The Role of High-Risk Data Points

Certain types of data collected by wellness apps carry a higher intrinsic risk of re-identification. Their specificity and longitudinal nature make them powerful signatures. Recognizing these high-risk data streams is essential for a realistic assessment of your privacy.

- Continuous Glucose Monitoring (CGM) Data: A CGM provides a near-continuous stream of your blood glucose levels. Your specific metabolic response to meals, exercise, and stress is a high-fidelity signature that is deeply personal.

- Detailed Menstrual Cycle Data: Tracking cycle length, symptoms, and basal body temperature creates a unique hormonal and physiological timeline specific to an individual, especially when combined with other metrics.

- Heart Rate Variability (HRV): While HRV is a common metric, your specific baseline, your response to stressors, and your overnight HRV pattern form a distinctive profile that reflects your autonomic nervous system’s unique tone.

- GPS-Tagged Activity Data: The routes you run or walk, the times you are active, and the locations you frequent are incredibly powerful identifiers. Linking this to any other piece of data almost guarantees re-identification.

- Genomic Information: Some wellness services offer genetic testing. Even partial genomic data is a direct and permanent identifier. Its inclusion in any dataset, even one that is otherwise de-identified, presents a profound privacy risk.

The convergence of these data streams within a single wellness app creates a perfect storm for potential re-identification. The very features that make these applications powerful tools for personal health optimization are the same features that make your “anonymized” data a fragile shield. The story your biology tells is simply too specific, and the tools to listen to that story are becoming more powerful every day.

Academic

A sophisticated analysis of re-identification risk moves beyond procedural descriptions into the mathematical and computational frameworks that govern data privacy. The foundational concept in traditional data anonymization is k-anonymity. A dataset is considered k-anonymous if, for any combination of quasi-identifying attributes, every record is indistinguishable from at least k-1 other records.

For example, in a 5-anonymous dataset, if an attacker knows a person’s age range, gender, and zip code, they would find at least five people in the dataset matching that description, theoretically preventing them from singling out their target. This model, while logically sound, is built on an assumption that proves to be its critical vulnerability: that preventing identification is sufficient to protect privacy.
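Checking k-anonymity amounts to grouping records by their quasi-identifier values and finding the smallest group. The sketch below does that for a handful of invented records; the field names and the `k_anonymity` helper are assumptions for illustration.

```python
from collections import Counter
from typing import Dict, List


def k_anonymity(records: List[Dict[str, str]], quasi_identifiers: List[str]) -> int:
    """Return k: the size of the smallest group sharing the same quasi-identifiers."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


# Made-up, already generalized records.
records = [
    {"age": "40-44", "gender": "F", "zip": "021**", "diagnosis": "hypothyroidism"},
    {"age": "40-44", "gender": "F", "zip": "021**", "diagnosis": "hypothyroidism"},
    {"age": "40-44", "gender": "M", "zip": "021**", "diagnosis": "type 2 diabetes"},
    {"age": "40-44", "gender": "M", "zip": "021**", "diagnosis": "hypertension"},
    {"age": "40-44", "gender": "M", "zip": "021**", "diagnosis": "none"},
]

k = k_anonymity(records, ["age", "gender", "zip"])
print(k)  # 2
```

The value of k depends entirely on which columns are treated as quasi-identifiers. Here the dataset is 2-anonymous on age, gender, and zip code, but any column left out of that list (such as a detailed sensor trace) is not covered by the guarantee at all.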

This assumption collapses in the face of two specific attacks. The first is the homogeneity attack. If the k individuals in an indistinguishable group all share the same sensitive attribute (for instance, if all five people with a given zip code and age range also have the same medical diagnosis), then an attacker who can identify the group has effectively learned the sensitive information of their target.

The second, more subtle vulnerability is the background knowledge attack. An attacker often possesses external information. If they know their target’s quasi-identifiers and also know something that narrows the possibilities for the sensitive attribute (e.g., “my target has a very low risk for heart disease”), they can use this to eliminate possibilities within the k-anonymous group, potentially isolating their target’s true record. These limitations demonstrated that a more robust privacy definition was required, one that considered the diversity of the sensitive data itself.

From Anonymity to Diversity

This led to the development of l-diversity, a principle stating that every equivalence class (the group of k-indistinguishable records) must contain at least ‘l’ well-represented values for the sensitive attribute. This directly counters the homogeneity attack by ensuring there is ambiguity in the sensitive data.
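The sketch below measures the simplest variant, often called distinct l-diversity, which just counts different sensitive values per equivalence class. Stricter readings of “well-represented” exist and are not implemented here. The records and the `distinct_l` helper are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def distinct_l(records: List[Dict[str, str]],
               quasi_identifiers: List[str],
               sensitive: str) -> int:
    """Smallest number of distinct sensitive values found in any equivalence class."""
    classes = defaultdict(set)
    for r in records:
        classes[tuple(r[q] for q in quasi_identifiers)].add(r[sensitive])
    return min(len(values) for values in classes.values())


# Made-up records: 2-anonymous on (age, gender), yet one class is homogeneous.
records = [
    {"age": "40-44", "gender": "F", "diagnosis": "hypothyroidism"},
    {"age": "40-44", "gender": "F", "diagnosis": "hypothyroidism"},
    {"age": "40-44", "gender": "M", "diagnosis": "type 2 diabetes"},
    {"age": "40-44", "gender": "M", "diagnosis": "hypertension"},
    {"age": "40-44", "gender": "M", "diagnosis": "none"},
]

l_value = distinct_l(records, ["age", "gender"], "diagnosis")
print(l_value)  # 1
```

A result of 1 flags the homogeneity problem directly: anyone known to be a woman aged 40 to 44 in this dataset has hypothyroidism, even though no single row can be pinned to her.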

However, l-diversity also has limitations. It can be difficult to achieve without significant data distortion, and it is vulnerable to skewness attacks. If one sensitive value in an l-diverse group is very common and the others are rare, an attacker can still infer information with high probability.

This led to a further refinement, t-closeness, which requires that the distribution of a sensitive attribute within any equivalence class be close (within a threshold ‘t’) to the attribute’s distribution in the overall dataset. Each of these models represents a step forward, but they are all attempts to patch a “release-and-forget” model of data sharing that is fundamentally challenged by high-dimensional data.
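One way to make “close” concrete is to compute a distance between each class’s distribution and the overall one, then take the worst case. The sketch below uses total variation distance, which is a reasonable stand-in for a categorical attribute when all values are treated as equally far apart; other distance measures are used in practice. The records and helper names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def distribution(values: List[str]) -> Dict[str, float]:
    """Relative frequency of each value."""
    counts = Counter(values)
    return {value: count / len(values) for value, count in counts.items()}


def total_variation(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Half the sum of absolute differences between two distributions."""
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


def max_class_distance(records: List[Dict[str, str]],
                       quasi_identifiers: List[str],
                       sensitive: str) -> float:
    """Largest distance between any class's distribution and the overall one."""
    overall = distribution([r[sensitive] for r in records])
    classes = defaultdict(list)
    for r in records:
        classes[tuple(r[q] for q in quasi_identifiers)].append(r[sensitive])
    return max(total_variation(distribution(v), overall) for v in classes.values())


# Made-up records with two equivalence classes.
records = [
    {"age": "40-44", "diagnosis": "flu"},
    {"age": "40-44", "diagnosis": "flu"},
    {"age": "45-49", "diagnosis": "flu"},
    {"age": "45-49", "diagnosis": "diabetes"},
]

t_observed = max_class_distance(records, ["age"], "diagnosis")
print(t_observed)  # 0.25
```

Under this measure the toy dataset would satisfy t-closeness only for thresholds of 0.25 or higher. Tightening the threshold forces more generalization, which is where the utility loss comes from.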

Differential privacy offers a mathematical guarantee of privacy by adding calibrated statistical noise, protecting individual data points from being isolated.

The modern and more mathematically rigorous approach is differential privacy. Differential privacy is a property of a query or algorithm, not a dataset. It provides a formal guarantee that the output of an analysis will be roughly the same, whether or not any single individual’s data is included in the input.

This is achieved by injecting a carefully calibrated amount of statistical noise into the results of a query. The amount of noise is controlled by a privacy parameter, epsilon (ε). A smaller epsilon provides more privacy (more noise) but less accuracy, while a larger epsilon provides more accuracy but less privacy.
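The trade-off can be seen with the Laplace mechanism, one common way of adding such noise to a counting query. This is a minimal sketch on synthetic data, not a production implementation: the heart rate values, the query, and the helper names are assumptions, and real deployments need care around floating-point behavior and privacy budget accounting that is omitted here.

```python
import random
from typing import Callable, List


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(values: List[int], predicate: Callable[[int], bool],
             epsilon: float, rng: random.Random) -> float:
    """Noisy count of values matching the predicate."""
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0  # one person joining or leaving changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
resting_hr = [rng.randint(45, 90) for _ in range(500)]  # synthetic users


def is_low(hr: int) -> bool:
    return hr < 55


def mean_abs_error(epsilon: float, trials: int = 2000) -> float:
    """Average distance between the noisy answer and the true count."""
    true_count = sum(1 for hr in resting_hr if is_low(hr))
    errors = [abs(dp_count(resting_hr, is_low, epsilon, rng) - true_count)
              for _ in range(trials)]
    return sum(errors) / trials


err_small_eps = mean_abs_error(0.1)  # more privacy, more noise
err_large_eps = mean_abs_error(2.0)  # less privacy, less noise
print(round(err_small_eps, 2), round(err_large_eps, 2))
```

The average error is roughly the noise scale, 1/ε for a count, so the answer at ε = 0.1 is typically off by around ten users while the answer at ε = 2.0 is off by about half a user.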

This framework protects against linkage and differencing attacks because an attacker cannot learn anything meaningful about a specific individual by observing the output, as that individual’s contribution is drowned out by the statistical noise. It is the current gold standard in privacy-preserving data analysis, used by entities like the U.S. Census Bureau and Apple.
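The guarantee can be stated formally. The notation below is introduced here and is not part of the original text: $M$ is a randomized algorithm, $D$ and $D'$ are any two datasets that differ in one individual’s data, and $S$ is any set of possible outputs. Under those assumptions, the standard definition of ε-differential privacy reads:

```latex
\Pr[\,M(D) \in S\,] \;\le\; e^{\varepsilon} \cdot \Pr[\,M(D') \in S\,]
```

When ε is close to 0, the factor $e^{\varepsilon}$ is close to 1, so the two probabilities are nearly equal and the output reveals very little about whether any one person’s data was included.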

Which Privacy Models Are Most Robust?

The evolution of privacy models reflects an ongoing arms race between data utility and data security. The following table provides a comparative analysis of these key privacy frameworks, highlighting their progression and specific applications in the context of health data.

| Privacy Model | Core Principle | Strength | Weakness |
|---|---|---|---|
| k-Anonymity | Each record is indistinguishable from at least k-1 others based on quasi-identifiers. | Simple to conceptualize and implement. Prevents direct re-identification through linkage on quasi-identifiers. | Vulnerable to homogeneity and background knowledge attacks. Does not protect the sensitive attributes themselves. |
| l-Diversity | Each equivalence class must contain at least ‘l’ distinct sensitive values. | Directly mitigates homogeneity attacks by ensuring diversity within the sensitive attribute column. | Can be difficult to achieve and is vulnerable to skewness and similarity attacks. The semantic meaning of the data is not considered. |
| t-Closeness | The distribution of the sensitive attribute in any equivalence class must be close to its distribution in the overall dataset. | Protects against attribute disclosure by mitigating skewness and similarity attacks. Preserves statistical properties. | Can be overly restrictive, leading to significant data utility loss. Computationally complex to implement effectively. |
| Differential Privacy | The output of any analysis remains nearly identical with or without the inclusion of any single individual’s data. | Provides a provable mathematical guarantee of privacy, resistant to linkage attacks based on future knowledge. | Requires a trusted data curator. The utility-privacy trade-off (epsilon) must be carefully managed. Can reduce accuracy of results. |

The Endocrine System as a High-Dimensional Identifier

The ultimate challenge to anonymization comes from the nature of physiological data itself. From a systems biology perspective, the human body is a high-dimensional system. The data collected by a modern wellness app is a projection of this system’s state over time.

The endocrine system, in particular, functions as a master controller, creating complex, non-linear correlations between dozens of measurable outputs. The Hypothalamic-Pituitary-Adrenal (HPA), Hypothalamic-Pituitary-Gonadal (HPG), and Hypothalamic-Pituitary-Thyroid (HPT) axes do not operate in isolation. They are coupled systems. A perturbation in one, such as the administration of a Growth Hormone Peptide like Sermorelin or the natural fluctuations of perimenopause, creates ripples across the others.

This results in a time-series dataset with immense dimensionality and autocorrelation. Your heart rate variability is not independent of your sleep stages; both are influenced by your cortisol rhythm. Your glucose response is not independent of your menstrual cycle; both are influenced by estrogen and progesterone.

When an attacker has access to multiple, synchronous data streams from a single individual, they are not just looking at a list of numbers. They are observing a dynamic system. Re-identification becomes a problem analogous to system identification in engineering: given a set of outputs, can one infer the unique parameters of the system that generated them?

Research has shown that very short segments of physiological data, capturing these unique systemic interactions, are sufficient for accurate re-identification. The more data streams an app collects (from movement to sleep to metabolism), the more completely it captures the state of your unique biological system, and the more indelible your signature becomes. This intrinsic, high-dimensional uniqueness of human physiology is the final and most formidable barrier to true, lasting anonymization.
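A toy simulation shows how quickly uniqueness grows with the number of streams. It assumes 1,000 synthetic users, each described by up to six streams that have been coarsely bucketed into ten levels and drawn independently at random. Independence is a deliberate simplification: real physiological streams are correlated, as described above, so actual numbers would differ.

```python
import random
from collections import Counter
from typing import List


def unique_fraction(users: List[List[int]], n_streams: int) -> float:
    """Share of users whose first n_streams bucketed values match no one else's."""
    keys = [tuple(u[:n_streams]) for u in users]
    counts = Counter(keys)
    return sum(1 for k in keys if counts[k] == 1) / len(keys)


rng = random.Random(0)
N_USERS, N_STREAMS, N_LEVELS = 1000, 6, 10  # all three are arbitrary choices

# Each synthetic user is a list of bucketed stream values (0..9).
users = [[rng.randrange(N_LEVELS) for _ in range(N_STREAMS)] for _ in range(N_USERS)]

fractions = [unique_fraction(users, d) for d in range(1, N_STREAMS + 1)]
for d, fraction in enumerate(fractions, start=1):
    print(d, round(fraction, 3))
```

With one or two streams almost nobody stands out, because a thousand users share at most a hundred combinations. By six streams there are a million possible combinations and nearly every user occupies one alone, even though each individual measurement was heavily coarsened.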

References

- Rocher, L., Hendrickx, J. M., & de Montjoye, Y.-A. (2019). Estimating the success of re-identifications in incomplete datasets using generative models. Nature Communications, 10(1), 3069.

- Shokri, R., & Shmatikov, V. (2015). Privacy-Preserving Deep Learning. In Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security (pp. 1310-1321).

- Machanavajjhala, A., Kifer, D., Gehrke, J., & Venkitasubramaniam, M. (2007). l-Diversity: Privacy Beyond k-Anonymity. ACM Transactions on Knowledge Discovery from Data, 1(1), 3.

- El Emam, K., & Dankar, F. K. (2014). Practicing differential privacy in health care: a review. Journal of the American Medical Informatics Association, 21(e1), e12-e21.

- Na, L., Yang, C., Lo, C. C., Zhao, F., Fukuoka, Y., & Vittinghoff, E. (2018). Feasibility of re-identifying individuals in large national physical activity data sets from wearable sensors. JAMA Network Open, 1(8), e186040.

Reflection

The information presented here provides a map of the complex territory where your personal health data meets the digital world. You began this exploration with a question about anonymity, and you now possess a deeper scientific vocabulary to frame that question.

You understand that your body’s data tells a unique and intricate story, one whose authorship is difficult to erase. You see the mechanisms through which this story can be traced and the mathematical frameworks designed to protect it. This knowledge is the foundational element of true agency over your personal information.

Where Does Your Personal Health Journey Go from Here?

This understanding is not an end point. It is a new beginning. It shifts the conversation from a simple “yes or no” on data sharing to a more sophisticated set of considerations. How is this data protected? What privacy models are in place? What is the specific value proposition for sharing this intimate biological narrative?

The answers will vary, and your comfort level is a personal calibration. The path forward involves holding two concepts in balance: the immense potential of personalized data to optimize your health and the profound importance of safeguarding your digital self. Your biology is unique. Your health journey is your own. The decisions you make about the data that describes them should be equally personal and just as informed.
