Fundamentals

Wellness programs frequently invite us to share intimate physiological details, often with the promise of optimized health protocols. Naturally, we wonder how secure our most personal health information is, especially when a program promises insights into our unique biological rhythms.

This desire for privacy, particularly where the intricate workings of our internal systems are concerned, is a valid and deeply human concern. Understanding the journey of your biological data, from collection to its potential uses, is a foundational step toward informed engagement and reclaiming vitality.

When a wellness program collects health data, it typically applies a process known as anonymization. This procedure involves removing or masking direct identifiers, such as names and addresses, from datasets. The intention is to safeguard individual privacy while still enabling the data’s utility for broader analysis and research. However, true and permanent anonymity presents a complex challenge, particularly when dealing with the highly specific and interconnected nature of human biology.

Anonymization seeks to protect individual privacy by removing direct identifiers from health data, allowing for broader analytical utility.

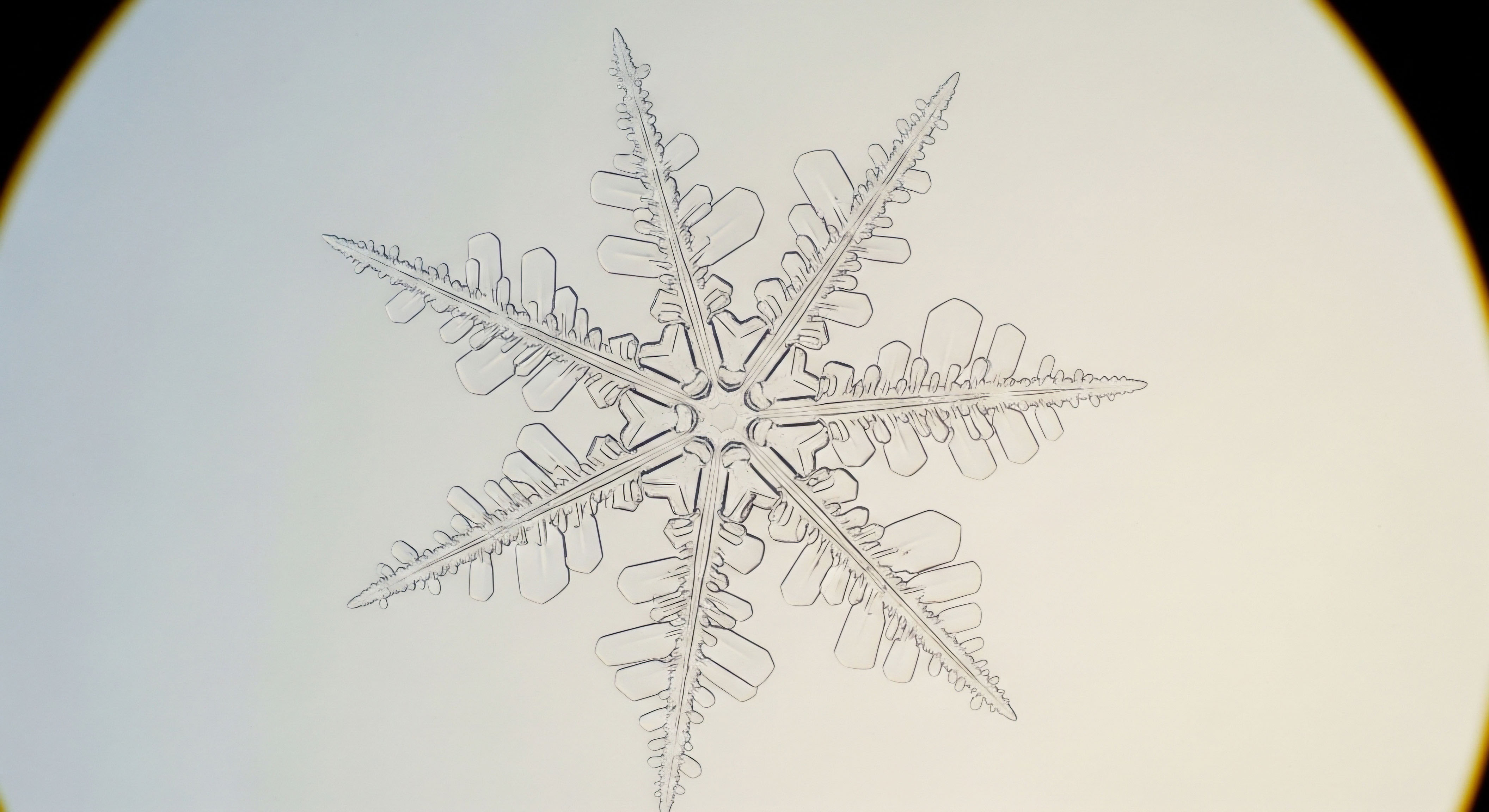

The endocrine system, a sophisticated network of glands and hormones, orchestrates nearly every bodily function, from metabolism to mood, growth to reproduction. The data reflecting this system, encompassing hormone levels, metabolic markers, and even genetic predispositions, forms a unique biological signature for each individual. This signature, even when seemingly stripped of personal identifiers, possesses a distinctiveness that challenges conventional notions of data privacy. Each person’s hormonal profile, a complex interplay of biochemical signals, inherently carries a high degree of specificity.

What Constitutes Health Data Anonymization?

Health data anonymization involves several techniques designed to obscure individual identities within a dataset. Generalization replaces specific data points with broader categories, for example, converting an exact age into an age range. Perturbation adds statistical noise to data, slightly altering values while preserving overall statistical patterns.

Suppression entails removing certain sensitive data points entirely. Masking substitutes real sensitive data with fictional yet statistically equivalent information. These methods collectively aim to create a dataset usable for analysis without revealing the identities of the individuals contributing the data.
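The four techniques described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the record fields, the ten-year age bucket, the noise scale of 15, and the `Participant-0001` placeholder format are all assumptions chosen for the example, and the masking step simply swaps the name for a fictional label rather than generating statistically equivalent values.

```python
import random

def generalize_age(age, width=10):
    """Generalization: map an exact age to a range such as '40-49'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"

def perturb(value, scale, rng):
    """Perturbation: add zero-mean Gaussian noise to a numeric value."""
    return value + rng.gauss(0.0, scale)

def suppress(record, fields):
    """Suppression: return a copy of the record without the listed fields."""
    return {k: v for k, v in record.items() if k not in fields}

def mask_name(record, index):
    """Masking: replace the real name with a fictional placeholder."""
    masked = dict(record)
    masked["name"] = f"Participant-{index:04d}"
    return masked

def anonymize(record, index, rng):
    """Apply all four techniques to one (hypothetical) participant record."""
    out = mask_name(record, index)
    out = suppress(out, {"address"})
    out["age"] = generalize_age(out["age"])
    out["total_testosterone_ng_dl"] = round(
        perturb(out["total_testosterone_ng_dl"], 15.0, rng), 1
    )
    return out

# Hypothetical record; every value here is invented for illustration.
record = {
    "name": "Alex Example",
    "address": "1 Sample Street",
    "age": 47,
    "total_testosterone_ng_dl": 612.0,
}
rng = random.Random(0)  # fixed seed so the sketch is repeatable
result = anonymize(record, 1, rng)
print(result)
```

Note that the output still carries an age range and a lab value close to the original, which is exactly the residual detail that the following paragraphs identify as a re-identification risk.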

Despite these efforts, the highly granular nature of hormonal and metabolic information gathered in wellness programs introduces a persistent layer of complexity. The unique constellation of biomarkers, even when generalized, can still coalesce into a pattern recognizable as belonging to a specific individual, especially when combined with other readily available public information. The inherent uniqueness of our physiological makeup thus poses a fundamental question regarding the absolute and enduring anonymity of health data.

Intermediate

The quest for true data anonymity in wellness programs navigates a landscape filled with both technical advancements and persistent vulnerabilities. While initial anonymization methods aim to de-link data from individuals, the re-identification of seemingly anonymous health information remains a significant and documented risk. This re-identification often stems from the ability to cross-reference masked datasets with publicly available records or by combining a limited set of personal attributes, known as quasi-identifiers.

How Does Re-Identification Occur with Hormonal Data?

Linkage attacks represent a primary method for re-identifying individuals. Adversaries combine anonymized datasets with external public records, such as voter registration databases or consumer profiles, which may contain overlapping quasi-identifiers like birthdate, gender, and geographic location. Sweeney’s work on k-anonymity, for example, reported that 87% of the U.S. population had characteristics that likely made them unique based only on 5-digit ZIP code, gender, and date of birth.

For instance, a particular combination of an individual’s age range, general location, and a specific metabolic marker, such as elevated fasting insulin or a precise testosterone level, could become sufficiently unique to pinpoint that individual within a larger, “anonymized” dataset when correlated with other public information.
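A linkage attack of this kind amounts to a join on quasi-identifiers. The sketch below assumes two small, entirely invented tables: an "anonymized" wellness dataset and a public register that shares the hypothetical fields `birth_year`, `sex`, and `zip3`. Any anonymized row that matches exactly one public record is treated as re-identified.

```python
from collections import defaultdict

# Hypothetical quasi-identifiers present in both datasets.
QUASI_IDENTIFIERS = ("birth_year", "sex", "zip3")

def link(anonymized_rows, public_rows, keys=QUASI_IDENTIFIERS):
    """Return {row_id: name} for anonymized rows matching exactly one public record."""
    index = defaultdict(list)
    for person in public_rows:
        index[tuple(person[k] for k in keys)].append(person["name"])

    matches = {}
    for row in anonymized_rows:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match means re-identification
            matches[row["row_id"]] = candidates[0]
    return matches

# Invented data: names removed, but quasi-identifiers retained.
anonymized = [
    {"row_id": 1, "birth_year": 1978, "sex": "M", "zip3": "941", "fasting_insulin": 24.0},
    {"row_id": 2, "birth_year": 1985, "sex": "F", "zip3": "100", "fasting_insulin": 8.5},
    {"row_id": 3, "birth_year": 1985, "sex": "F", "zip3": "100", "fasting_insulin": 11.2},
]
public = [
    {"name": "Alex Example", "birth_year": 1978, "sex": "M", "zip3": "941"},
    {"name": "Blair Sample", "birth_year": 1985, "sex": "F", "zip3": "100"},
    {"name": "Casey Placeholder", "birth_year": 1985, "sex": "F", "zip3": "100"},
]

reidentified = link(anonymized, public)
print(reidentified)
```

In this toy data, row 1 is the only person with its combination of attributes, so it links to a single name, while rows 2 and 3 share their combination and stay ambiguous. Adding one more distinguishing attribute to either dataset would be enough to separate them.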

Re-identification risks persist due to linkage attacks, where seemingly anonymous health data combines with public records to reveal identities.

Wellness programs frequently gather highly sensitive biometric and health risk assessment data, often through devices like fitness trackers or detailed surveys. This data, which includes intricate hormonal profiles and metabolic indicators, can possess a profound level of specificity.

For instance, a participant in a testosterone optimization protocol might have a unique combination of weekly testosterone cypionate dosage, gonadorelin administration frequency, and anastrozole usage, alongside specific lab values for total and free testosterone, estradiol, and luteinizing hormone. Even if these details are generalized, the sheer uniqueness of such a multi-faceted protocol creates an identifiable “endocrine signature.”

- Generalization: Replacing exact values with broader categories, like age ranges or generalized geographical areas.

- Perturbation: Introducing minor, random alterations to data points to obscure their precision.

- Suppression: Omitting highly unique or sensitive data fields entirely from the dataset.

- Masking: Substituting original sensitive data with fictional equivalents that maintain statistical properties.

The regulatory landscape surrounding wellness program data can also introduce vulnerabilities. Many wellness programs operate outside the stringent protections of the Health Insurance Portability and Accountability Act (HIPAA), particularly if they are not directly tied to an employer’s group health plan.

This regulatory gap means that vendors might share employee health information with various third parties, often under privacy policies that lack transparent explanations of data usage and retention. The absence of comprehensive oversight increases the risk of re-identification, as data may traverse numerous entities without consistent privacy safeguards.

Do Current Anonymization Methods Suffice for Hormonal Data?

The efficacy of traditional anonymization techniques diminishes significantly when applied to the rich, multi-dimensional data characteristic of hormonal health and metabolic function. A specific protocol, such as a female hormone balance regimen involving low-dose testosterone cypionate injections combined with progesterone, alongside unique symptoms like irregular cycles or mood changes, forms a highly distinct data pattern.

When this intricate pattern exists within a smaller dataset, or when combined with even a few demographic quasi-identifiers, the probability of re-identification escalates considerably. The inherent complexity and individuality of the endocrine system present an ongoing challenge to achieving truly irreversible data anonymity.

Consider the data from peptide therapies. An individual using Sermorelin for growth hormone support, alongside PT-141 for sexual health, and Pentadeca Arginate for tissue repair, generates a highly specific therapeutic profile. This profile, when linked with other health markers, can become a unique identifier. The goal of anonymization, therefore, often clashes with the desire to retain data utility for meaningful research and personalized wellness insights.

| Technique | Description | Re-identification Risk | Data Utility Impact |
|---|---|---|---|
| Generalization | Replacing specific values with broader categories (e.g. age ranges). | Moderate to high; susceptible to linkage attacks. | Moderate reduction; precision is lost. |
| Perturbation | Adding random noise to data to slightly alter values. | Moderate, depending on noise level. | Variable; higher noise reduces utility. |
| Suppression | Removing unique or sensitive attributes entirely. | Lower. | High reduction for specific analyses; dataset completeness suffers. |
| Masking | Substituting real data with synthetic, fictional equivalents. | Low to moderate; higher if the substitution is not statistically robust. | Variable, dependent on masking quality. |

Academic

The aspiration for absolute and permanent anonymity in health data, particularly within the context of nuanced physiological profiles from wellness programs, encounters significant theoretical and practical impediments. Contemporary understanding of data privacy acknowledges that “anonymity” functions as a dynamic continuum, not a static state, constantly challenged by advances in computational power and data linkage methodologies. The inherent complexity of biological data, reflecting the intricate dance of the endocrine system, renders traditional anonymization techniques increasingly vulnerable to sophisticated re-identification attempts.

Unmasking the Endocrine Signature through Advanced Analytics

The re-identification risk escalates when considering the multi-omic nature of modern health data. Wellness programs often collect a rich tapestry of information, encompassing not only basic demographics but also detailed biometric screenings, genetic markers, microbiome data, and comprehensive hormonal panels.

This granular data, even when stripped of direct identifiers, forms a highly dimensional space where unique patterns emerge. A specific individual’s hormonal trajectory, perhaps characterized by a unique diurnal cortisol rhythm, a particular thyroid hormone ratio, or a response curve to a peptide therapy, can serve as a potent quasi-identifier.

When combined with even a few demographic data points, such as a generalized age group or a regional ZIP code, this “endocrine signature” becomes statistically unlikely to match more than one individual, making re-identification feasible.

Multi-omic health data, including unique hormonal trajectories, can create identifiable endocrine signatures despite anonymization.

The statistical challenge of achieving robust anonymity in high-dimensional datasets is profound. Researchers have demonstrated that with a sufficient number of seemingly innocuous attributes, the probability of re-identifying an individual approaches certainty. For example, a 2019 study by Rocher, Hendrickx, and de Montjoye estimated that 99.98% of Americans would be correctly re-identified in any dataset using 15 demographic attributes.

The inclusion of highly specific physiological data, such as a unique combination of growth hormone peptide therapy (e.g. Ipamorelin/CJC-1295 dosage and frequency) alongside a particular metabolic panel, significantly reduces the ‘crowd size’ within which an individual can hide, thereby diminishing the effectiveness of k-anonymity-based approaches.
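The shrinking "crowd size" can be made concrete by measuring k, the size of the smallest group of records that share the same quasi-identifier values. The following sketch uses invented rows and hypothetical field names (`age_range`, `region`, `protocol`) to show how adding a single therapy attribute can collapse k.

```python
from collections import Counter

def k_anonymity(rows, quasi_identifiers):
    """Return k: the size of the smallest group sharing the same attribute values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())

# Invented, already-generalized records.
rows = [
    {"age_range": "40-49", "region": "West", "protocol": "TRT"},
    {"age_range": "40-49", "region": "West", "protocol": "TRT"},
    {"age_range": "40-49", "region": "West", "protocol": "TRT + peptide"},
    {"age_range": "50-59", "region": "East", "protocol": "TRT"},
    {"age_range": "50-59", "region": "East", "protocol": "TRT"},
    {"age_range": "50-59", "region": "East", "protocol": "TRT"},
]

k_demographics = k_anonymity(rows, ["age_range", "region"])
k_with_protocol = k_anonymity(rows, ["age_range", "region", "protocol"])
print(k_demographics, k_with_protocol)  # prints: 3 1
```

On demographics alone, every participant hides among at least three people. Once the protocol column is included, one participant forms a group of one, so the dataset is no longer k-anonymous for any k above 1.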

Differential Privacy and Synthetic Data as Advanced Safeguards

Addressing these challenges requires a shift towards more mathematically rigorous privacy-preserving mechanisms. Differential privacy stands as a robust framework that introduces controlled noise into data queries or datasets, ensuring that the presence or absence of any single individual’s data does not significantly alter the analytical outcome.

This approach offers a quantifiable privacy guarantee, expressed through a parameter called epsilon, which governs the trade-off between privacy and data utility: a smaller epsilon means more noise and stronger privacy, while a larger epsilon preserves more accuracy at the cost of weaker protection. Implementing differential privacy for complex endocrine data demands careful calibration of this epsilon, balancing the need to obscure individual contributions with the imperative to preserve the nuanced biological relationships necessary for clinical insights.
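One standard way to realize this guarantee for a simple counting query is the Laplace mechanism, sketched below. Because adding or removing one person changes a count by at most 1, the noise is drawn from a Laplace distribution with scale 1/epsilon. The insulin values and the threshold of 20 are invented for illustration, and the function names are hypothetical; the Laplace sample is built from two exponential draws because Python's standard library has no direct Laplace sampler.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Differentially private count: true count plus Laplace(1/epsilon) noise.

    A counting query has sensitivity 1, so scale = 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Invented fasting insulin values; the threshold of 20 is an assumption.
fasting_insulin = [6.1, 24.3, 9.8, 31.0, 12.4, 22.7, 7.5, 18.9]

def is_elevated(value):
    return value > 20

rng = random.Random(42)  # fixed seed so the sketch is repeatable
print("strong privacy (epsilon=0.1):", private_count(fasting_insulin, is_elevated, 0.1, rng))
print("weak privacy   (epsilon=5.0):", private_count(fasting_insulin, is_elevated, 5.0, rng))
```

With epsilon at 0.1 the reported count can be far from the true value of 3, which protects individuals but blurs the result; with epsilon at 5.0 the answer is usually close to 3, at the cost of a weaker guarantee.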

Synthetic data generation presents another promising avenue. This method involves creating entirely artificial datasets that statistically mimic the properties and patterns of real health data without containing any actual patient records. Advanced machine learning techniques, such as Generative Adversarial Networks (GANs), can produce synthetic datasets that replicate complex biological distributions, including those found in hormonal and metabolic profiles.

This allows for extensive research, algorithm training, and model development without directly exposing sensitive patient information. The utility of synthetic data for personalized wellness protocols is substantial, enabling the testing of new therapeutic strategies, such as optimizing TRT dosages or peptide combinations, in a privacy-preserving environment.
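The basic idea of synthetic data can be illustrated without a GAN. The sketch below swaps in a much simpler generator, a bivariate Gaussian fitted to a handful of invented (total testosterone, estradiol) pairs, and then samples new pairs from it. All values and function names are assumptions for the example, and real hormonal data would rarely be this well behaved.

```python
import math
import random
import statistics

def fit_bivariate(pairs):
    """Estimate means, standard deviations, and correlation of (x, y) pairs."""
    xs, ys = zip(*pairs)
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sx, sy = statistics.stdev(xs), statistics.stdev(ys)
    cov = sum((x - mx) * (y - my) for x, y in pairs) / (len(pairs) - 1)
    return mx, my, sx, sy, cov / (sx * sy)

def sample_synthetic(params, n, rng):
    """Draw n artificial pairs that follow the fitted means and correlation."""
    mx, my, sx, sy, rho = params
    residual = math.sqrt(max(0.0, 1.0 - rho * rho))  # guard against rounding
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x = mx + sx * z1
        y = my + sy * (rho * z1 + residual * z2)
        rows.append((x, y))
    return rows

# Invented (total testosterone ng/dL, estradiol pg/mL) pairs.
real_pairs = [
    (450, 22), (520, 27), (610, 31), (380, 19),
    (700, 35), (560, 26), (480, 25), (640, 30),
]

params = fit_bivariate(real_pairs)
synthetic = sample_synthetic(params, 5000, random.Random(1))
refit = fit_bivariate(synthetic)
print("real mean testosterone:     ", round(params[0], 1))
print("synthetic mean testosterone:", round(refit[0], 1))
```

The synthetic pairs reproduce the averages and the testosterone-estradiol relationship without copying any original row. A generator that captures richer structure, such as a GAN, follows the same fit-then-sample pattern, and in either case the privacy benefit depends on the model not memorizing individual records.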

Balancing Utility and Privacy in Endocrine Research

The application of these advanced techniques to endocrine data offers a pathway for research and innovation in personalized wellness while upholding privacy. For instance, synthetic datasets can train AI models to predict optimal hormonal optimization protocols for men experiencing hypogonadism, considering factors like testosterone cypionate, gonadorelin, and anastrozole usage, without ever touching real patient data.

Similarly, synthetic data can simulate outcomes for women on low-dose testosterone and progesterone regimens, aiding in the refinement of dosage strategies for conditions like perimenopause. The precision of these models, however, depends on the fidelity of the synthetic data to the original biological distributions, representing an ongoing area of research and refinement.

The interconnectedness of the hypothalamic-pituitary-gonadal (HPG) axis, the hypothalamic-pituitary-adrenal (HPA) axis, and metabolic pathways means that a single hormonal data point rarely exists in isolation. Alterations in one hormone, such as cortisol from the HPA axis, can influence gonadotropin-releasing hormone (GnRH) pulsatility and subsequent testosterone production.

This intricate web of interactions implies that even subtle patterns across various seemingly anonymized data points can collectively reveal a highly specific individual profile. Truly anonymizing such interconnected, high-resolution biological data necessitates a deep understanding of these systemic interdependencies to prevent re-identification through inference.

| Technique | Core Mechanism | Privacy Guarantee | Data Utility for Endocrine Health |
|---|---|---|---|
| Differential Privacy | Adds calibrated noise to data or query results. | Mathematically provable; strong against sophisticated attacks. | Preserves aggregate trends; less precise for individual-level insights. |
| Synthetic Data Generation | Creates artificial datasets mimicking statistical properties. | Potentially high, since records have no one-to-one link to real individuals; depends on the generator not memorizing training records. | Well suited to model training, testing, and simulating complex biological interactions. |

What Are the Long-Term Implications for Data Privacy in Personalized Wellness?

The trajectory of personalized wellness, driven by increasingly sophisticated data collection and analytical capabilities, necessitates a continuous re-evaluation of data anonymity. The challenge extends beyond technical solutions to encompass ethical frameworks and regulatory agility. As our understanding of human physiology deepens, and as wellness programs collect more granular and interconnected biological data, the potential for unintended re-identification increases.

This demands a proactive stance from individuals and institutions, fostering a culture of transparency and accountability regarding data stewardship. The ongoing dialogue between scientific advancement and individual autonomy shapes the future of health data privacy.

References

- Sweeney, Latanya. “k-Anonymity: A Model for Protecting Privacy.” International Journal on Uncertainty, Fuzziness and Knowledge-Based Systems, vol. 10, no. 5, 2002, pp. 557-570.

- Rocher, Luc, Julien Hendrickx, and Yves-Alexandre de Montjoye. “Estimating the success of re-identification in incomplete datasets using generative models.” Nature Communications, vol. 10, no. 1, 2019, p. 3069.

- Dwork, Cynthia. “Differential Privacy: A Survey of Results.” International Conference on Theory and Applications of Models of Computation, Springer, Berlin, Heidelberg, 2008, pp. 1-19.

- Ohno-Machado, Lucila, et al. “Data anonymization and de-identification in the biomedical literature: A scoping review.” Journal of Medical Internet Research, vol. 21, no. 5, 2019, e12044.

- Li, Ninghui, Tiancheng Li, and Suresh Venkatasubramanian. “t-Closeness: Privacy Beyond k-Anonymity and l-Diversity.” 2007 IEEE 23rd International Conference on Data Engineering, IEEE, 2007, pp. 106-115.

- Guyton, Arthur C. and John E. Hall. Textbook of Medical Physiology. 13th ed. Elsevier, 2016.

- Dwork, Cynthia, and Aaron Roth. “The Algorithmic Foundations of Differential Privacy.” Foundations and Trends in Theoretical Computer Science, vol. 9, no. 3-4, 2014, pp. 211-407.

- Abadi, Martin, et al. “Deep Learning with Differential Privacy.” Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, ACM, 2016, pp. 308-318.

- Bowes, Janet B. and Brenda Dervin. “The Role of Context in Information Seeking and Use.” Annual Review of Information Science and Technology, vol. 19, 1984, pp. 281-314.

Reflection

Understanding the intricate relationship between your biological systems and the digital footprint of your health data represents a significant step on your personal wellness path. The knowledge gained regarding data anonymization and its limitations provides a foundation for more informed decisions.

Your health journey, with its unique physiological nuances, requires an engaged perspective on how personal information is managed. Moving forward, consider this understanding a powerful tool, guiding your interactions with wellness programs and empowering you to seek protocols that honor both your aspirations for vitality and your right to privacy.